Usermind Journey

I'm not saying that Usermind calls itself a JOE. Its self-description is “the first unified platform for orchestrating business operations”. But the company uses the language of journeys and customer data stores. So although they see themselves as enabling all kinds of business processes, I think it’s fair to view them largely in the context of customer management.

Usermind is all about simplicity. Its main screen sets the tone by offering just three tabs: Analytics, Journeys, and Integration. Deploying the system actually starts with the last of these, Integration, which is where the user connects to external systems that are both data sources and execution engines. The company lists about a dozen standard integrations including major marketing automation, CRM, email, customer service, collaboration, and analytics systems. Another half-dozen are “coming soon.”

A key feature of Usermind is that it makes integration easy by reading the contents of the source systems automatically, so any custom data elements or objects are incorporated without user effort. This also means it adjusts to changes in those systems automatically. Users do build maps that show which fields to use to link customers (or other entities) across systems: for example, a map might use email address to link marketing automation to CRM, and customer ID to link CRM to customer service. The system can also map on combinations of fields and do fuzzy matching on inconsistent data. There can be separate maps for individuals, companies, products, customers, partners, or whatever other entities the user wants to work with. Usermind figures out relationships among tables or objects within each source system, so users simply see a list of available fields without having to worry about the underlying data structures.

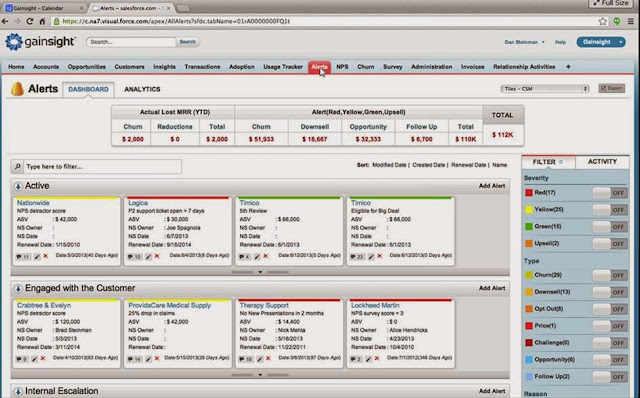
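To make the mapping idea concrete, here is a rough Python sketch of linking records across systems on mapped key fields, with a simple fuzzy comparison for inconsistent values. This is not Usermind's implementation; the record layouts, the `link` and `fuzzy_equal` helpers, and the 0.85 threshold are all assumptions made up for illustration.

```python
from difflib import SequenceMatcher

# Hypothetical records from three source systems (field names are illustrative).
marketing = [{"email": "Ann@Example.com", "score": 82}]
crm = [{"email": "ann@example.com", "customer_id": "C-100", "company": "Acme Corp."}]
service = [{"customer_id": "C-100", "open_tickets": 2}]


def link(left, right, left_key, right_key, normalize=str):
    """Join two record lists on a mapped key pair, merging matched records."""
    index = {normalize(r[right_key]): r for r in right}
    linked = []
    for rec in left:
        match = index.get(normalize(rec[left_key]))
        if match is not None:
            # On overlapping field names, the right-hand record's value wins.
            linked.append({**rec, **match})
    return linked


def fuzzy_equal(a, b, threshold=0.85):
    """Rough similarity test for inconsistent values such as company names."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold


def clean_email(value):
    """Normalize email addresses so case and stray spaces do not block a match."""
    return str(value).strip().lower()


# Map 1: marketing automation -> CRM on email address.
step1 = link(marketing, crm, "email", "email", normalize=clean_email)
# Map 2: CRM -> customer service on customer ID.
profiles = link(step1, service, "customer_id", "customer_id")
```

After both maps run, `profiles` holds one combined record carrying the marketing score, the CRM company, and the service ticket count. A real product would presumably also handle duplicates and multi-field keys, which this sketch leaves out.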

Once the maps are in place, Usermind copies selected data elements into its own database, where they are available to use in journeys. Each journey is a sequence of milestones, which can each contain one or more rules. Each rule has selection conditions and one or more actions to take if the conditions are met. Actions can push data or tasks back to the source systems. Rules can be triggered by events or executed on a schedule.

Usermind Rule
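The journey, milestone, and rule structure described above could be modeled roughly as follows. This is only a sketch of the concept: the class names, the `run_journey` function, the customer fields, and the action strings are hypothetical, and actions here simply return a description of the task they would push to a source system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Rule:
    condition: Callable[[dict], bool]      # selection condition
    actions: List[Callable[[dict], str]]   # actions taken when the condition holds


@dataclass
class Milestone:
    name: str
    rules: List[Rule] = field(default_factory=list)


def run_journey(milestones, customer):
    """Evaluate every rule in every milestone and collect the actions that fire."""
    fired = []
    for milestone in milestones:
        for rule in milestone.rules:
            if rule.condition(customer):
                fired.extend(action(customer) for action in rule.actions)
    return fired


# Hypothetical two-milestone journey; each action names the system it would call.
journey = [
    Milestone("Trial", [
        Rule(lambda c: c["logins"] == 0,
             [lambda c: f"crm:create_task:call {c['id']}"]),
    ]),
    Milestone("Onboarded", [
        Rule(lambda c: c["open_tickets"] > 1,
             [lambda c: f"service:escalate:{c['id']}"]),
    ]),
]

tasks = run_journey(journey, {"id": "C-100", "logins": 0, "open_tickets": 2})
```

In this sketch the rules run in a single pass. Event triggers and scheduled execution, which the product supports, would decide when `run_journey` gets called and are not modeled here.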

And that’s pretty much it. The Analytics tab reports on movement of customers through journeys, providing counts, conversion rates and drop-out rates for each milestone. It also analyzes the impact of actions on results. The system can be connected to business intelligence tools for more advanced reporting. But there’s no predictive analytics, content creation, or message execution. True to its description, Usermind is designed to orchestrate actions in other systems, not take actions itself.
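For readers who want the milestone metrics spelled out, here is one plausible way to compute them in Python. The definitions are my assumption, not Usermind's documented formulas: conversion rate is taken as the share of customers at one milestone who reach the next, and drop-out rate as the remainder. The counts are invented.

```python
def milestone_report(counts):
    """Compute conversion and drop-out rates between consecutive milestones.

    `counts` is an ordered list of (milestone name, customers reaching it).
    The final milestone has no successor, so it gets no row.
    """
    report = []
    for (name, entered), (_, advanced) in zip(counts, counts[1:]):
        conversion = advanced / entered if entered else 0.0
        report.append({
            "milestone": name,
            "entered": entered,
            "conversion_rate": conversion,
            "drop_out_rate": 1 - conversion,
        })
    return report


# Invented counts for a three-milestone journey.
report = milestone_report([("Trial", 200), ("Onboarded", 120), ("Renewed", 90)])
```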

Don’t let that simplicity fool you. Usermind (and other JOEs) address the critical challenge of unifying customer data from different sources and coordinating customer treatments. Tools to make this easy are rare; tools to send emails and deliver other messages are not. So Usermind fills an important gap – which is why the company has attracted $22 million in venture funding since it was founded in 2013, and why its investors waited until this year for it to launch the actual product. (Whether they waited patiently is a question I didn’t ask.) As of March, the company reported 15 live customers and was actively looking for more.

You may be wondering whether Usermind can truly be called a JOE since I've defined the essence of JOE-ness as a system that discovers the customer journey for itself rather than relying on the user to define it. Usermind doesn’t pass that test. In fact, Usermind journeys are individual processes rather than an overview of the customer’s lifetime experience. But Usermind still looks JOE-ish because it’s capturing events that occur naturally, not creating its own events like messages in a nurture flow. And its ability to use the journey as a framework for managing customer treatments is exactly what JOEs are all about. So marketers looking for a JOE should put Usermind on their list.