For some unknown reason, my last three presentations all started as headlines (two created by someone else) that I then wrote a speech to match. This isn’t my usual way of working. It does add a little suspense to the writing process – can I develop an argument to match the title? It also dawns on me that this is the way generative AI works: start with a prompt and create a supporting text. That’s an unsettling thought: are humans now imitating AI instead of the other way around? Or have I already been replaced by an AI but just don’t realize it? How would I even know?

The latest in this series of prompt-generated presentations started when I noticed that the title shown in a conference agenda didn’t match the speech I had prepared. When I pointed this out, the conference organizers said I could give any speech I want, but the problem was, I really liked their title: “Unleashing the Power of Customer Data Platforms (CDPs) and AI: A Game-Changer for Modern Marketing”.

The idea of “unleashing” a CDP to run wild and exercise all its powers is not something I get to talk about very often, since most of my presentations are about practical topics like defining a CDP, selecting one, or deploying one. And I love how “and AI” is casually tucked into the title like an afterthought: “there’s this little thing called AI, perhaps you’ve heard of it?”

And how about “game changer for modern marketing”? That’s an amazing promise to make: not only will you learn the true meaning of modern marketing, but you’ll find out how to change its very nature so it’s a game you can win. Who wouldn’t want to learn that?

This was definitely a speech I wanted to hear. The only problem was, the only way for that to happen was for me to write it. So I did. Here’s a lightly modified version.

Let’s start with the goal: winning the modern marketing game. The object of the game is quite simple: deliver the optimal experience for each customer across all interactions. And, when I say all interactions, I mean all interactions: not just marketing interactions, but every interaction from initial advertising impressions through product purchase, use, service, and disposal. I also mean every touch point, from the Internet and email through call centers, repair services, and the product itself.

That’s a broad definition, and you will immediately see the first challenge: all the departments outside of marketing may not want to let marketing take charge of their customer interactions. Nor is your company’s senior management necessarily interested in giving marketing so much authority. So marketing’s role in many interactions may be more of an advisor. The best you can hope for is that marketing is given a seat at the table when those departments set their policies and set up their systems. This requires a cooperative rather than a controlling attitude among marketers.

The second challenge to winning at marketing is the fragmented nature of data and systems. Most marketing departments have a dozen or more systems with customer data; at global organizations, the number can reach into the hundreds. Expanding the scope to include non-marketing systems that interact with customers adds still more sources such as contact centers and support websites. Again, marketing will rarely control these. At best, they may have an option to insert marketing recommendations directly into the customer experience, such as suggesting next best actions to call center agents.

The third challenge is optimization itself. It’s not always clear what action will result in the best long-term results. A proper answer requires capturing data on how customers are treated and how they later behaved as a result. Some of this will come from non-marketing systems, such as call center records of actions taken and accounting system records of purchases. Again, those systems’ owners may not be eager to share their data, although it’s harder for them to argue against sharing historical information than against sharing control over actual customer interactions.

But the challenge of optimization extends beyond data access. Really understanding the drivers of customer behavior requires deep analysis and no small amount of human insight. Some questions can be answered through formal experiments with test and control groups. But the most important questions often can’t be defined in such narrow terms. Even defining the options to test, such as new offers or marketing messages, takes creative thought beyond what analysis alone can reveal.

And even if you could find the optimal treatment in each situation, the playing field itself keeps shifting. New products, offer structures, and channels change what treatments are available. New systems change the data that can be captured for analysis. New tools change the costs of actions such as creating customer-specific content. These all change the optimization equation: actions that once required expensive human labor can now be done cheaply with automation; fluctuating product costs and prices change the value of different actions; evolving customer attitudes towards privacy and service change the appeal of different offers. The optimal customer experience is a moving target, if not an entirely mythical one.

None of this is news, or really even new: marketing has always been hard. The question is how “unleashing” the power of CDPs and AI makes a difference.

Let’s start with a framework. If you think of modern marketing as a game, then the players have three types of equipment: data systems to collect and organize customer information; decision systems to select customer experiences; and delivery systems to execute those experiences. It’s quite clear that the CDP maps into the data layer and AI maps into the decision layer. This raises the question of what maps into the delivery layer. We’ll return to that later.

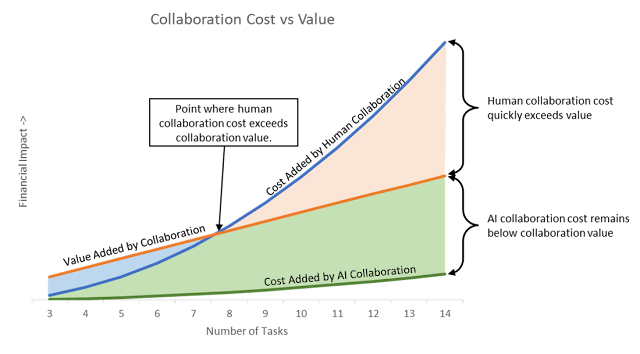

First, we have to answer the question: Why would CDP and AI be game changers? To understand that, you have to imagine, or remember, life before CDP and AI. Probably the best word for that is chaos. There are dozens – often hundreds – of data sources on the data layer, and dozens more systems making choices on the decision layer. The reason there are so many decision systems is that each channel usually makes its own choices. Even when channels share centralized decision systems, those are often specialized services that deal with one problem, whether it’s product recommendations or churn predictions or audience segmentation.

CDP and AI promise to end the chaos by consolidating all those systems into one customer data source and one decision engine. Each of these would be a huge improvement by itself:

- the CDP makes complete, consistent customer data available to all decision systems, and
- AI enables a single decision engine to coordinate and optimize custom experiences for each individual.

Yes, we’ve had journey orchestration engines and experience orchestration engines available for quite some time, but those work at the segment level. What’s unique about AI is not simply that it can power unified decisions, but that each decision can be tailored to the unique individual.

But we’re talking about more than the advantages of CDP and AI by themselves. We’re talking about the combination, and what makes that a game-changer. The answer is you get a huge leap in what’s possible when that personalized AI decisioning is connected to a unified source of all customer information.

Remember, AI is only as good as the data you feed it. You won’t get anything near the full value of AI if it’s struggling with partial information, or if different bits of information are made available to different AI functions. Connecting the AI to the CDP solves that problem: once any new bit of information is loaded into the CDP, it’s immediately available to every AI service. This means the AI is always working with complete and up-to-date data, and it’s vastly easier to add new sources of customer data because they only have to be integrated once, into the CDP, to become available everywhere.
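To make the “integrate once, available everywhere” point concrete, here is a minimal Python sketch. Everything in it is a hypothetical stand-in: `UnifiedProfiles` is not any real CDP’s API, and the two “AI services” are simple rules rather than actual models. It only shows that when a new source is loaded once into the shared profile, every service reading that profile sees it right away.

```python
class UnifiedProfiles:
    """Hypothetical stand-in for a CDP: every source loads into one shared profile."""

    def __init__(self):
        self._profiles = {}

    def load(self, customer_id, attributes):
        # Each source is integrated once, here; the merged data is visible to all readers.
        self._profiles.setdefault(customer_id, {}).update(attributes)

    def profile(self, customer_id):
        # Return a copy so that readers cannot change the stored profile by accident.
        return dict(self._profiles.get(customer_id, {}))


# Two toy "AI services" (plain rules, for illustration only) that read the same profile.
def churn_risk(profile):
    return profile.get("open_support_tickets", 0) >= 2


def next_offer(profile):
    if profile.get("open_support_tickets", 0) >= 2:
        return "service_credit"
    return "loyalty_upgrade"


cdp = UnifiedProfiles()
cdp.load("c1", {"email": "ann@example.com", "lifetime_purchases": 7})
before = (churn_risk(cdp.profile("c1")), next_offer(cdp.profile("c1")))

# A new source (support tickets) is loaded once and both services pick it up.
cdp.load("c1", {"open_support_tickets": 3})
after = (churn_risk(cdp.profile("c1")), next_offer(cdp.profile("c1")))
```

In this sketch neither service had to be changed or reconnected when the support data arrived, which is the practical benefit of routing every source through the CDP.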

To put it in more concrete terms, one unified decision system can coordinate and optimize individual-specific customer experiences across all touch points based on one unified set of customer data.

That is indeed a game-changer for modern marketing, and it only reaches its full potential if you “unleash” the CDP to consolidate all your customer data, and “unleash” the AI to make all of your experience decisions.

That’s a lot of unleashing. I hope you find it exciting, but you should also be a little bit scared. The question to ask is: How can I take advantage of this ‘game changing potential’ without risking everything on technology that is relatively new and, in the case of AI, largely unproven? Here’s what I would suggest:

Let’s start with CDP. So far, I’ve been using the term without defining it. I hope you know that a CDP creates unified customer profiles that can be shared with any other system. No need to get into the technical details here. What’s important is that the CDP collects data from all your source systems, combines it to build complete customer profiles, and makes them available for any and every purpose.

Some people reading this will already have a CDP in place but most probably do not. So my first bit of advice is: Get one. It’s not such easy advice to follow: a CDP is a big project and there are dozens of vendors to choose from, so you have to work carefully to find a system that fits your needs and then you have to convince the rest of your organization that it’s worth the investment. I won't go into how to do that right now. But probably the most important pro tips are: base your selection on requirements that are directly tied to your business needs, and ensure you keep all stakeholders engaged throughout the entire selection process. If you do those two things, you can be pretty sure you’ll buy a CDP that’s useful and actually used.

That said, there are some specific requirements you’ll want to consider that tie directly to ensuring your CDP will support your AI system. One is to make sure you buy a system that can handle all data types, retain all details, and handle any data volume. This does NOT mean you should load in every scrap of customer-related data that you can find. That would be a huge waste. You’ll want to start with a core set of customer data that you clearly need, which will be data that your decision and delivery systems are already working with. This lets the CDP become their primary data source. Beyond that, load additional data as the demand arises and when you’re confident the value created is worth the added cost.

The second requirement is to ensure your CDP can support real time access to its data. That’s another complicated topic because there are different kinds of real time processes. What you want as a minimum is the ability to read a single customer profile in real time, for example to support a personalization request. And you want your CDP to be able to respond in real time to events such as a dropped shopping cart. Your AI system will need both of those capabilities to make the best customer experience decisions. What’s not included in that list is updating the customer profiles in real time as new data arrives, or rebuilding the identity graph that connects data from different sources to the same customer. Some CDPs can do those things in real time but most cannot. Only some applications really need them.
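The two minimum capabilities can be pictured with a small Python sketch. This is an illustration under loud assumptions: `RealTimeSketch`, its method names, and the `cart_abandoned` event name are all invented here and do not correspond to any vendor’s interface. It shows a single-profile read, an immediate reaction to an event, and the event being queued for a later profile update rather than applied in real time.

```python
class RealTimeSketch:
    """Hypothetical in-memory model of the two minimum real-time capabilities."""

    def __init__(self, profiles):
        self._profiles = dict(profiles)
        self._handlers = {}
        self.pending_updates = []  # events waiting for the next (non-real-time) profile rebuild

    def read_profile(self, customer_id):
        # Capability 1: read a single customer profile on request.
        return self._profiles.get(customer_id)

    def on_event(self, event_type, handler):
        self._handlers.setdefault(event_type, []).append(handler)

    def receive_event(self, event_type, customer_id, payload):
        # Capability 2: react to the event right away...
        profile = self.read_profile(customer_id)  # None if the customer is unknown
        responses = [h(profile, payload) for h in self._handlers.get(event_type, [])]
        # ...while the profile itself is only updated later, in a batch.
        self.pending_updates.append((event_type, customer_id, payload))
        return responses


def cart_reminder(profile, payload):
    return f"{profile['first_name']}, your {payload['item']} is still in your cart."


cdp = RealTimeSketch({"c1": {"first_name": "Ann"}})
cdp.on_event("cart_abandoned", cart_reminder)
messages = cdp.receive_event("cart_abandoned", "c1", {"item": "kettle"})
```

The stored profile is unchanged after the event in this sketch, which mirrors the distinction in the text: responding in real time does not require updating profiles in real time.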

The third requirement relates to identity management. The CDP needs to know which identifiers, such as email, telephone, device ID, and postal address, refer to the same customer. At CDP Institute, we don’t feel the CDP itself needs to find the matches between those identifiers. That’s because there’s lots of good outside software to do that. We do feel the CDP needs to be able to maintain a current list of matches, or identity graph, as matches are added or removed over time. That’s what lets the CDP combine data from different sources into unified profiles.
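As a rough sketch of what “maintaining a current list of matches” can look like, the Python below stores matches supplied from outside and answers the question “which identifiers belong with this one?” The class and method names are hypothetical, and the breadth-first lookup is just one simple way to do it, not a description of how any particular CDP works. The matching itself is assumed to happen in outside software.

```python
from collections import defaultdict, deque


class IdentityGraph:
    """Hypothetical store for identifier matches found by outside matching software."""

    def __init__(self):
        self._links = defaultdict(set)

    def add_match(self, a, b):
        self._links[a].add(b)
        self._links[b].add(a)

    def remove_match(self, a, b):
        self._links[a].discard(b)
        self._links[b].discard(a)

    def identifiers_for(self, identifier):
        """Return every identifier currently linked, directly or indirectly, to this one."""
        seen = {identifier}
        queue = deque([identifier])
        while queue:
            current = queue.popleft()
            for neighbor in self._links.get(current, ()):
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        return seen


graph = IdentityGraph()
graph.add_match("email:ann@example.com", "phone:555-0100")
graph.add_match("phone:555-0100", "device:abc123")
linked = graph.identifiers_for("email:ann@example.com")

# A match is later withdrawn, so the device no longer belongs to this customer.
graph.remove_match("phone:555-0100", "device:abc123")
linked_after_removal = graph.identifiers_for("email:ann@example.com")
```

Because lookups walk the current links each time, removing a match takes effect on the next lookup. That is the behavior needed to keep unified profiles correct as matches change.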

My second cluster of advice relates to AI. I’d be surprised if anyone reading this hasn’t at least tested ChatGPT, Bing Chat Search, or something similar. At the same time, there’s quite a bit of research showing that relatively few companies have moved beyond the testing stage to put the advanced AI tools into production.

And that’s really okay, so my first piece of advice is: don’t be hasty. You should certainly be testing and probably deploying some initial applications, but don’t feel you must plow full speed ahead or you’ll fall behind. Most of your competitors are moving slowly as well.

That said, you do need to train your people in using AI. They don’t necessarily need to become expert prompt writers, since the systems will keep getting smarter so specific prompting skills will become obsolete quickly. But they do need to build a basic understanding of what the systems can and can’t do and what it’s like to work with them. That will change less quickly than things like a user interface. The more familiar your associates become with AI, the less likely they are to ask it to do something it doesn’t do well or that creates problems for your company.

Third, pay close attention to AI’s ability to ingest and use your company’s own data. Remember the game-changing marketing application for AI is to create an optimal experience for each individual. This means it must be able to access that individual’s data. This is an ability that was barely available in tools like ChatGPT six months ago, but has now become increasingly common. Still, you can bet there will be huge differences in how different products handle this sort of data loading. Some will be designed with customer experience optimization in mind and some won’t. So be sure to look closely at the capabilities of the systems you consider.

Fourth, and closely related, the industry faces a huge backlog of unresolved issues relating to privacy, intellectual property ownership, security, bias, and accuracy. Again, these are all evolving with phenomenal speed, so it’s hard for anyone to keep up – even including the AI specialists themselves. Unless that’s your full time job, I suggest that you keep a general eye on those developments so you’re aware of issues that might come with any particular application you’re considering. Then, when you do begin to explore an application, you’ll know to bring in the experts to learn the current state of play.

Similarly, keep an eye on the new capabilities that AI systems are adding. This is also evolving at incredible speed. Some of those capabilities may change your opinion of what the systems can do well or poorly. Some will be directly relevant to your needs and may form the basis for powerful new applications. We’re still far away from the “one AI to rule them all” that will be the ultimate game-changer for marketing. But it’s coming, so be on the alert.

This brings us back to the third level of marketing technology: delivery. Will there be yet one more game-changer, a unified delivery system that offers the same simplification advantages as unified data and decision layers? The giant suite vendors like Salesforce and Adobe certainly hope so, as do the unified messaging platforms like Braze and Twilio. The fact that we can list those vendors offers something of an answer: some companies think a unified delivery layer is possible and would argue they already provide one. I’m not so confident because new channels keep popping up and it’s nearly impossible for any one vendor to support them all immediately.

What seems more likely is a hybrid approach where most companies have a core delivery platform that handles basic channels like email, websites, and advertising and supports third-party plug-ins to add channels or features it does not provide itself. These platforms are already common, so this is less a prediction of the future than an observation of the present, which seems likely to continue. The core delivery platform offers a single connection to the decision layer run by the AI. This gives the primary benefits gained from a single connection between layers, although I wouldn’t call it a game-changer only because it already exists.

My recommendation here is to adopt the delivery platform approach, seeking a platform that is as open as possible to plug-ins so it can coordinate experiences across as many channels as possible. In this view, the delivery platform is really an orchestration system. Which channels are actually delivered in the delivery platform and which are delivered by third-party plug-ins is relatively unimportant from an architectural point of view. Of course, vendors, marketers, and tech staff will all care a great deal about which tools your company chooses.

While we’re discussing architectural options, I should also mention that the big suites would argue that data, decision, and delivery layers should all be part of one unified product, reducing integration efforts to a minimum. That may well be appealing, but remember that most of the integrated suites were cobbled together from separate systems that were purchased by the vendors over time. Connecting all the bits can be almost as much work as connecting products from different vendors.

And, of course, relying on a single vendor for everything means accepting all parts of their suite – some of which may not be as good as tools you could purchase elsewhere. The good news is most suite vendors have connectors that enable users to use external systems instead of their own components for important functions. As always, you have to look in detail at the actual capabilities of each system before judging how well it can meet your needs.

So, where does this leave us?

We’ve seen that the object of the modern marketing game is to deliver the optimal experience for each customer. And we’ve seen that challenges to winning that game include organizational conflicts, fragmented data, and fragmented decision and delivery systems.

We’ve also seen that the combination of customer data platforms and AI systems can indeed be game-changing, because CDPs create the unified data that AI systems need to deliver experiences that are optimized with a previously impossible level of accuracy.

CDPs and AI won’t fix everything. Organizational conflicts will remain, since other departments can’t be expected to turn over all responsibility to marketing. And fragmentation will probably remain a feature of the delivery layer, simply because new opportunities appear too quickly for a single delivery system to provide everything.

In short, the game may change but it never ends. Build strong systems that can meet current requirements and can adapt easily to new ones. But never forget that change is inherently unpredictable, so even the most carefully crafted systems may prove inadequate or unexpectedly become obsolete. Adapting to those situations is where you’ll benefit from investment in the most important resource of all: people who will work to solve whatever problems come up in the interest of your company and its customers.

And remember that no matter how much the game changes, the goal is always the same: the best experience for each customer. Make sure everything you do has that goal in mind, and success will follow.