The CDP Institute just published its latest Industry Update, our semi-annual overview of CDP vendors with data on employment, funding, locations, and more. (Download here.) There were three pieces of information that stood out:

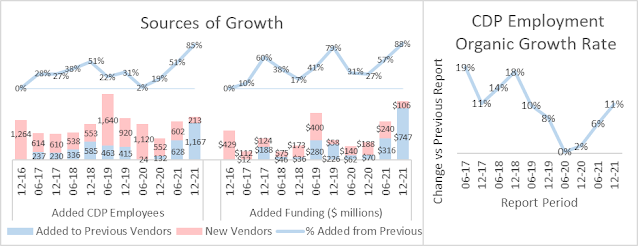

- Only four new vendors were added, compared with an average of fifteen in past reports.

- Four companies reported funding rounds over $100 million, compared with one round that size across all past reports.

- Nearly all employment growth (85%) came from previously listed vendors, compared with just 36% in past reports.

Of course, it makes sense that most growth would come from existing vendors if we added few new ones. But overall industry growth was in line with past trends, and actually a bit stronger: up 12% over the past six months. This meant that the growth rate of existing vendors was high enough to compensate for the “loss” of new vendors. In fact, the existing vendor growth of 11% was the highest since 2018, when the industry was just taking off.

Connecting these dots reveals a clear picture: an industry that has stopped attracting new entrants but is now growing strongly on its own, with leading vendors stockpiling funds to compete against each other in an elimination round where only a few can emerge as winners. Think Survivor meets Game of Thrones with a dash of Big Bang Theory.

It’s a picture that makes a lot of sense. Customer Data Platforms are now widely accepted as an essential component of a modern data architecture, so it’s a market worth fighting for. But the situation facing potential entrants is daunting:

- the leading independent CDP vendors now have mature products, big customer bases, high brand recognition, and lots of funding.

- enterprise software companies, including Salesforce, Adobe, Oracle, Microsoft, and SAP, are chipping away at the market by selling CDPs as part of their packages.

- marketing automation, customer support, ecommerce, and other vendors increasingly offer CDP modules baked into their own systems.

- IT teams show growing interest in building their own CDP equivalent, supported by a growing array of components that make the job easier.

Some mid-tier CDP vendors have already given up the fight and been acquired, most often by firms needing a CDP to anchor a multi-channel customer experience suite. The acquisition wave may have peaked, since there were just three acquisitions in the latest report, compared with a dozen over the previous two.

Among the remaining firms, some may compete successfully as generalists. But the more promising path in most cases will be to offer specialized products that can be the best in a particular niche. Those niches might be defined by a particular industry, region, company size, or CDP function.

The functional niches are most intriguing because they serve the growing market for CDP components. We’ve seen some movement in that direction, as vendors offer parts of their CDP as stand-alone modules for identity resolution, data collection, data distribution (“reverse ETL”), and campaign management. Those vendors see their modules as a point of entry into clients who will later buy more of their products. They may be right, but I wonder how many companies that buy best-of-breed components will reverse course by favoring components from a single source. What’s certain is that this approach exposes the CDP vendors to competition from point-solution specialists in each area while discarding the advantage that comes from purchasing a CDP with a full range of pre-integrated functions. Here's a sampling of that competitive landscape:

I also suspect that companies like Snowflake, Amazon Web Services, and Google Cloud Services, which now position themselves as providing one piece of a “composable” solution, will eventually add features that match what the independent component providers now offer. Actually, that’s already happening, so I don’t get much credit for predicting it. It’s a dynamic we’ve seen repeatedly in other markets: primary vendors expand their features to secure their position with clients by adding more value (hooray!) and increasing the cost of switching (boo!).

Let’s be clear: both the added value and the switching costs are the result of integration cost. No matter how many promises are made about easy integration, the fact remains that any non-trivial connection between two systems takes skill to plan, deploy, and maintain. Integration is often the top-ranked vendor selection criterion in surveys, which some see as showing that the problem is well understood. I draw the opposite conclusion: people list integration as a consideration because they know it’s poorly understood. This forces them to invest time in trying to avoid integration problems and even to sacrifice other benefits to achieve easy integration. If integration were really easy, no one would worry about it.

Right now, someone reading this is saying, “Ah, but no-code changes everything”. I don’t think so. No-code works best when automating simple processes with a few users where flaws are acceptable. The more complex, widely-deployed, and mission-critical a process is, the more important it is to deploy professional-grade design and quality control. Prebuilt components don’t change this: configuring those components and connecting them to each other still takes great care and understanding.

This isn’t (just) a cranky-old-man digression. CDP functions rank high in complexity, scale, and risk, so they are poor candidates for no-code development. CDPs certainly can have no-code interfaces that empower business users to do things that might otherwise require a developer. But those interfaces will control carefully defined and constrained tasks, not create core functionality. Assembling CDP-equivalent systems from composable functions is a different matter, and, yes, that should become increasingly possible for people with the right integration skills. What I doubt is that selling modules with those functions will be good business for CDP vendors: they are likely to commoditize their products and ultimately to be pushed aside by platform developers who integrate key functions directly.

I'm not saying that CDP vendors who can’t raise several hundred million dollars are doomed. I am saying that most will have to pick a niche to succeed. One promising option is building customer data profiles, especially for big enterprises. It’s a single function that incorporates enough separate components for CDP vendors to provide value by avoiding integration costs.

The other big niche, or set of niches, is integrated customer experience solutions. Our latest report already shows that campaign and delivery CDPs, our name for systems that do this, account for two-thirds of the industry vendor count and funding, and nearly three-quarters of employment. Their actual share may be greater still: immediately after completing the latest report, I happened to glance at G2 Crowd’s list of CDPs and found that our report doesn't include several large, fast-growing retail marketing automation or messaging specialists (Insider, Listrak, SALESmanago, Klaviyo, and Ometria) that offer what looks like CDP-grade multi-source profile building.

Whether those vendors are true CDPs depends on whether they make those profiles available to other systems. Either way, the point is that there’s a large and growing market for cross-channel retail marketing systems with unified customer profiles at their core. There are similar markets outside of retail, where we already see specialist campaign and delivery CDPs in hospitality, financial services, telecommunications, healthcare, education, and elsewhere.

The value of industry-specific systems is, once again, reduced integration cost. In this case, the key integration is with industry-specific operational systems such as airline reservations, core banking, phone billing, health records, and student management. Vendors in these niches compete primarily on the marketing functions they offer, which makes them more departmental than enterprise solutions and pushes them to add marketing features tailored to their particular industry. Systems like this are hard to dislodge once they’re deployed because they are populated with many complex, difficult-to-replicate campaigns, reports, predictive models, and content libraries. This stickiness is what enables many successful vendors to co-exist in each niche, and what makes it hard for non-specialists to enter.

The division of the CDP industry into enterprise-level data CDPs and industry-specific, department-level campaign and delivery CDPs is not a new trend. What is new is the maturity of the competitors within many niches, which will make it increasingly difficult for new entrants to succeed. What’s also new is that building CDP-style customer profiles is increasingly common, making it a standard feature rather than a product differentiator. This encourages vendors to position themselves as something other than a CDP, even though they need to show buyers that their CDP features are first-rate.

My final conclusion is this: the CDP industry will continue to grow, and it will remain important for buyers to find the right CDP, even as the CDP itself slips from the spotlight.