The new Web site I mentioned the other day is now up and running at http://www.raabassociatesinc.com/. This turned out to be an interesting little science project. I had originally figured to go the usual route of hiring a Web developer, but realized while I was assessing tools for this blog and MPM Toolkit that I could easily build the site myself in WordPress in a fraction of the time and nearly for free. Even more important, I can make changes at will. I also looked at the open source content management system Joomla, which would have given some additional capabilities. But Joomla had a steeper learning curve and didn't seem worth the bother, at least for now.

Part of the project was a new archive of my past articles, which is now technically its own WordPress blog. The big advantage vs. our old archive is that you now get a proper search function. Anybody interested in a trip down memory lane regarding marketing software is welcome to browse articles dating from 1993. I've always figured someone would write a doctoral thesis based on this stuff.

Monday, April 28, 2008

Thursday, April 24, 2008

WiseGuys Gives Small Firms Powerful List Selection Software

Like a doctor specialized in “diseases of the rich”, I've been writing mostly about technologies for large organizations: specialized databases, enterprise marketing systems, advanced business intelligence platforms. But the majority of businesses have nowhere near the resources needed to manage such systems. They still need sophisticated applications, but in versions that can be installed and operated with a minimum of technical assistance.

WiseGuys from Desktop Marketing Solutions, Inc. (DMSI) is a good example of the breed. It is basically a system to help direct marketers select names for catalog mailings and email. But while simple query engines rely on the marketer to know whom to pick, WiseGuys provides substantial help with that decision. More to the point (and this is the hallmark of a good small business product), it provides the refinements needed to make a system usable in the real world. In big enterprise products, these adjustments would be handled by technical staff through customization or configuration. In WiseGuys, marketers can control them directly.

Let’s start at the beginning. WiseGuys imports customer and transaction records from an external fulfillment system. During the import process, it does calculations including RFM (recency, frequency, monetary value) scoring, Lifetime Value, response attribution, promotion profitability, and cross-purchase ratios between product pairs. What’s important is that the system recognizes it can’t simply do those calculations on every record it gets. There will always be some customers, some products, some transactions, some campaigns or some date ranges that should be excluded for one reason or another. In fact, there will be different sets of exclusions for different purposes. WiseGuys handles this gracefully by letting users define multiple “setups”, each specifying a collection of records and the tasks that will apply to them. Thus, instead of one RFM score there might be several, each suited to a particular type of promotion or customer segment. These setups can be run once or refreshed automatically with each update.

The data import takes incremental changes in the source information – that is, new and updated customers and new transactions – rather than requiring a full reload. It identifies duplicate records, choosing the survivor based on recency, RFM score or presence of an email address as the user prefers. The system will combine the transaction history of the duplicates, but will not move information from one customer record to another. This means that if the surviving record lacks information such as the email address or telephone number, it will not be copied from a duplicate record that has it.
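To make the survivor rule concrete, here is a minimal sketch of how such a merge might work. The `Customer` fields, the `SURVIVOR_RULES` table and the `merge_duplicates` function are all hypothetical names chosen for illustration; nothing here is taken from WiseGuys itself.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional

@dataclass
class Customer:
    customer_id: str
    last_order: date
    rfm_score: int
    email: Optional[str] = None
    transactions: list = field(default_factory=list)

# Hypothetical survivor rules, one per option named in the text.
SURVIVOR_RULES = {
    "recency": lambda c: c.last_order,      # most recent order wins
    "rfm": lambda c: c.rfm_score,           # highest RFM score wins
    "email": lambda c: c.email is not None, # a record with an email wins
}

def merge_duplicates(duplicates, rule):
    """Pick one survivor and attach the combined transaction history."""
    survivor = max(duplicates, key=SURVIVOR_RULES[rule])
    combined = [t for c in duplicates for t in c.transactions]
    # Only transactions are combined. Fields such as email are NOT copied
    # from the losing records, matching the behavior described above.
    return Customer(survivor.customer_id, survivor.last_order,
                    survivor.rfm_score, survivor.email, combined)

a = Customer("A1", date(2008, 1, 1), 5, None, ["t1"])
b = Customer("B7", date(2007, 6, 1), 9, "b@example.com", ["t2", "t3"])
merged = merge_duplicates([a, b], "recency")
print(merged.customer_id, merged.email, len(merged.transactions))
```

With the recency rule, the survivor is the record without an email address, and the address on the losing record is simply dropped. That is the trade-off the paragraph describes.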

The matching process itself takes the simplistic approach of comparing the first few characters of the Zip Code, last name and street address. Although most modern systems are more sophisticated, DMSI says its method has proven adequate. One help is that the system can be integrated with AccuZip postal processing software to standardize addresses, which is critical to accurate character-based matching.
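A rough sketch of prefix-based matching follows. The prefix lengths, the field names (`zip`, `last_name`, `street`) and the normalization step are assumptions made for the example; DMSI's actual settings are not given in the text.

```python
from collections import defaultdict

def _normalize(text):
    """Uppercase and drop everything except letters and digits."""
    return "".join(ch for ch in text.upper() if ch.isalnum())

def match_key(zip_code, last_name, street, n_zip=5, n_name=4, n_street=6):
    """Build a key from the first few characters of each field.

    The prefix lengths are illustrative defaults, not DMSI's values.
    """
    return (_normalize(zip_code)[:n_zip],
            _normalize(last_name)[:n_name],
            _normalize(street)[:n_street])

def group_duplicates(records):
    """Return lists of record IDs that share the same match key."""
    groups = defaultdict(list)
    for rec in records:
        key = match_key(rec["zip"], rec["last_name"], rec["street"])
        groups[key].append(rec["id"])
    return [ids for ids in groups.values() if len(ids) > 1]

records = [
    {"id": 1, "zip": "10583-1234", "last_name": "Raab", "street": "123 Main Street"},
    {"id": 2, "zip": "10583", "last_name": "RAAB Jr.", "street": "123 Main St."},
    {"id": 3, "zip": "10583", "last_name": "Raab", "street": "125 Main St."},
]
print(group_duplicates(records))
```

The third record shows why address standardization matters: a one-digit difference in the house number puts it under a different key, and so would an unstandardized variant such as "One Twenty-Three Main".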

The matching process can also create an organization key to link individuals within a household or business. Selections can be limited to one person per organization. RFM scores can also be created for an organization by combining the transactions of its members.

As you’d expect, WiseGuys gives the user many options for how RFM is calculated. The basic calculation remains constant: the RFM score is the sum of scores for each of the three components. But the component scores can be based on user-specified ranges or on fixed divisions such as quintiles or quartiles. Users decide on the ranges separately for each component. They also specify the number of points assigned to each range. DMSI can calculate these values through a regression analysis based on reports extracted from the system.
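The additive calculation is easy to sketch. In the example below, the break points and point values in `RFM_SETUP` are invented for illustration, as are the field names; in practice they would come from the user's own ranges or from DMSI's regression.

```python
from bisect import bisect_right

# Hypothetical setup: for each component, ascending break points and the
# points awarded to each range (one more range than break points).
RFM_SETUP = {
    "recency_days": ([91, 181, 366], [40, 25, 10, 0]),  # fewer days is better
    "order_count": ([2, 5], [5, 15, 30]),
    "total_spend": ([100, 500], [5, 15, 30]),
}

def component_points(value, breaks, points):
    """Points for the range holding value.

    Ranges are value < breaks[0], breaks[0] <= value < breaks[1], and so on.
    """
    return points[bisect_right(breaks, value)]

def rfm_score(customer, setup=RFM_SETUP):
    """RFM score as the sum of the three component scores."""
    return sum(component_points(customer[name], breaks, points)
               for name, (breaks, points) in setup.items())

recent_buyer = {"recency_days": 45, "order_count": 3, "total_spend": 620}
lapsed_buyer = {"recency_days": 400, "order_count": 1, "total_spend": 50}
print(rfm_score(recent_buyer), rfm_score(lapsed_buyer))  # prints: 85 10
```

Supporting several setups, as described earlier, would just mean keeping several such tables and scoring against whichever one fits the promotion.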

Actual list selections can use RFM scores by themselves or in combination with other elements. Users can take all records above a specified score or take the highest-scoring records available to meet a specified quantity. Each selection can be assigned a catalog (campaign) code and source code and, optionally, a version code based on random splits for testing. The system can also flag customers in a control group that was selected for a promotion but withheld from the mailing. The same catalog code can be assigned to multiple selections for unified reporting. Unlike most marketing systems, WiseGuys does not maintain a separate campaign table with shared information such as costs and content details.
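The two selection modes and the random test split might look something like this. The function names are hypothetical, and treating the threshold as "at or above" is an assumption, since the text only says "above a specified score".

```python
import random

def select_above(scores, min_score):
    """Customer IDs scoring at or above min_score, best first.

    Including the threshold value itself is an assumption.
    """
    picked = [cid for cid, s in scores.items() if s >= min_score]
    return sorted(picked, key=lambda cid: (-scores[cid], cid))

def select_top(scores, quantity):
    """The highest-scoring customer IDs needed to fill a quantity."""
    return sorted(scores, key=lambda cid: (-scores[cid], cid))[:quantity]

def assign_versions(customer_ids, versions, seed=None):
    """Randomly split a selection into roughly equal version cells."""
    rng = random.Random(seed)
    shuffled = list(customer_ids)
    rng.shuffle(shuffled)
    return {cid: versions[i % len(versions)] for i, cid in enumerate(shuffled)}

scores = {"a": 85, "b": 40, "c": 60, "d": 60}
selection = select_above(scores, 60)
print(selection)
print(assign_versions(selection, ["A", "B"], seed=1))
```

A control group could be handled the same way, by treating "withheld" as one more version cell that is recorded in the promotion history but left out of the extract.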

Once selections are complete, users can review the list of customers and their individual information, such as last response date and number of promotions in the past year. Users can remove individual records from the list before it is extracted. The list can be generated in formats for mail and email delivery. The system automatically creates a promotion history record for each selected customer.

Response attribution also occurs during the file update. The system first matches any source codes captured with the orders against the list of promotion source codes. If no source code is available, it applies the orders based on promotions received by the customer, using either the earliest (typically for direct mail) or latest (for email) promotion in a user-specified time window.
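Here is one way the two-step attribution could be sketched. The dictionary layout and the `attribute_order` function are assumptions for the example, not the system's actual data model.

```python
from datetime import date, timedelta

def attribute_order(order, promotions, window_days, prefer="earliest"):
    """Return the source code an order should be credited to, or None.

    order: dict with customer_id, order_date and an optional source_code.
    promotions: list of dicts with customer_id, source_code, mail_date.
    """
    known_codes = {p["source_code"] for p in promotions}
    # Step 1: a captured source code that matches a promotion wins outright.
    if order.get("source_code") in known_codes:
        return order["source_code"]
    # Step 2: fall back to promotions this customer received in the window.
    window_start = order["order_date"] - timedelta(days=window_days)
    candidates = [p for p in promotions
                  if p["customer_id"] == order["customer_id"]
                  and window_start <= p["mail_date"] <= order["order_date"]]
    if not candidates:
        return None
    pick = min if prefer == "earliest" else max
    return pick(candidates, key=lambda p: p["mail_date"])["source_code"]

promotions = [
    {"customer_id": "c1", "source_code": "CAT-A", "mail_date": date(2008, 1, 10)},
    {"customer_id": "c1", "source_code": "EM-B", "mail_date": date(2008, 2, 20)},
]
order = {"customer_id": "c1", "order_date": date(2008, 3, 1), "source_code": None}
print(attribute_order(order, promotions, 90, "earliest"))  # prints: CAT-A
print(attribute_order(order, promotions, 90, "latest"))    # prints: EM-B
```

The same order gets credited to a different promotion depending on the earliest-or-latest setting, which is why the choice is left to the user.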

The response reports show detailed statistics by catalog, source and version codes, including mail date, mail quantity, responses, revenue, cost of goods, and derived measures such as profit per mail piece. Users can click on a row in the report and see the records of the individual responders as imported from the source systems. The system can also create response reports by RFM segment, which are extracted to calculate the RFM range scores. Other reports show Lifetime Value grouped by entry year, original source, customer status, business segment, time between first and most recent order, RFM scores, and other categories. The Lifetime Value figures only show cumulative past results (over a user-specified time frame): the system does not do LTV projections.

Cross sell reports show the percentage of customers who bought specific pairs of products. The system can use this to produce a customer list showing the three products each customer is most likely to purchase. DMSI says this has been used for email campaigns, particularly to sell consumables, with response rates of 7% to 30%. The system will generate a personalized URL that sends each customer to a custom Web site.
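A small sketch shows how pair ratios could drive a "top three" list. Ranking each unowned product by its best ratio across the products the customer already owns is an assumption; the text does not say how WiseGuys combines the ratios, and the function names are hypothetical.

```python
from collections import defaultdict
from itertools import permutations

def cross_purchase_ratios(baskets):
    """Share of buyers of product a who also bought product b.

    baskets maps customer ID to the set of products that customer bought.
    """
    owners = defaultdict(int)
    pairs = defaultdict(int)
    for products in baskets.values():
        for p in products:
            owners[p] += 1
        for a, b in permutations(products, 2):
            pairs[(a, b)] += 1
    return {(a, b): n / owners[a] for (a, b), n in pairs.items()}

def top_recommendations(baskets, customer_id, k=3):
    """Up to k unowned products, ranked by best ratio from an owned product."""
    ratios = cross_purchase_ratios(baskets)
    owned = baskets[customer_id]
    best = defaultdict(float)
    for (a, b), ratio in ratios.items():
        if a in owned and b not in owned:
            best[b] = max(best[b], ratio)
    return sorted(best, key=lambda p: (-best[p], p))[:k]

baskets = {
    "c1": {"ink", "paper"},
    "c2": {"ink", "paper", "toner"},
    "c3": {"ink"},
    "c4": {"paper", "toner"},
}
print(top_recommendations(baskets, "c3"))  # prints: ['paper', 'toner']
```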

WiseGuys was introduced in 2003 and expanded steadily over the years. It runs on a Windows PC and uses the Microsoft Access database engine. A version based on SQL Server was added recently. The one-time license for the Access versions ranges from $1,990 to $3,990 depending on mail volume and fulfillment system (users of Dydacomp's Mail Order Manager, http://www.dydacomp.com, get a discount). The SQL Server version costs $7,990. The system has about 50 clients.

Wednesday, April 16, 2008

OpenBI Finds Success with Open Source BI Software

I had an interesting conversation last week with Steve Miller and Rich Romanik of OpenBI, a consultancy specializing in using open source products for business intelligence. It was particularly relevant because I’ve also been watching an IT Toolbox discussion of BI platform selection degenerate into a debate about whose software is cheaper. The connection, of course, is that nothing’s cheaper than open source, which is usually free (or, as the joke goes, very reasonable*).

Indeed, if software cost were the only issue, then open source should already have taken over the world. One reason it hasn’t is that early versions of open source projects are often not as powerful or easy to use as commercial software. But this evolves over time, with each project obviously on its own schedule. Open source databases like MySQL, Ingres and PostgreSQL are now at or near parity with the major commercial products, except for some specialized applications. According to Miller, open source business intelligence tools including Pentaho and JasperSoft are now highly competitive as well. In fact, he said that they are actually more integrated than commercial products, which use separate systems for data extraction, transformation and loading (ETL), reporting, dashboards, rules, and so on. The open source BI tools offer these as services within a single platform.

My own assumption has been that the primary resistance to open source is based on the costs of retraining. Staff who are already familiar with existing solutions are reluctant to devalue their experience by bringing in something new, and managers are reluctant to pay the costs in training and lost productivity. Miller said that half the IT people he sees today are consultants, not employees, whom a company would simply replace with other consultants if it switched tools. It’s a good point, but I’m guessing the remaining employees are the ones making most of the selection decisions. Retraining them, not to mention end-users, is still an issue.

In other words, there still must be a compelling reason before a company will switch from commercial to open source products or, indeed, from an incumbent to any other vendor. Since the majority of project costs come from labor, not software, the most likely advantage would be increased productivity. But Miller said productivity with open source BI tools is about the same as with commercial products: not any worse, now that the products have matured sufficiently, but also not significantly better. He said he has seen major productivity improvements recently, but these have come through “agile” development methods that change how people work, not the tools they use. (I don’t recall whether he used the term "agile".)

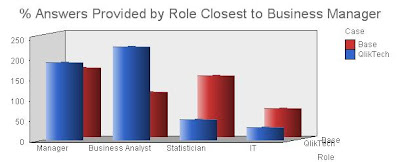

I did point out that certain products—QlikView being exhibit A—can offer meaningful productivity improvements over existing standard technologies. But I’m not sure he believed me.

Of course, agile methods can be applied with any tool, so the benefits from open source still come down to savings on software licenses. These can be substantial for a large company: a couple hundred dollars per seat becomes real money when thousands of users are involved. Still, even those costs can easily be outweighed by small changes in productivity: add a database administrator here and an extra cube designer there, and in no time you’ve spent more than you saved on software.

This circles right back to the quality issue. Miller argued that open source products improve faster than commercial systems, so eventually any productivity gaps will be eliminated or even reversed in open source’s favor. Since open source allows day-to-day users to work directly on the problems that are bothering them, it may in fact do better at making incremental productivity improvements than a top-down corporate development process. I'm less sure this applies to major as well as incremental innovations, but I suppose examples of radical open source innovation could be found.

Whatever. At the moment, it seems the case for open source BI is strong but not overwhelming. In other words, a certain leap of faith is still required. Miller said most of OpenBI’s business has come from companies where other types of open source systems have already been adopted successfully. These firms have gotten over their initial resistance and found that the quality is acceptable. The price is certainly right.

*******************************************************************************

* (Guy to girl: “Are you free on Saturday night?” Girl to guy: “No, but I’m reasonable”. Extra points to whoever lists the most ways that is politically incorrect.)

Tuesday, April 15, 2008

Making Some Changes

Maybe it's that spring has finally arrived, but, for whatever reason, I have several changes to announce.

- Most obviously, I've changed the look of this blog itself. Partly it's because I was tired of the old look, but mostly it's to allow me to take advantage of new capabilities now provided by Blogger. The most interesting is a new polling feature. You'll see the first poll at the right.

- I've also started a new blog "MPM Toolkit" at http://mpmtoolkit.blogspot.com/. In this case, MPM stands for Marketing Performance Measurement. This has been a topic of growing professional interest to me; in fact, I have a book due out on the topic this fall (knock wood). I felt the subject was different enough from what I've been writing about here to justify a blog of its own. Trying to keep the brand messages clear, as it were.

- I have resumed working under the Raab Associates Inc. umbrella, and am now a consultant rather than partner with Client X Client. This has more to do with accounting than anything else. It does, however, force me to revisit my portion of the Raab Associates Web site, which has not been updated since the (Bill) Clinton Administration. I'll probably set up a new separate site fairly soon.

Sorry to bore you with personal details. I'll make a more substantive post tomorrow.

Wednesday, April 09, 2008

Bah, Humbug: Let's Not Forget the True Meaning of On-Demand

I was skeptical the other day about the significance of on-demand business intelligence. I still am. But I’ve also been thinking about the related notion of on-demand predictive modeling. True on-demand modeling – which to me means the client sends a pile of data and gets back a scored customer or prospect list – faces the same obstacle as on-demand BI: the need for careful data preparation. Any modeler will tell you that fully automated systems make errors that would be obvious to a knowledgeable human. Call it the Sorcerer’s Apprentice effect.

Indeed, if you Google “on demand predictive model”, you will find just a handful of vendors, including CopperKey, Genalytics and Angoss. None of these provides the generic “data in, scores out” service I have in mind. There are, however, some intriguing similarities among them. Both CopperKey and Genalytics match the input data against national consumer and business databases. Both Angoss and CopperKey offer scoring plug-ins to Salesforce.com. Both Genalytics and Angoss will also build custom models using human experts.

I’ll infer from this that the state of the art simply does not support unsupervised development of generic predictive models. Either you need human supervision, or you need standardized inputs (e.g., Salesforce.com data), or you must supplement the data with known variables (e.g. third-party databases).

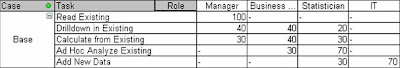

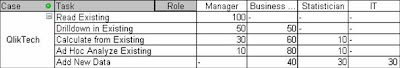

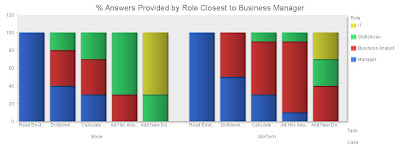

Still, I wonder if there is an opportunity. I was playing around recently with a very simple, very robust scoring method a statistician showed me more than twenty years ago. (Sum of Z-scores on binary variables, if you care.) This did a reasonably good job of predicting product ownership in my test data. More to the point, the combined modeling-and-scoring process needed just a couple dozen lines of code in QlikView. It might have been a bit harder in other systems, given how powerful QlikView is. But it’s really quite simple regardless.

The only requirements are that the input contains a single record for each customer and that all variables are coded as 1 or 0. Within those constraints, any kind of input is usable and any kind of outcome can be predicted. The output is a score that ranks the records by their likelihood of meeting the target condition.
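The post does not spell out the method, so the following is only one plausible reading of "sum of Z-scores on binary variables", sketched in Python rather than QlikView. For each binary variable it computes a z-statistic comparing the target rate among flagged records with the overall rate, then scores a record by summing the z-values of the variables it has set to 1. The function names and the exact z formula are assumptions.

```python
from math import sqrt

def fit_z_weights(rows, target):
    """One z-value per binary variable (an assumed formulation).

    rows: list of dicts whose values are all 0 or 1, including the target.
    """
    base = sum(r[target] for r in rows) / len(rows)
    weights = {}
    for var in rows[0]:
        if var == target:
            continue
        flagged = [r for r in rows if r[var] == 1]
        m = len(flagged)
        # Standard error of the target rate in a sample of size m.
        se = sqrt(base * (1 - base) / m) if m else 0.0
        if se == 0:
            weights[var] = 0.0  # no information: empty group or constant target
            continue
        rate = sum(r[target] for r in flagged) / m
        weights[var] = (rate - base) / se
    return weights

def score(row, weights):
    """Sum the z-values of every variable the record has set to 1."""
    return sum(w for var, w in weights.items() if row.get(var) == 1)

rows = [
    {"owns_a": 1, "owns_b": 0, "target": 1},
    {"owns_a": 1, "owns_b": 0, "target": 1},
    {"owns_a": 1, "owns_b": 1, "target": 0},
    {"owns_a": 0, "owns_b": 1, "target": 0},
    {"owns_a": 0, "owns_b": 1, "target": 0},
    {"owns_a": 0, "owns_b": 0, "target": 1},
]
weights = fit_z_weights(rows, "target")
ranked = sorted(rows, key=lambda r: score(r, weights), reverse=True)
print(weights)
```

Because both fitting and scoring are single passes over 0/1 columns, nothing here needs tuning or human review, which is the property the on-demand argument depends on.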

Now, I’m fully aware that better models can be produced with more data preparation and human experts. But there must be many situations where an approximate ranking would be useful if it could be produced in minutes with no prior investment for a couple hundred dollars. That's exactly what this approach makes possible: since the process is fully automated, the incremental cost is basically zero. Pricing would only need to cover overhead, marketing and customer support.

The closest analogy I can think of among existing products is on-demand customer data integration sites. These also take customer lists submitted over the Internet and automatically return enhanced versions – in their case, IDs that link duplicates, postal coding, and sometimes third-party demographics and other information. Come to think of it, similar services perform on-line credit checks. Those have proven to be viable businesses, so the fundamental idea is not crazy.

Whether on-demand generic scoring is also viable, I can’t really say. It’s not a business I am likely to pursue. But I think it illustrates that on-demand systems can provide value by letting customers do things with no prior setup. Many software-as-a-service vendors stress other advantages: lower cost of ownership, lack of capital investment, easy remote access, openness to external integration, and so on. These are all important in particular situations. But I’d also like to see vendors explore the niches where “no prior setup and no setup cost” offers the greatest value of all.

Indeed, if you Google “on demand predictive model”, you will find just a handful of vendors, including CopperKey, Genalytics and Angoss. None of these provides the generic “data in, scores out” service I have in mind. There are, however, some intriguing similarities among them. Both CopperKey and Genalytics match the input data against national consumer and business databases. Both Angoss and CopperKey offer scoring plug-ins to Salesforce.com. Both Genalytics and Angoss will also build custom models using human experts.

I’ll infer from this that the state of the art simply does not support unsupervised development of generic predictive models. Either you need human supervision, or you need standardized inputs (e.g., Salesforce.com data), or you must supplement the data with known variables (e.g. third-party databases).

Still, I wonder if there is an opportunity. I was playing around recently with a very simple, very robust scoring method a statistician showed me more than twenty years ago. (Sum of Z-scores on binary variables, if you care.) This did a reasonably good job of predicting product ownership in my test data. More to the point, the combined modeling-and-scoring process needed just a couple dozen lines of code in QlikView. It might have been a bit harder in other systems, given how powerful QlikView is. But it’s really quite simple regardless.

The only requirements are that the input contains a single record for each customer and that all variables are coded as 1 or 0. Within those constraints, any kind of inputs are usable and any kind of outcome can be predicted. The output is a score that ranks the records by their likelihood of meeting the target condition.

Now, I’m fully aware that better models can be produced with more data preparation and human experts. But there must be many situations where an approximate ranking would be useful if it could be produced in minutes with no prior investment for a couple hundred dollars. That's exactly what this approach makes possible: since the process is fully automated, the incremental cost is basically zero. Pricing would only need to cover overhead, marketing and customer support.

The closest analogy I can think of among existing products is on-demand customer data integration sites. These also take customer lists submitted over the Internet and automatically return enhanced versions – in their case, IDs that link duplicates, postal coding, and sometimes third-party demographics and other information. Come to think of it, similar services perform on-line credit checks. Those have proven to be viable businesses, so the fundamental idea is not crazy.

Whether on-demand generic scoring is also viable, I can’t really say. It’s not a business I am likely to pursue. But I think it illustrates that on-demand systems can provide value by letting customers do things with no prior setup. Many software-as-a-service vendors stress other advantages: lower cost of ownership, lack of capital investment, easy remote access, openness to external integration, and so on. These are all important in particular situations. But I’d also like to see vendors explore the niches where “no prior setup and no setup cost” offers the greatest value of all.

Wednesday, April 02, 2008

illuminate Systems' iLuminate May Be the Most Flexible Analytical Database Ever

OK, I freely admit I’m fascinated by offbeat database engines. Maybe there is a support group for this. In any event, the highlight of my brief visit to the DAMA International Symposium and Wilshire Meta-Data Conference last month was a presentation by Joe Foley of illuminate Solutions, which marked the U.S. launch of his company’s iLuminate analytical database.

(Yes, the company name is “illuminate” and the product is “iLuminate”. And if you look inside the HTML tag, you’ll see the Internet domain is “i-lluminate.com”. Marketing genius or marketing madness? You decide.)

illuminate calls iLuminate a “correlation database”, a term they apparently invented to distinguish it from everything else. It does appear to be unique, although somewhat similar to other products I’ve seen over the years: Digital Archaeology (now deceased), Omnidex and even QlikView come to mind. Like iLuminate, these systems store links among data values rather than conventional database tables or columnar structures. iLuminate is the purest expression I’ve seen of this approach: it literally stores each value once, regardless of how many tables, columns or rows contain it in the source data. Indexes and algorithms capture the context of each original occurrence so the system can recreate the original records when necessary.

The company is rather vague on the details, perhaps on purpose. They do state that each value is tied to a conventional b-tree index that makes it easily and quickly accessible. What I imagine—and let me stress I may have this wrong—is that each value is then itself tied to a hierarchical list of the tables, columns and rows in which it appears. There would be a count associated with each level, so a query that asked how many times a value appears in each table would simply look at the pre-calculated value counts; a query of how many times the value appeared in a particular column could look down one level to get the column counts. A query that needed to know about individual rows would retrieve the row numbers. A query that involved multiple values would retrieve multiple sets of row numbers and compare them: so, say, a query looking for state = “NY” and eye color = “blue” might find that “NY” appears in the state column for records 3, 21 and 42, while “blue” appears in the eye color for records 5, 21 and 56. It would then return row=21 as the only intersection of the two sets. Another set of indexes would make it simple to retrieve the other components of row 21.
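
To make that guess concrete, here is a small Python sketch of the structure I am imagining. It is only an illustration of the speculation above, not illuminate's actual design: the class `ValueIndex`, its methods and the `intersect` helper are hypothetical names, a plain dictionary stands in for the b-tree, and counts are derived from the stored row sets rather than pre-calculated.

```python
from collections import defaultdict

class ValueIndex:
    """Each distinct value is stored once, with the places it occurs."""

    def __init__(self):
        # value -> table -> column -> set of row numbers
        self._index = defaultdict(lambda: defaultdict(lambda: defaultdict(set)))

    def add(self, table, column, row, value):
        self._index[value][table][column].add(row)

    def rows(self, value, table, column):
        """Row numbers where the value appears in one column."""
        return self._index.get(value, {}).get(table, {}).get(column, set())

    def column_count(self, value, table, column):
        return len(self.rows(value, table, column))

    def table_count(self, value, table):
        """Occurrences of the value anywhere in the table."""
        columns = self._index.get(value, {}).get(table, {})
        return sum(len(row_set) for row_set in columns.values())

def intersect(index, table, conditions):
    """Rows that satisfy every column = value condition."""
    row_sets = [index.rows(value, table, column)
                for column, value in conditions.items()]
    return set.intersection(*row_sets) if row_sets else set()

# The example from the text: "NY" in rows 3, 21, 42 and "blue" in rows 5, 21, 56.
index = ValueIndex()
for row in (3, 21, 42):
    index.add("people", "state", row, "NY")
for row in (5, 21, 56):
    index.add("people", "eye_color", row, "blue")

matches = intersect(index, "people", {"state": "NY", "eye_color": "blue"})
```

Here `matches` contains only row 21, and `index.column_count("NY", "people", "state")` answers the column-level count question without touching any rows.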

Whether or not that’s what actually happens under the hood, this does illustrate the main advantages of iLuminate. Specifically, it can import data in any structure and access it without formal database design; it can identify instances of the same value across different tables or columns; it can provide instant table and column level value distributions; and it can execute incremental queries against a previously selected set of records. The company also claims high speed and terabyte scalability, although some qualification is needed: initial results from a query appear very quickly, but calculations involving a large result set must wait for the system to assemble and process the full set of data. Foley also said that although the system has been tested with a terabyte of data, the largest production implementation is a much less impressive 50 gigabytes. Still, the largest production row count is 200 million rows – nothing to sneeze at.

The system avoids some of the pitfalls that sometimes trap analytical databases: load times are similar to those of comparable relational databases (once you include time for indexing, etc.); total storage (including the indexes, which take up most of the space) is about the same as the relational source data; and users can write queries in standard SQL via ODBC. This final point is particularly important, because many past analytical systems were not SQL-compatible, which deterred many potential buyers. The new crop of analytical database vendors has learned this lesson: nearly all of the new analytical systems are SQL-accessible. Just to be clear, iLuminate is not an in-memory database, although it will make intelligent use of whatever memory is available, often loading the data values and b-tree indexes into memory when possible.

Still, at least from my perspective, the most important feature of iLuminate is its ability to work with any structure of input data—including structures that SQL would handle poorly or not at all. This is where users gain huge time savings, because they need not predict the queries they will write and then design a data structure to support them. In this regard, the system is even more flexible than QlikView, which it in many ways resembles: while QlikView links tables with fixed keys during the data load, iLuminate does not. Instead, like a regular SQL system, iLuminate can apply different relationships to one set of data by defining the relationships within different queries. (On the other hand, QlikView’s powerful scripting language allows much more data manipulation during the load process.)
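
The difference between load-time keys and query-time relationships is easy to show in ordinary SQL. The sketch below uses Python's built-in `sqlite3` module purely as a stand-in for "a regular SQL system". The tables, columns and the `stores_by` helper are invented for illustration and have nothing to do with iLuminate's own interfaces.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (name TEXT, home_state TEXT, work_state TEXT);
    CREATE TABLE stores (store TEXT, state TEXT);
    INSERT INTO customers VALUES ('Ann', 'NY', 'NJ'), ('Bob', 'CT', 'NY');
    INSERT INTO stores VALUES ('Albany', 'NY'), ('Newark', 'NJ');
""")

def stores_by(link_column):
    """Join the same two tables, choosing the relationship at query time."""
    if link_column not in ("home_state", "work_state"):
        raise ValueError("unknown link column")
    sql = f"""
        SELECT c.name, s.store
        FROM customers c
        JOIN stores s ON s.state = c.{link_column}
        ORDER BY c.name
    """
    return conn.execute(sql).fetchall()

by_home = stores_by("home_state")   # link customers to stores by where they live
by_work = stores_by("work_state")   # same data, linked by where they work
```

Nothing was reloaded between the two queries. A tool that fixes its keys during the load would need a second load, or a second copy of the table, to switch from one relationship to the other.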

Part of the reason I mention QlikView is that iLuminate itself uses QlikView as a front-end tool under the label of iAnalyze. This extracts data from iLuminate using ODBC and then loads it into QlikView. Naturally, the data structure at that point must include fixed relationships. In addition to QlikView, iAnalyze also includes integrated mapping. A separate illuminate product, called iCorrelated, allows ad hoc, incremental queries directly against iLuminate and takes full advantage of its special capabilities.

illuminate, which is based in Spain, has been in business for nearly three years. It has more than 40 iLuminate installations, mostly in Europe. Pricing is based on several factors but the entry cost is very reasonable: somewhere in the $80,000 to $100,000 range, including iAnalyze. As part of its U.S. launch, the company is offering no-cost proof of concept projects to qualified customers.

Thursday, March 27, 2008

The Limits of On-Demand Business Intelligence

I had an email yesterday from Blink Logic, which offers on-demand business intelligence. That could mean quite a few things but the most likely definition is indeed what Blink Logic provides: remote access to business intelligence software loaded with your own data. I looked a bit further and it appears Blink Logic does this with conventional technologies, primarily Microsoft SQL Server Analysis Services and Cognos Series 7.

At that point I pretty much lost interest because (a) there’s no exotic technology, (b) quite a few vendors offer similar services*, and (c) the real issue with business intelligence is the work required to prepare the data for analysis, which doesn’t change just because the system is hosted.

Now, this might be unfair to Blink Logic, which could have some technology of its own for data loading or the user interface. It does claim that at least one collaboration feature, direct annotation of data in reports, is unique. But the major point remains: Blink Logic and other “on-demand business intelligence” vendors are simply offering a hosted version of standard business intelligence systems. Does anyone truly think the location of the data center is the chief reason that business intelligence has so few users?

As I see it, the real obstacle is that most source data must be integrated and restructured before business intelligence systems can use it. It may be literally true that hosted business intelligence systems can be deployed in days and users can build dashboards in minutes, but this only applies given the heroic assumption that the proper data is already available. Under those conditions, on-premise systems can be deployed and used just as quickly. Hosting per se has little benefit when it comes to speed of deployment. (Well, maybe some: it can take days or even a week or two to set up a new server in some corporate data centers. Still, that is a tiny fraction of the typical project schedule.)

If hosting isn't the answer, what can make true “business intelligence on demand” a reality? Since the major obstacle is data preparation, anything that allows less preparation will help. This brings us back to the analytical databases and appliances I’ve been writing about recently: Alterian, Vertica, ParAccel, QlikView, Netezza and so on. At least some of them do reduce the need for preparation because they let users query raw data without restructuring or aggregating it. This isn’t because they avoid SQL queries, but because they offer a great enough performance boost over conventional databases that neither aggregation nor denormalization is needed to return results quickly.

Of course, performance alone can’t solve all data preparation problems. The really knotty challenges like customer data integration and data quality still remain. Perhaps some of those will be addressed by making data accessible as a service (see last week’s post). But services themselves do not appear automatically, so a business intelligence application that requires a new service will still need advance planning. Where services will help is when business intelligence users can take advantage of services created for operational purposes.

“On demand business intelligence” also requires that end-users be able to do more for themselves. I actually feel this is one area where conventional technology is largely adequate: although systems could always be easier, end-users willing to invest a bit of time can already create useful dashboards, reports and analyses without deep technical skills. There are still substantial limits to what can be done – this is where QlikView’s scripting and macro capabilities really add value by giving still more power to non-technical users (or, more precisely, to power users outside the IT department). Still, I’d say that when the necessary data is available, existing business intelligence tools let users accomplish most of what they want.

If there is an issue in this area, it’s that SQL-based analytical databases don’t usually include an end-user access tool. (Non-SQL systems do provide such tools, since users have no alternatives.) This is a reasonable business decision on their part, both because many firms have already selected a standard access tool and because the vendors don’t want to invest in a peripheral technology. But not having an integrated access tool means clients must take time to connect the database to another product, which does slow things down. Apparently I'm not the only person to notice this: some of the analytical vendors are now developing partnerships with access tool vendors. If they can automate the relationship so that data sources become visible in the access tool as soon as they are added to the analytical system, this will move “on demand business intelligence” one step closer to reality.

* results of a quick Google search: OnDemandIQ, LucidEra, PivotLink (an in-memory columnar database), oco, VisualSmart, GoodData and Autometrics.

Thursday, March 20, 2008

Service Oriented Architectures Might Really Change Everything

I put in a brief but productive appearance at the DAMA International Symposium and Wilshire Meta-Data Conference running this week in San Diego. This is THE event for people who care passionately about topics like “A Semantic-Driven Application for Master Data Management” and “Dimensional-Modeling – Alternative Designs for Slowly Changing Dimensions”. As you might imagine, there aren't that many of them, and it’s always clear that the attendees revel in spending time with others in their field. I’m sure there are some hilarious data modeling jokes making the rounds at the show, but I wasn’t able to stick around long enough to hear any.

One of the few sessions I did catch was a keynote by Gartner Vice President Michael Blechar. His specific topic was the impact of a services-driven architecture on data management, with the general point being that services depend on data being easily available for many different purposes, rather than tied to individual applications as in the past. This means that the data feeding into those services must be redesigned to fit this broader set of uses.

In any case, what struck me was Blechar’s statement that the fundamental way I’ve always thought about systems is now obsolete. I've always thought that systems do three basic things: accept inputs, process them, and create outputs. This doesn't apply in the new world, where services are strung together to handle specific processes. The services themselves handle the data gathering, processing and outputs, so these occur repeatedly as the process moves from one service to another. (Of course, a system can still have its own processing logic that exists outside a service.) But what’s really new is that a single service may be used in several different processes. This means that services are not simply components within a particular process or system: they have an independent existence of their own.

Exactly how you create and manage these process-independent services is a bit of a mystery to me. After all, you still have to know they will meet the requirements of whatever processes will use them. Presumably this means those requirements must be developed the old fashioned way: by defining the process flow in detail. Any subtle differences in what separate processes need from the same service must be accommodated either by making the service more flexible (for example, adding some parameters that specify how it will function in a particular case) or by adding specialized processing outside of the service. I'll assume that the people who worry about these things for a living must have recognized this long ago and worked out their answers.

What matters to me is what an end-user can do once these services exist. Blechar argued that users now view their desktop as a “composition platform” that combines many different services and uses the results to orchestrate business processes. He saw executive dashboards in particular as evolving from business intelligence systems (based on a data warehouse or data mart) to business activity monitoring based on the production systems themselves. This closer connection to actual activity would in turn allow the systems to be more “context aware”—for example, issuing alerts and taking actions based on current workloads and performance levels.

Come to think of it, my last post discussed eglue and others doing exactly this to manage customer interactions. What a more comprehensive set of services should do is make it easier to set up this type of context-aware decision making.

Somewhat along these same lines, Computerworld this morning describes another Gartner paper arguing that IT’s role in business intelligence will be “marginalized” by end-users creating their own business intelligence systems using tools like enterprise search, visualization and in-memory analytics (hello, QlikView!). The four reader comments posted so far have been not-so-politely skeptical of this notion, basically because they feel IT will still do all the heavy lifting of building the databases that provide information for these user-built systems. This is correct as far as it goes, but it misses the point that IT will be exposing this data as services for operational reasons anyway. In that case, no additional IT work is needed to make it available for business intelligence. Once end-users have self-service tools to access and analyze the data provided by these operational services, business intelligence systems would emerge without IT involvement. I'd say that counts as "marginalized".

Just to bring these thoughts full circle: this means that designing business intelligence systems with the old “define inputs, define processes, define outputs” model would indeed be obsolete. The inputs would already be available as services, while the processes and outputs would be created simultaneously in end-user tools. I’m not quite sure I really believe this is going to happen, but it’s definitely food for thought.

Tuesday, March 11, 2008

eglue Links Data to Improve Customer Interactions

Let me tell you a story.

For years, United Parcel Service refused to invest in the tracking systems and other technologies that made Federal Express a preferred carrier for many small package shippers. It wasn’t that the people at UPS were stupid: to the contrary, they had built such incredibly efficient manual systems that they could never see how automated systems would generate enough added value to cover their cost. Then, finally, some studies came out the other way. Overnight, UPS expanded its IT department from 300 people to 3,000 people (these may not be the exact numbers). Today, UPS technology is every bit as good as its rivals’ and the company is more dominant in its industry than ever.

The point of this story—well, one point anyway—is that innovations which look sensible to outsiders often don’t get adopted because they don’t add enough value to an already well-run organization. (I could take this a step further to suggest that once the added value does exceed costs, a “tipping point” is reached and adoption rates will soar. Unfortunately, I haven’t seen this in reality. Nice theory, though.)

This brings us to eglue, which offers “real time interaction management” (my term, not theirs): that is, it helps call center agents, Web sites and other customer-facing systems to offer the right treatment at each point during an interaction. The concept has been around for years and has consistently demonstrated substantial value. But adoption rates have been perplexingly low.

We’ll get back to adoption rates later, although I should probably note right here that eglue has doubled its business for each of the past three years. First, let’s take a closer look at the product itself.

To repeat, the basic function of eglue is making recommendations during real time interactions. Specifically, the system adds this capability to existing applications with a minimum of integration effort. Indeed, being “minimally invasive” (their term) is a major selling point, and does address one of the significant barriers to adopting interaction management systems. Eglue can use standard database queries or Web services calls to capture interaction data. But its special approach is what it calls “GUI monitoring”—reading data from the user interface without any deeper connection to the underlying systems. Back in the day, we used to call this “screen scraping”, although I assume eglue’s approach is much more sophisticated.

As eglue captures information about an on-going interaction, it applies rules and scoring models to decide what to recommend. These rules are set up by business users, taking advantage of data connections prepared in advance by technical staff. This is as it should be: business users should not need IT assistance to make day-to-day rule changes.

On the other hand, a sophisticated business environment involves lots of possible business rules, and business users only have so much time or capacity to understand all the interconnections. Eglue is a bit limited here: unlike some other interaction management systems, it does not automatically generate recommended rules or update its scoring models as data is received.

This may or may not be a weakness. How much automation makes sense is a topic of heated debate among people who care about such things. User-generated rules are more reliable than unsupervised automation, but they also take more effort and can’t react immediately to changes in customer behavior. I personally feel eglue’s heavy reliance on rules is a disadvantage, though a minor one. eglue does provide a number of prebuilt applications for specific tasks, so clients need not build their own rules from scratch.

What impressed me more about eglue was that its rules can take into account not only the customer’s own behavior, both during and before the interaction, but also the local context (e.g., the current workload and wait times at the call center) and the individual agent on the other end of the phone. Thus, agents with a history of doing well at selling a particular product are provided more recommendations for that product. Or the system could restrict recommendations for complex products to experienced agents who are capable of handling them. eglue rules can also alert supervisors about relationships between agents and interactions. For example, it might tell a supervisor when a high value customer is talking to an inexperienced agent, so she can listen and intervene if necessary.
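To make the idea concrete, here is a minimal sketch of a rule that looks at the customer, the agent and the local context together. It is not eglue's actual rule language, which I haven't seen at that level of detail; the `Interaction` fields, the `recommend` function, the two-year experience cutoff and the choice to suppress offers when wait times are long are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    """Hypothetical snapshot of one live call (all fields are assumptions)."""
    customer_value: str            # "high" or "standard"
    agent_experience_years: float
    agent_sales_by_product: dict   # product name -> agent's past conversion rate
    queue_wait_seconds: int        # current wait time at the call center


def recommend(interaction, products, complex_products,
              max_wait=300, min_years=2.0):
    """Return (ordered offers, supervisor alerts) for one interaction."""
    if interaction.queue_wait_seconds > max_wait:
        # Local context: assume no offers are made while the queue is backed up.
        offers = []
    else:
        # Agent context: complex products go only to experienced agents.
        offers = [
            p for p in products
            if p not in complex_products
            or interaction.agent_experience_years >= min_years
        ]
        # Favor the products this particular agent has sold well before.
        offers.sort(
            key=lambda p: interaction.agent_sales_by_product.get(p, 0.0),
            reverse=True,
        )

    alerts = []
    if (interaction.customer_value == "high"
            and interaction.agent_experience_years < min_years):
        # Supervisor alert: high value customer paired with a new agent.
        alerts.append("high-value customer with inexperienced agent")
    return offers, alerts
```

In a real deployment the thresholds would be set by business users rather than hard-coded, which is the whole point of keeping rule maintenance out of IT's hands.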

The interface for presenting the recommendations is also quite appealing. Recommendations appear as pop-ups on the user’s screen, which makes them stand out nicely. More important, they provide a useful range of information: the recommendation itself, selling points (which can be tailored to the customer and agent), a mechanism to capture feedback (was the recommended offer presented to the customer? Did she accept or reject it?), and links to additional information such as product features. There is an option to copy information into another application—for example, saving the effort to type a customer’s name or account information into an order processing system. As anyone who has had to repeat their phone number three times during the course of a simple transaction can attest—and that would be all of us—that feature alone is worth the price of admission. The pop-up can also show the business rule that triggered a recommendation and the data that rule is using.

As each interaction progresses, eglue automatically captures information about the offers it has recommended and customer response. This information can be used in reports, applied to model development, and added to customer profiles to guide future recommendations. It can also be fed back into other corporate systems.
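As a rough illustration of how that captured feedback might feed a report, the sketch below tallies acceptance rates per offer. The record layout (offer name, whether it was presented, whether it was accepted) and the `acceptance_rates` function are my own assumptions, not eglue's data model.

```python
from collections import defaultdict


def acceptance_rates(feedback):
    """Compute accepted/presented per offer.

    feedback: iterable of (offer, was_presented, was_accepted) tuples,
    a hypothetical format for the feedback captured from the pop-up.
    """
    presented = defaultdict(int)
    accepted = defaultdict(int)
    for offer, was_presented, was_accepted in feedback:
        if was_presented:
            presented[offer] += 1
            if was_accepted:
                accepted[offer] += 1
    # Offers that were recommended but never presented are left out.
    return {offer: accepted[offer] / n for offer, n in presented.items()}
```

Rates like these could then be stored on the customer or agent profile to guide which offers get recommended next time.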

The price for all this is not insignificant. Eglue costs about $1,000 to $1,200 per seat, depending on the details. However, this is probably in line with competitors like Chordiant, Pegasystems, and Portrait Software. eglue targets call centers with 250 to 300 seats; indeed, its 30+ clients are all Fortune 1000 firms. Its largest installation has 20,000 seats. The company’s “GUI monitoring” approach to integration and prebuilt applications allow it to complete an implementation in a relatively speedy 8 to 12 weeks.

This brings us back, in admittedly roundabout fashion, to the original question: why don’t more companies use this technology? The benefits are well documented—one eglue case study showed a 27% increase in revenue per call at Key Bank, and every other vendor has similar stories. The cost is reasonable and implementation gets easier all the time. But although eglue and its competitors have survived and grown on a small scale, this class of software is still far from ubiquitous.

My usual theory is lack of interest by call center managers: they don’t see revenue generation as their first priority, even though they may be expected to do some of it. But eglue and its competitors can be used for training, compliance and other applications that are closer to a call center manager’s heart. There is always the issue of data integration, but that keeps getting easier as newer technologies are deployed, and it doesn’t take much data to generate effective recommendations. Another theory, echoing the UPS story, is that existing call center applications have enough built-in capabilities to make investment in a specialized recommendation system uneconomic. I’m guessing that answer may be the right one.

But I’ll leave the last word to Hovav Lapidot, eglue’s Vice President of Product Marketing and a six-year company veteran. His explanation was that the move to overseas outsourced call centers over the past decade reflected a narrow focus on cost reduction. Neither the corporate managers doing the outsourcing nor the vendors competing on price were willing to pay more for the revenue-generation capabilities of interaction management systems. But Lapidot says this has been changing: some companies are bringing work back from overseas in the face of customer unhappiness, and managers are showing more interest in the potential value of inbound interactions.

The ultimate impact of interaction management software is to create a better experience for customers like you and me. So let’s all hope he’s right.
