Tuesday, February 24, 2009

First Look at New Marketo Release

The changes that Fernandez described seemed good but subtle. Major themes were greater access to detailed information, more precise targeting, and tighter integration with Salesforce.com. The company also reworked the user interface--of more than 200 total changes in this upgrade, about 75 were tied to usability--although it still takes the same basic approach of building campaigns as lists of steps, rather than branching flow charts. In Fernandez’ view, this reflects a fundamental philosophical difference from his competitors: Marketo sees marketing as reacting to prospect-initiated behaviors, not executing company-driven interaction paths. Although I actually think that quite a few demand generation vendors share the Marketo philosophy, it’s still helpful to hear the distinction made clearly.

What's ultimately more important than the uniqueness of Marketo's philosophy is how they have built it into their software. In Marketo, each campaign is a relatively small, self-contained sequence of steps that is triggered by a particular prospect need. Fernandez used the analogy of a cocktail party: you might have a few stories you expect to tell, but don’t know exactly when you’ll tell them or in what order. The simplicity of these individual campaigns is what lets Marketo use a simple interface to build them. There is certainly more to it than that—the company has a fanatical devotion to usability—but I’d argue that being structured around simple campaigns is the key to Marketo’s well-deserved reputation as a system that’s easy to use.

The challenge with all these simple campaigns is the same as the challenge of telling stories at a cocktail party: you have to be sure to tell the right story to the right person at the right time. Marketo’s approach is to trigger campaigns based on specified events or list criteria. But since this by itself won’t coordinate the separate campaigns, Marketo also lets users set up other campaigns (which sound suspiciously like flow charts, although they are not displayed that way) whose rules send prospects to one campaign or another. This is a perfectly straightforward approach, although I suspect that marketers may find it hard to keep track of the selection rules as they get increasingly complicated.
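To make that structure concrete, here is a minimal Python sketch of event-triggered campaigns coordinated by a separate routing campaign. The class names, event names, and the score threshold of 50 are all hypothetical. This illustrates the general idea described above, not Marketo's actual data model.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

@dataclass
class Campaign:
    """A small, self-contained campaign: a trigger plus a short list of steps."""
    name: str
    trigger: str                      # event that starts this campaign
    steps: List[str]
    enrolled: List[str] = field(default_factory=list)

def on_event(event: str, prospect: dict, campaigns: List[Campaign]) -> List[str]:
    """Enroll the prospect in every campaign triggered by this event."""
    started = []
    for campaign in campaigns:
        if campaign.trigger == event:
            campaign.enrolled.append(prospect["id"])
            started.append(campaign.name)
    return started

@dataclass
class RouterCampaign:
    """Coordinating campaign: ordered rules that send a prospect to one campaign."""
    rules: List[Tuple[Callable[[dict], bool], Campaign]]

    def route(self, prospect: dict) -> Optional[str]:
        for condition, campaign in self.rules:
            if condition(prospect):           # first matching rule wins
                campaign.enrolled.append(prospect["id"])
                return campaign.name
        return None

# Hypothetical campaigns and rules for illustration.
webinar = Campaign("webinar-followup", "webinar_attended", ["thank-you email", "recording link"])
pricing = Campaign("pricing-interest", "pricing_page_visit", ["case study email", "call request"])
nurture = Campaign("long-nurture", "routed_only", ["monthly newsletter"])
router = RouterCampaign([
    (lambda p: p["score"] >= 50, pricing),
    (lambda p: True, nurture),            # catch-all must come last
])

started = on_event("webinar_attended", {"id": "p1", "score": 10}, [webinar, pricing, nurture])
hot = router.route({"id": "p2", "score": 70})     # "pricing-interest"
cold = router.route({"id": "p3", "score": 5})     # "long-nurture"
```

The router's rules are evaluated in order, so adding a rule means checking where it sits relative to every existing one. That ordering dependency is one reason selection rules get harder to track as they multiply.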

Here is where it’s worth considering the approaches of other vendors. Products including Silverpop Engage B2B (formerly Vtrenz), Market2Lead and Marketbright also let marketers set up small, sequential campaigns and embed them in a selection framework. Each vendor takes a different approach to building this framework, and, like Marketo, they can all be difficult to grasp once you pass a certain threshold of complexity.

Still, I think it’s fair to say that the general approach of simple campaigns linked in a decision matrix is a more effective way to implement non-linear, prospect-driven interactions than conventional flow charts. My own experience over the years has been that flow charts quickly become too complicated for most marketers to deal with.

Hmm, I seem to have fallen back into a discussion of usability without planning to. You can just imagine how much fun I am at a cocktail party. Fernandez and I did in fact discuss other things, including Marketo’s phenomenal growth (he hopes to sign his 150th customer any day now), its success in selling to non-technology companies (healthcare, manufacturing and business services have been strong), and the growing importance of demand generation systems as buyers take control of the purchasing process.

That last point morphed into a discussion of the increased integration between marketing and sales. Today's prospects repeatedly bounce between the two during hyper-extended sales cycles that begin earlier and are far less linear. That resonated with me because I’ve noticed several other demand generation vendors offering features that cross into traditional sales department territories, such as prospect portals and reseller management.

In Marketo’s case, though, it’s less a matter of adding sales-type functions than tightening its integration with Salesforce.com. Changes to support this include faster response times, more extensive data sharing, and more precise control over synchronization rules. Still, the general point is the same: marketing and sales must cooperate more closely than ever. It's always interesting to see how a thoughtful company like Marketo looks at a trend like this and decides how to react.

Monday, February 23, 2009

Three Options for Measuring Software Ease of Use

Before publishing any usability rankings, I wanted to share a general look at the options I have available for building them. This should help clarify why I’ve chosen the path I’m following.

First, let’s set some criteria. A suitable scoring method has to be economically feasible, reasonably objective, easily explained, and not subject to vendor manipulation. Economics is probably the most critical stumbling block: although I’d be delighted to run each product through formal testing in a usability lab or do a massive industry-wide survey, these would be impossibly expensive. Being objective and explicable are less restrictive goals; mostly, they rule out me just arbitrarily assigning the scores without explanation. But I wouldn’t want to do that anyway. Avoiding vendor manipulation mostly applies to surveys: if I just took an open poll on a Website, there is a danger of vendors trying to “stuff the ballot box” in different ways. So I won’t go there.

As best I can figure, those constraints leave me with three primary options:

1. Controlled user surveys. I could ask the vendors to let me survey their client base or selected segments within that base. But it would be really hard to ensure that the vendors were not somehow influencing the results. Even if that weren’t a concern, it would still be difficult to design a reliable survey that puts the different vendors on the same footing. Just asking users to rate the systems for “ease of use” surely would not work, because people with different skill levels would give inconsistent answers. Asking how long it took to learn the system or build their first campaigns, or how long it takes to build an average campaign or to perform a specific task would face similar problems plus the additional unreliability of informal time estimates. In short, I just can’t see a way to build and execute a reliable survey to address the issue.

2. Time all vendors against a standard scenario. This would require defining all the components that go into a simple campaign, and then asking each vendor to build the campaign while I watch. Mostly I’d be timing how long it took to complete the process, although I suppose I’d also be taking notes about what looks hard or easy. You might object that the vendors would provide expert users for their systems, but that’s okay because clients also become expert users over time. There are some other issues having to do with set-up time vs. completion time—that is, how much should vendors be allowed to set up in advance? But I think those issues could be addressed. My main concern is whether the vendors would be willing to invest the hour or two it would take to complete a test like this (not to mention whether I can find the dozen or two hours needed to watch them all and prepare the results). I actually do like this approach, so if the vendors reading this tell me that they’re willing, I’ll probably give it a go.

3. Build a checklist of ease-of-use functions. This involves defining specific features that make a system easy to use for simple programs, and then determining which vendors provide those features. The challenge here is selecting the features, since people will disagree about what is hard or easy. But I’m actually pretty comfortable with the list I’ve developed, because there do seem to be some pretty clear trade-offs between making it easy to do simple things and making it easy to do complicated things. The advantage of this method is that once you’ve settled on the checklist, the actual vendor ratings are quite objective and easily explained. Plus it’s no small bonus that I’ve gathered most of the information already as part of my other vendor research. This means I can deliver the rankings fairly quickly and with minimal additional effort by the vendors or myself.

So those are my options. I'm not trying to convince you that approach number 3 is the “right” choice, but simply to show that I’ve considered several possibilities and number 3 seems to be the most practical solution available. Let me stress right here that I intend to produce two ease of use measures, one for simple programs and another for complex programs. This is very important because of the trade-offs I just mentioned. Having two measures will force marketers to ask themselves which one applies to them, and therefore to recognize that there is no single right answer for everyone. I wish I could say this point is so obvious that I needn't make it, but it's ignored more often than anyone would care to admit.

Of course, even two measures can’t capture the actual match between different vendors’ capabilities and each company’s particular requirements. There is truly no substitute for identifying your own needs and assessing the vendors directly against them. All I can hope to do with the generic ratings is to help buyers select the few products that are most likely to fit their needs. Narrowing the field early in the process will give marketers more time to look at the remaining contenders in more depth.

Monday, February 16, 2009

How to Compare Demand Generation Vendors: Choosing Summary Measures

This raises the issue of exactly what should be in the summary listings. It seems that what people really want is an easy way to identify the best candidates for their particular situation. (Duh.) This suggests the summary should contain two components: a self-evaluation where people describe their situation, and a scoring mechanism to compare their needs with vendor strengths and weaknesses.

Categories for the self-evaluation seem pretty obvious: they would be the standard demand generation functions (outbound email, landing pages and forms, nurturing campaigns, lead scoring, and Salesforce.com integration), maybe a menu for less standard functions (e.g. events like Webinars, paid and organic search, online chat, direct mail, telemarketing, partner management, etc.), price range, and willingness to consider less established vendors. Once you’ve settled on these, you have the vendor ratings categories too, since they have to align with each other. That lets you easily find the vendors with the highest scores in your highest priority areas. Simple enough.

Except...some marketers only need simple versions of these functions while other marketers need sophisticated versions. But every system in the Guide can meet the simple needs, so there’s no point in setting up separate scores for those: everyone would be close to a perfect 10. On the other hand, the vendors do differ in how easily they perform those basic tasks, and it’s important to capture that in the scoring.

The literal-minded solution is to score vendors on their ease-of-use for each of the functions. Sounds good, and as soon as someone offers me a couple hundred thousand dollars to sit with a stopwatch and time users working with different systems, I’ll get right on it. Until that happens, I need a simpler but still reasonably objective approach. Ideally, this would allow me to create the scores based on the information I’ve already assembled in the Guide.

My current thinking is to come up with a list of specific system attributes that make it easy to do basic functions, and then create a single “ease of use for basic tasks” score for each vendor. As a practical matter, this makes sense because I don’t have enough detail to create separate scores for each function. Plus, I’m pretty sure that each vendor’s scores would be similar across the different functions. I also think most buyers would need similar levels of sophistication across the functions as well. So I think one score will work, and be much simpler for readers to deal with.

The result would be a matrix that looks something like the one below. It would presumably be accompanied by a one- or two-paragraph thumbnail description of each vendor:

| Vendor | Ease of Basic Tasks | Sophistication: email campaigns | Sophistication: Web forms/landing pages | Sophistication: nurture campaigns | Sophistication: lead scoring | Sophistication: Salesforce integration | Other Tasks (list) | Ease of Purchase (Cost) | Vendor Strength |
|---|---|---|---|---|---|---|---|---|---|
| Vendor A | 5 | 8 | 4 | 8 | 4 | 9 | 4 | 7 | 5 |
| Vendor B | 8 | 4 | 8 | 4 | 7 | 4 | 7 | 5 | 8 |
| Vendor C | 4 | 8 | 4 | 9 | 4 | 7 | 5 | 8 | 6 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
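A reader could turn a matrix like this into a shortlist by weighting the columns that matter most to them and ranking vendors on the weighted average. The Python sketch below uses the illustrative Vendor A/B/C scores from the table; the column keys and the buyer weights are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical keys for the matrix columns, in table order.
COLUMNS = ["ease_basic", "email", "forms", "nurture", "scoring",
           "sfdc", "other", "purchase", "strength"]

# Illustrative scores copied from the sample matrix.
RATINGS = {
    "Vendor A": [5, 8, 4, 8, 4, 9, 4, 7, 5],
    "Vendor B": [8, 4, 8, 4, 7, 4, 7, 5, 8],
    "Vendor C": [4, 8, 4, 9, 4, 7, 5, 8, 6],
}

def rank_vendors(priorities: Dict[str, float],
                 ratings: Dict[str, List[int]] = RATINGS) -> List[Tuple[str, float]]:
    """Sort vendors by the priority-weighted average of their 0-10 scores."""
    unknown = set(priorities) - set(COLUMNS)
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    total_weight = sum(priorities.values())
    results = []
    for vendor, scores in ratings.items():
        by_column = dict(zip(COLUMNS, scores))
        weighted = sum(w * by_column[c] for c, w in priorities.items())
        results.append((vendor, round(weighted / total_weight, 2)))
    return sorted(results, key=lambda pair: pair[1], reverse=True)

# A buyer with simple needs who cares about ease, price, and vendor strength.
simple_buyer = rank_vendors({"ease_basic": 3, "purchase": 2, "strength": 2})
# -> [('Vendor B', 7.14), ('Vendor C', 5.71), ('Vendor A', 5.57)]

# A buyer who needs sophisticated nurturing, scoring and Salesforce sync.
advanced_buyer = rank_vendors({"nurture": 3, "scoring": 3, "sfdc": 2})
# ranks Vendor A, then Vendor C, then Vendor B
```

The same matrix produces opposite winners for the two buyers, which is the reason the self-evaluation has to come first.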

So the first question is: would people find this useful? Remember that the detail behind the numbers would be available in the full Raab Guide but not as part of the free version.

The second question is: what attributes could support the “ease of basic tasks” score? Here is the set I’m considering at the moment. (Explanations are below; I’m listing them here to make this post a little easier to read):

- build a campaign as a list of steps.

- explicitly direct leads from one campaign to another.

- define campaign schedules and entry conditions at the campaign level, not for individual steps.

- select marketing contents from shared libraries.

- build decision rules from prebuilt functions for specific situations, such as ‘X web page visits in past Y days’.

- build split tests into content, not decision flows.

- explicitly define lead scoring as a campaign step, but have it point to a central lead scoring function.

- explicitly define lead transfer to sales as a campaign step, but have it point to a central lead transfer function.

These items are all fairly easy to judge: either a system works that way or it doesn’t. So in that sense they’re objective. But they’re subjective in that some people may not agree that they actually make a system easier to use for basic tasks. Again, I’d much prefer direct measures like the number of keystrokes or time required to complete a task. But I just don’t see a cost-effective way to gather that information.

Other measures I’d use in my scoring would be the amount of training provided during implementation, typical implementation time, and typical time for users to become proficient. These should be good indications of the difficulty of basic tasks. I do have some of this data, although it’s only what the vendors have told me. Answers will also be affected by differences in vendor deployment practices and in how sophisticated their users are. So I can’t give these too much weight.
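One way the yes/no checklist and a softer, vendor-reported measure might be blended into a single 0-10 score is sketched below in Python. The attribute keys, the 80/20 weighting, and the 1-to-9-week proficiency range are hypothetical choices, picked only to show the soft measure carrying limited weight.

```python
from typing import Set

# The eight attributes listed above, as hypothetical short keys.
ATTRIBUTES = [
    "campaign_as_step_list",
    "explicit_campaign_to_campaign_moves",
    "campaign_level_schedule_and_entry",
    "shared_content_libraries",
    "prebuilt_decision_functions",
    "split_tests_in_content",
    "scoring_step_uses_central_function",
    "sales_transfer_step_uses_central_function",
]

def checklist_score(features: Set[str]) -> float:
    """0-10 score: share of checklist attributes the system has."""
    unknown = features - set(ATTRIBUTES)
    if unknown:
        raise ValueError(f"not on the checklist: {sorted(unknown)}")
    return 10 * len(features) / len(ATTRIBUTES)

def scale_down(value: float, best: float, worst: float) -> float:
    """Map a 'lower is better' measure onto 0-10 (10 at best, 0 at worst)."""
    if value <= best:
        return 10.0
    if value >= worst:
        return 0.0
    return 10 * (worst - value) / (worst - best)

def ease_of_basic_tasks(features: Set[str], weeks_to_proficiency: float,
                        soft_weight: float = 0.2) -> float:
    """Blend the objective checklist with a lightly weighted, vendor-reported measure."""
    soft = scale_down(weeks_to_proficiency, best=1, worst=9)
    return round((1 - soft_weight) * checklist_score(features) + soft_weight * soft, 1)

# A system with six of the eight attributes whose users take about 7 weeks to learn it.
example = ease_of_basic_tasks(set(ATTRIBUTES[:6]), weeks_to_proficiency=7)   # 6.5
```

Even a poor proficiency figure only pulls the checklist's 7.5 down to 6.5, which matches the intent of not giving the vendor-reported numbers too much weight.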

Let me know what you think. Do these measures make sense? Are there others I can gather? Is there a better way to do this?

++++++++++

Explanations of the “ease of basic tasks” attributes:

- build a campaign as a list of steps. Each step can be an outbound message (email), an inbound message (landing page or form), or an action such as sending the lead for scoring or to another campaign. Campaigns can be saved as templates and reused, but the initial construction starts with a blank list. (This seems counterintuitive; surely it would be simpler to start with a prebuilt template? Yes for experienced marketers, but not for people who are just starting, who would have to explore the templates to figure out what they contain. For those people, it’s actually easier to start with something they’ve created for themselves and therefore fully understand.) Another aspect to this is that many marketers prefer a list of steps rather than a flow chart, even though they are logically the same. The reason is that simple campaigns tend to be very linear, so a flow chart adds more complexity than necessary. (Of all the items on this list, this is the one I’m probably least committed to. But the really simple systems I’ve seen do use lists more than flow charts, so I think there’s something to it.)

- explicitly direct leads from one campaign to another. Even basic marketing programs need to move leads from one treatment sequence to another, based on changes in lead status or behaviors. But building many different paths into a single campaign flow quickly becomes overwhelming. The easiest solution for basic marketing programs is to create separate, relatively simple campaigns and explicitly direct leads from one campaign to another at steps within the campaign designs. This approach works well only when there are relatively few campaigns in the program. More complex programs require other solutions, such as using rules to send different treatments to different people within the same campaign, having each campaign independently scan the database for leads that meet its acceptance criteria, or embedding small sequences (such as an email, landing page, thank you page and confirmation email) as a single step in a larger campaign. Systems built to handle sophisticated campaign requirements sometimes provide an alternative, simpler interface to handle simple campaign designs.

- define campaign schedules and entry conditions at the campaign level, not for individual steps. Basic marketing campaigns will have a single, campaign-level schedule and set of entry conditions. Even campaigns fed by other campaigns often impose additional entry criteria, for example to screen out leads who have already completed a particular campaign. Users will look for the schedule and entry conditions among the campaign attributes, not as attributes of the first step in the campaign or of a separate list attached to the campaign. More sophisticated marketing programs may also give each step its own schedule and/or entry conditions, instead of the campaign attributes or in addition to them.

- select marketing contents from shared libraries. The libraries contain complete documents with shared elements such as headers, footers, layouts and color schemes, plus a body of text and data entry fields. Marketers can either use a document as-is or make a copy and then edit it. In practice, nearly every system has this sort of library, so it’s not a differentiator. But it’s still worth listing just in case you come across a system that doesn’t. More sophisticated approaches, such as templates shared across multiple documents, only add efficiency for more advanced marketing departments.

- build decision rules from prebuilt functions for specific situations, such as ‘X web page visits in past Y days’. This saves users from having to figure out how to build those functions themselves, which can be quite challenging. In the example given, it would require knowing which field in the database is used to measure Web page visits, how to write a query that counts entries in that field, and how to limit the selection to a relative time period. There are a great many ways to get these wrong.

- build split tests into content, not decision flows. The easiest way to test different versions of an email or landing page is to treat the versions as a single item in the campaign flow, and have the system automatically serve the alternatives during program execution. The common alternative approach is to directly split the campaign audience, either at the start of the campaign or at a point in the flow just before the tested item. This results in a more complex campaign flow and is often more difficult to analyze.

- explicitly define lead scoring as a campaign step, but have it point to a central lead scoring function. This is a compromise between independently defining the lead scoring rules every time they’re used, and automatically scoring leads (after each event or on a regular schedule) without creating a campaign step at all. Independent score definitions are a great deal of extra work and can lead to painful inconsistencies, so it’s pretty clear why you would want to avoid them. Doing the scoring automatically is actually simpler, but may confuse marketers who don’t see the scoring listed as a step in their campaign flow.

- explicitly define lead transfer to sales as a campaign step, but have it point to a central lead transfer function. The logic here is the same as applies to lead scoring. A case could be made that transfer to sales should in fact be part of the lead scoring, but if nothing else you’d still want marketers to decide for each campaign whether sending a lead to sales should also remove the lead from the current campaign. Once you have campaign-level decisions of that sort, the process should be a step in the campaign flow.
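Taken together, these attributes describe a particular shape for a basic campaign. The Python sketch below is one hypothetical way to model it: a campaign-level entry condition built from a prebuilt “X visits in past Y days” function, a split test living inside a single email step, scoring and sales-transfer steps that call shared central functions, and an explicit move to another campaign. Every class, rule, and point value here is an assumption for illustration, not any vendor’s design.

```python
import itertools
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Callable, List, Optional

STOP = object()  # sentinel: leave the current campaign

# Central definitions shared by every campaign (hypothetical rules).
def central_score(lead: dict) -> int:
    return 10 * len(lead["visits"])          # e.g. 10 points per Web visit

def central_transfer(lead: dict) -> bool:
    return lead["score"] >= 30               # e.g. sales-ready at 30 points

def visits_in_past_days(x: int, y: int, now: datetime) -> Callable[[dict], bool]:
    """Prebuilt decision function: 'X web page visits in past Y days'."""
    cutoff = now - timedelta(days=y)
    return lambda lead: sum(t >= cutoff for t in lead["visits"]) >= x

@dataclass
class SplitEmail:
    """Split test built into the content: variants rotate inside one step."""
    variants: List[str]

    def __post_init__(self):
        self._rotation = itertools.cycle(self.variants)

    def run(self, lead, log):
        log.append(f"{lead['id']}: email {next(self._rotation)}")

class ScoreLead:
    """Campaign step that points to the central scoring function."""
    def run(self, lead, log):
        lead["score"] = central_score(lead)
        log.append(f"{lead['id']}: score {lead['score']}")

@dataclass
class SendToSales:
    """Campaign step that points to the central transfer rule."""
    leave_campaign: bool = True              # the per-campaign decision

    def run(self, lead, log):
        if central_transfer(lead):
            log.append(f"{lead['id']}: sent to sales")
            return STOP if self.leave_campaign else None

@dataclass
class MoveTo:
    """Explicitly direct the lead to another campaign."""
    target: str

    def run(self, lead, log):
        log.append(f"{lead['id']}: moved to {self.target}")
        return self.target

@dataclass
class Campaign:
    name: str
    entry: Callable[[dict], bool]            # campaign-level entry condition
    steps: list = field(default_factory=list)

    def run(self, lead, log) -> Optional[str]:
        """Run steps in order; return the next campaign's name, if any."""
        if not self.entry(lead):
            return None
        for step in self.steps:
            outcome = step.run(lead, log)
            if outcome is STOP:
                return None
            if outcome is not None:
                return outcome
        return None

now = datetime(2009, 2, 16)
nurture = Campaign("nurture", entry=visits_in_past_days(2, 7, now),
                   steps=[SplitEmail(["A", "B"]), ScoreLead(), SendToSales(), MoveTo("newsletter")])

log1, log2 = [], []
lead1 = {"id": "L1", "visits": [now - timedelta(days=d) for d in (1, 2, 20)], "score": 0}
lead2 = {"id": "L2", "visits": [now - timedelta(days=d) for d in (3, 5)], "score": 0}
next1 = nurture.run(lead1, log1)   # scores 30, goes to sales, leaves campaign
next2 = nurture.run(lead2, log2)   # scores 20, moved to "newsletter"
```

Because scoring and transfer only call the central functions, changing the rules in one place changes them for every campaign, while each campaign still shows those actions as visible steps.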

Friday, February 13, 2009

How Demand Generation Systems Handle Company Data: Diving into the Details

More than anything else, this exercise reinforced my understanding of how hard it is to answer a seemingly simple question about a software product’s capabilities. My original approach in the Guide had simply been to ask vendors whether they had a separate company table in their system. In theory, this would imply that company data is stored once and applied to all the associated individuals, and that the demand generation system could aggregate data by company, use that data to calculate company-level lead scores, and change which company an individual is linked to. This turns out not to be the case. So I had to specifically ask about each of those capabilities, and even those questions don’t necessarily have simple answers.

This type of complexity is why I’ve always avoided simple summary grids that hide all the gory details. I’m perfectly aware (and people remind me quite often, should I forget) that most people find the details overwhelming and really just want a simple way to select a few systems to consider.

But it just doesn’t work that way. Yes, you can screen on non-functional criteria like cost, technical skills required, and vendor stability (not that those are exactly simple, either). But let’s say that leaves you with a dozen vendors, and you use some gross criteria to select the top three. If it turns out once you drill into details that two are missing some small-but-critical feature, you have to either go with the one remaining or start the process again with another set of candidates.

Starting again would be fine if you had the time, but let's face it: here in the real world you'll be under pressure to make a choice and will probably just choose whichever vendor remains standing. This isn’t necessarily so terrible, although your negotiating position will be weak and you might have missed another, better product.

But what if all three vendors fail on one detail or another? Now you’re really in trouble.

The only way to avoid these scenarios is to review the details up front. Thankfully, you don’t have to review all the details, but can limit yourself to the details that matter. Of course, this means you have to know what those details are, which in turn requires still more preliminary work. I was going to (and still may) write a separate post about this, but basically that means you have to lay out the details of the marketing programs you expect to execute with the system, and then identify the features needed to support those programs.
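The up-front review can be as simple as listing the features each planned program needs and checking every candidate against the combined list before any ranking happens. Here is a minimal Python sketch; the program names, feature keys, and vendor inventories are hypothetical, not data from the Guide.

```python
from typing import Dict, Set

# Hypothetical programs and the features each one needs.
PROGRAMS: Dict[str, Set[str]] = {
    "webinar follow-up": {"outbound_email", "landing_pages", "multi_step_nurture"},
    "account-based scoring": {"company_table", "company_level_scores"},
}

# Hypothetical vendor feature inventories (not real vendor data).
VENDORS: Dict[str, Set[str]] = {
    "Vendor A": {"outbound_email", "landing_pages", "multi_step_nurture", "company_table"},
    "Vendor B": {"outbound_email", "landing_pages", "multi_step_nurture",
                 "company_table", "company_level_scores"},
    "Vendor C": {"outbound_email", "landing_pages"},
}

def screen(vendors: Dict[str, Set[str]], programs: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Return each vendor's missing must-have features (empty set = passes)."""
    needed = set().union(*programs.values())
    return {name: needed - have for name, have in vendors.items()}

gaps = screen(VENDORS, PROGRAMS)
survivors = sorted(name for name, missing in gaps.items() if not missing)   # ['Vendor B']
```

Listing the gaps, rather than just a pass/fail flag, shows which small-but-critical feature knocked each vendor out, so the shortlist can be revisited if a program requirement turns out to be negotiable.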

The good news here is you’ll need to lay out those programs anyway once you start using the system, so this is just a matter of time-shifting the work rather than adding it. Of course, doing more work now isn't easy, since you probably don't have a lot of free time. But knowing what you need will actually make the project go faster as well, so the payback will come fairly quickly.

So, the bottom line is that you really do need to look at product details early in the selection process. That said, I do think it’s possible to produce summaries that are linked to details, so people can more easily screen vendors against the summary criteria and then only look at the details of the most promising. I’m working on incorporating something along those lines into the Raab Guide.

Ok, now for the company-level data itself. Here are the questions I asked, with summaries of answers from the five vendors in the current Raab Guide (Eloqua, Manticore Technology, Market2Lead, Marketo, Vtrenz) plus two I’ll be adding shortly (Marketbright and Neolane). As usual, even though I’ve looked at these products in detail, I’m ultimately reporting what the vendors told me. ("SFDC" stands for Salesforce.com.)

| Question | Eloqua | Manticore | Market2Lead | Marketo | Vtrenz | Marketbright | Neolane |
|---|---|---|---|---|---|---|---|
| Is there a distinct company table linked in a one-to-many relationship with individual records? | yes, link set by Eloqua | no | yes, link set by SFDC | yes, link set by SFDC | no | yes, link set by SFDC | yes; typically use SFDC link but client could set own |
| Are changes in company-level data copied to CRM company (account) records? | yes, if client chooses | no | no | not now; next release will allow client to choose | no | yes, if client chooses | yes, if client chooses |
| Can the demand generation system establish or modify company-to-individual relationships, and have these changes apply to CRM records? | yes | no | no | not now | no | no | yes, if client chooses |
| Do demand generation reports give a consolidated company-wide view of activities (i.e., combined activities for all individuals associated with a company)? | no; available in SFDC | no; available in SFDC | yes | no; available in SFDC | possible with special effort | yes for Web activities | yes |
| Can demand generation lead scores be based on company-wide data (i.e., create a company-level score in addition to individual-level scores)? | yes for attributes, no for behaviors | no | yes | yes | no | yes | yes |
| If company-level scores are possible, can they be created within the normal score-building interface? | yes | n/a | yes | yes | n/a | yes | yes |

As you see, the answers even at this level of detail are more than simple yes or no. In the case of the first question, which is a restatement of the original question about whether a separate company table exists, “yes” answers must be extended to clarify whether the link between that table and the individual records is imported from Salesforce.com or can be set within the demand generation system. I explored this in most depth with Marketo, who clarified that any individual NOT linked to a company by Salesforce.com will be given its own company record (a one-to-one relationship), even if the database contains several individuals from the same organization. Users can edit that company data, but not the company data imported from Salesforce.
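Marketo’s fallback rule is easier to see in code. This Python sketch assumes hypothetical field names (`crm_account_id`, `company_name`) and a `local-` id prefix; it shows the one-to-many link for CRM-linked people, a one-to-one company record for everyone else, and the restriction that only locally created company records can be edited. It illustrates the behavior as described above, not Marketo’s implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Company:
    company_id: str
    name: str
    from_crm: bool                          # imported from a CRM account record
    members: List[str] = field(default_factory=list)

    def rename(self, new_name: str) -> None:
        if self.from_crm:
            raise PermissionError(f"{self.company_id} comes from the CRM; edit it there")
        self.name = new_name

def assign_companies(people: List[dict], crm_accounts: Dict[str, str]) -> Dict[str, Company]:
    companies = {acct_id: Company(acct_id, name, from_crm=True)
                 for acct_id, name in crm_accounts.items()}
    for person in people:
        acct_id = person.get("crm_account_id")
        if acct_id in companies:
            companies[acct_id].members.append(person["email"])   # one-to-many
        else:
            # Unlinked person: own company record (one-to-one), even if a
            # colleague from the same organization is already in the database.
            local_id = f"local-{person['email']}"
            companies[local_id] = Company(local_id, person.get("company_name", ""),
                                          from_crm=False, members=[person["email"]])
    return companies

crm = {"001A": "Acme Corp"}
people = [
    {"email": "ann@acme.com", "crm_account_id": "001A"},
    {"email": "bob@acme.com", "crm_account_id": "001A"},
    {"email": "cy@acme.com", "company_name": "Acme"},
    {"email": "di@acme.com", "company_name": "Acme"},
]
companies = assign_companies(people, crm)            # 3 company records, not 1
companies["local-cy@acme.com"].rename("Acme Corporation")   # allowed: local record
try:
    companies["001A"].rename("ACME")                 # blocked: CRM-owned record
    crm_edit_blocked = False
except PermissionError:
    crm_edit_blocked = True
```

Note that the two unlinked Acme people end up with separate company records, so any company-level aggregation would treat them as two companies.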

Market2Lead and Marketbright also use the company data and links imported from Salesforce.com. But while Market2Lead matches Marketo's policy of not changing company data in Salesforce.com, Marketbright lets clients decide what to do on a field-by-field basis, and even set different rules for different cases within the same field. (For example, you might want to let a demand generation user add data to a blank field, but not overwrite data where it exists.)
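A field-by-field approach like Marketbright’s can be pictured as a small policy table. In this Python sketch the rule names, field names, and the particular policy are hypothetical; the `FILL_BLANK` rule implements the example just given (add data to a blank field, but don’t overwrite existing data).

```python
from enum import Enum
from typing import Dict

class Rule(Enum):
    NEVER = "never"            # demand generation value never goes to the CRM
    FILL_BLANK = "fill_blank"  # write only when the CRM field is empty
    OVERWRITE = "overwrite"    # always write

# Hypothetical per-field policy chosen by the client during implementation.
POLICY: Dict[str, Rule] = {
    "industry": Rule.FILL_BLANK,
    "phone": Rule.OVERWRITE,
    "annual_revenue": Rule.NEVER,
}

def sync_to_crm(crm_record: dict, dg_record: dict, policy: Dict[str, Rule] = POLICY) -> dict:
    """Return a copy of the CRM record after applying field-level sync rules."""
    updated = dict(crm_record)
    for field_name, rule in policy.items():
        value = dg_record.get(field_name)
        if value in (None, ""):
            continue                              # nothing to send
        if rule is Rule.OVERWRITE or (rule is Rule.FILL_BLANK and not updated.get(field_name)):
            updated[field_name] = value
    return updated

dg = {"industry": "Healthcare", "phone": "555-0199", "annual_revenue": "12M"}
result = sync_to_crm({"industry": "", "phone": "555-0100", "annual_revenue": "10M"}, dg)
kept = sync_to_crm({"industry": "Manufacturing", "phone": "555-0100", "annual_revenue": "10M"}, dg)
```

In `result` the blank industry is filled and the phone is overwritten, while revenue is untouched; in `kept` the existing industry survives.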

Just to add a bit more confusion: Marketo itself is changing its system to let clients decide during implementation whether users can override the Salesforce links and company data. Apparently some Marketo clients really wanted to do this, while others were firmly opposed.

The other especially knotty question is the one about company-level lead scores. All vendors with company tables can generate scores based on the data attributes in the company records. But I had also intended that question to include aggregate behavior of all individuals associated with a company – such as total emails opened or the date of the most recent Web site visit by anyone in the group.

Eloqua volunteered that they couldn’t do this, which I appreciated. The only other vendor I explored this with in detail was Marketo. They can in fact use behaviors in company scores, but only for individuals linked in Salesforce.com and only by using separate rules to assign points to individuals and to companies. That is, a Web download would have one rule to assign points to individual-level scores and another to assign points to the company score. This isn’t quite the same as building the company calculation by examining each individual independently. For example, Marketo's method can't limit the impact of a single hyperactive individual on the company score.
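The difference between the two approaches is easy to miss in prose, so here is a Python sketch of both: separate per-event rules for individual and company scores, versus scoring each individual first and rolling up with a per-person cap. The point values, the cap of 10, and the sample events are hypothetical; neither function is meant to reproduce any vendor’s actual scoring engine.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

POINTS = {"web_download": 5, "email_open": 1}   # hypothetical point values
PER_PERSON_CAP = 10                             # assumed max contribution per person

Event = Tuple[str, str, str]                    # (person, company, event type)

def dual_rule_scores(events: List[Event]) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Each event fires one rule for the person and a separate rule for the company."""
    person_scores: Dict[str, int] = defaultdict(int)
    company_scores: Dict[str, int] = defaultdict(int)
    for person, company, event in events:
        person_scores[person] += POINTS[event]      # individual-level rule
        company_scores[company] += POINTS[event]    # separate company-level rule
    return dict(person_scores), dict(company_scores)

def capped_company_scores(events: List[Event], cap: int = PER_PERSON_CAP) -> Dict[str, int]:
    """Score each individual first, then roll up with a per-person cap."""
    per_person: Dict[str, int] = defaultdict(int)
    company_of: Dict[str, str] = {}
    for person, company, event in events:
        per_person[person] += POINTS[event]
        company_of[person] = company
    company_scores: Dict[str, int] = defaultdict(int)
    for person, score in per_person.items():
        company_scores[company_of[person]] += min(score, cap)
    return dict(company_scores)

events = (
    [("hal", "Acme", "web_download")] * 6            # one hyperactive individual
    + [("ann", "Acme", "email_open")] * 3
    + [("zoe", "Zenith", "web_download")] + [("zoe", "Zenith", "email_open")] * 2
    + [("zack", "Zenith", "web_download")] * 2 + [("zack", "Zenith", "email_open")]
)
_, dual = dual_rule_scores(events)        # Acme 33, Zenith 18
capped = capped_company_scores(events)    # Acme 13, Zenith 17
```

With the dual rules, one prolific downloader puts Acme well ahead; with the capped roll-up, Zenith’s broader engagement wins.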

This is pretty picky stuff, but that’s exactly the point: people who really care about these things tend to be pretty picky about the details. They should make sure they understand them before they buy a product, rather than risk unpleasant surprises after the fact.

Thursday, February 12, 2009

Infusionsoft: Impressive Marketing Power for a Very Low Price

The point of the story, other than showing why economists are poor comedians, is that the market is not always perfectly efficient. I suppose no one needs reminding of that in today’s economic situation. But most of us still assume there is a reasonable relationship between price and value. This is why it’s hard to imagine that low-priced software can deliver similar performance to mainstream products.

Now we come to Infusionsoft, which offers marketing automation, CRM and ecommerce for as little as $199 per month. Like a free breakfast at Denny’s, that sounds too good to be true. But the company has been around since 2001 and has thousands of customers, so there must be something to it. At least it’s worth a closer look.

I took that look last week and came away impressed. The company’s marketing is tightly targeted at very small businesses (under 25 employees) but its marketing features are competitive with demand generation products aimed at much larger firms. Three of the five core demand generation functions are clearly there: outbound email, Web forms, and lead nurturing campaigns. Of the other two, lead scoring is primitive at best (I only saw an ability to apply segment tags, which I suppose is all you really need; the company says lead scoring is available “but we don’t advertise it because we haven’t made it easy enough within the software yet”). The fifth core function, integration with Salesforce.com, is not provided because Infusionsoft has its own sales automation capabilities. If you really wanted it, the system does provide an API that would let someone with the right skills set it up.

If advanced lead scoring or Salesforce.com integration is a show stopper for you, then read no further. If not, the marketing automation functions in Infusionsoft are worth considering. Although I mentioned only email campaigns before, users can in fact import lists and then execute email, fax and voice broadcast from within Infusionsoft, or extract lists for direct mail, call center or other external vendors. Simple telemarketing could also be handled within the system using its CRM features. The system includes an email builder with the usual features such as personalization and required “unsubscribe” links. Infusionsoft enforces double opt-in email procedures, monitors its clients’ results closely, and has a “three strikes” policy to educate and, if necessary, remove clients who violate the rules.

Users can create landing pages and forms within the system, although they must load the HTML onto their own Web sites since Infusionsoft doesn’t host them. This differs from other demand generation vendors, who nearly always host those pages themselves. The practical impact is nil, since the Infusionsoft forms do post data to the client's Infusionsoft-hosted marketing database. I suspect the difference reflects the small-company orientation of Infusionsoft: while marketing departments in larger companies want to be independent of their Web team, Infusionsoft clients probably don't have a Web team separate from marketing (if they have a Web team at all).

Users can also specify the activities that follow submission of a form or other customer interaction. This is where the real power of Infusionsoft shines through. The activities can include assigning the lead to a sequence of follow-up messages, assigning it a tag for later segmentation, or sending it to a salesperson or affiliate, either directly or through a round robin distribution. Different answers on Web forms can be linked to different sequences, and users can add filters that determine whether these actions take place. Subsequent events can add leads to new sequences and remove them from existing ones, thereby adjusting to customer behavior.
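The follow-up logic described above can be sketched in a few lines of Python. The answer-to-sequence mapping, the company-size filter, the rep names, and the sequence names are all hypothetical; this shows the general pattern (sequence assignment, tagging, filtered round-robin hand-off, and later events that swap sequences), not Infusionsoft’s actual configuration or API.

```python
import itertools
from dataclasses import dataclass, field
from typing import Optional, Set

SALES_REPS = itertools.cycle(["Rep 1", "Rep 2"])     # round-robin distribution
ANSWER_TO_SEQUENCE = {"buying this quarter": "fast-track", "just researching": "education"}

@dataclass
class Lead:
    email: str
    tags: Set[str] = field(default_factory=set)
    sequences: Set[str] = field(default_factory=set)
    owner: Optional[str] = None

def on_form_submit(lead: Lead, answer: str, company_size: int) -> None:
    """Actions triggered by a form submission."""
    sequence = ANSWER_TO_SEQUENCE.get(answer, "education")  # answer picks the sequence
    lead.sequences.add(sequence)
    lead.tags.add(f"answered:{answer}")                     # tag for later segmentation
    if sequence == "fast-track" and company_size >= 5:      # filter on the hand-off
        lead.owner = next(SALES_REPS)

def on_purchase(lead: Lead) -> None:
    """A later event removes the lead from its sequences and starts a new one."""
    lead.sequences.clear()
    lead.sequences.add("customer-onboarding")
    lead.tags.add("customer")

a, b, c = Lead("a@example.com"), Lead("b@example.com"), Lead("c@example.com")
on_form_submit(a, "buying this quarter", company_size=10)   # owner: Rep 1
on_form_submit(b, "buying this quarter", company_size=12)   # owner: Rep 2
on_form_submit(c, "just researching", company_size=50)      # education, no owner
on_purchase(a)
```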

The result is enough fine-grained control over lead treatment to satisfy all but the most demanding marketers. To me, this is the essence of a lead nurturing system and probably the critical feature of demand generation in general. After all, any email client can send an email and any Web system can put up a landing page. It’s the multi-step, behavior-dependent campaign logic that’s otherwise hard to come by.

That said, the campaign manager you’re getting here is not as polished as the best mainstream demand generation systems. To that extent, at least, you get what you pay for. There’s no flow chart to visualize campaign flows and no easy way to do split testing. Nor is there branching within a sequence, although you could achieve the same effect by having one sequence feed into several other sequences with different entry conditions.
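The branching workaround mentioned above can be sketched as a feeder sequence whose end hands the lead to whichever downstream sequences it qualifies for. This is a hypothetical Python illustration, not Infusionsoft's configuration; the sequence names and the `employees` and `clicked` fields are assumptions.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical downstream sequences, each guarded by an entry condition.
# Together they behave like a branch at the end of a "welcome" sequence.
DOWNSTREAM: List[Tuple[str, Callable[[Dict], bool]]] = [
    ("enterprise-track", lambda lead: lead["employees"] >= 1000),
    ("smb-track", lambda lead: lead["employees"] < 1000 and lead["clicked"]),
    ("re-engage", lambda lead: not lead["clicked"]),
]

def route_after_welcome(lead: Dict) -> List[str]:
    """Return every downstream sequence whose entry condition the lead meets."""
    return [name for name, condition in DOWNSTREAM if condition(lead)]
```

Note that a large company that never clicked lands in two sequences at once. A true branch sends each lead down exactly one path, so with this approach the marketer has to keep the entry conditions mutually exclusive by hand.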

(Side note: Infusionsoft has a public ideas forum for users to suggest and vote on enhancements. Split testing currently ranks number five, behind four refinements to the ecommerce features. This probably means that most users find the marketing features relatively adequate, at least compared with the ecommerce capabilities, which definitely looked much less mature. The public forum itself certainly shows a healthy attitude by Infusionsoft towards its own customers. The company also has excellent online documentation and an active user forum for questions and answers.)

On the other hand, Infusionsoft actually does a better job than some demand generation systems of tracking marketing costs. Users can attach a fixed cost and per-response cost to each lead source, which can be an outbound marketing campaign or an inbound source such as Web ads, trade shows, or traditional advertising. Users can also attach a piece cost to each message in a sequence. Revenue for individual customers can be captured with the shopping cart or from opportunities in the CRM system.

This information is presented in a variety of standard reports, although, perplexingly, I couldn’t find one that related the cost of a campaign to the revenue from its respondents. Reports do track response rates, conversion rates, and movement of opportunities through stages in the sales funnel. Standard reports can be run against user-specified date ranges and sometimes against user-specified customer segments. Users can’t create their own reports within the system, but can export their data to analyze elsewhere.
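The missing cost-versus-revenue report is simple arithmetic once the cost elements described above are captured. The Python sketch below assumes campaign cost is the lead source's fixed cost plus its per-response cost times responses, plus a piece cost per message sent. The function names and the trade show figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable

@dataclass
class LeadSource:
    name: str
    fixed_cost: float         # e.g. a trade show booth fee
    cost_per_response: float  # e.g. the cost of each lead from a Web ad

def campaign_cost(source: LeadSource, responses: int,
                  messages_sent: int, piece_cost: float) -> float:
    """Fixed cost + per-response cost + per-message piece cost."""
    return (source.fixed_cost
            + source.cost_per_response * responses
            + piece_cost * messages_sent)

def campaign_roi(cost: float, respondent_revenue: Iterable[float]) -> float:
    """Return on investment: (respondent revenue - cost) / cost."""
    return (sum(respondent_revenue) - cost) / cost
```

For example, a hypothetical trade show with a $5,000 fixed cost, $10 per response, 200 responses and 600 follow-up messages at $0.50 each costs $7,300. If two respondents later buy $4,000 and $6,000, the ROI is 2,700 / 7,300, or about 37%.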

User rights (i.e., which users can do what) are actually more fine-grained in Infusionsoft than in many demand generation systems. Infusionsoft needs the control because it will be used by more people throughout the company than a typical marketing system is. Infusionsoft also includes contact management and project workflow features, such as tracking tasks and appointments, that aren’t found in most demand generation products.

Infusionsoft pricing starts at $199 per month for a system limited to 10,000 leads and 25,000 emails per month. This version has pretty much all the features needed for demand generation. Going to $299 per month adds ecommerce, sales automation, affiliate management and the API. Even people who don’t need those features might pay the extra money just to move the volume limits to 100,000 leads and 100,000 emails and go from two to four users. $499 per month buys the same features but higher volumes and one more seat. Clients can also add seats for $59 per month for the base version or $79 per month for the two higher versions. Implementation costs range from $1,999 to $5,999 depending on the version. [Note: in July 2009, Infusionsoft dropped its implementation fees. Other prices were unchanged.]
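The seat pricing above makes the tier choice less obvious than it looks. This small Python sketch uses the February 2009 list prices quoted in this post (two users included at $199, four at $299, five at $499) and ignores the volume limits, feature differences and implementation fees, which is a simplifying assumption.

```python
from typing import Dict

# Infusionsoft list prices as described in this post (February 2009).
TIERS: Dict[int, Dict[str, int]] = {
    199: {"included_users": 2, "extra_seat": 59},
    299: {"included_users": 4, "extra_seat": 79},
    499: {"included_users": 5, "extra_seat": 79},
}

def monthly_cost(tier: int, users: int) -> int:
    """Base tier price plus any seats beyond those included."""
    plan = TIERS[tier]
    extra_seats = max(0, users - plan["included_users"])
    return tier + extra_seats * plan["extra_seat"]

def cheapest_tier(users: int) -> int:
    """Tier with the lowest monthly bill for a given head count,
    ignoring volume limits and feature differences."""
    return min(TIERS, key=lambda tier: monthly_cost(tier, users))
```

With four users, the base tier costs $199 + 2 × $59 = $317, while the $299 tier already includes four seats, so the upgrade actually saves money before counting its higher volume limits.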

Any way you slice it, Infusionsoft would be a tremendous value for a marketing department that could use it instead of a mainstream demand generation system. That’s not to say the choice is a slam-dunk: you may need some of the missing features, and there are other demand generation products that also underprice the mainstream vendors (see last week’s post). Still, if money is tight and your needs are limited, Infusionsoft is certainly an option to consider.

Wednesday, February 11, 2009

Tuesday, February 10, 2009

Blog Posts I'll Never Write (With Apologies to Borges)

In that same spirit, here are summaries of three blog posts I doubt I’ll ever have time to actually write.

1. The Future of Technology Analysts. This is a reaction to a blog post Do CRM Analysts Provide Value for Money? by veteran consultant Graham Hill. Basically he concludes that the ‘big-name’ analysts don’t tell him much that he can’t pick up from his own experience or other sources like blogs, books and academic papers. He does feel that ‘niche’ analysts provide more value.

As a ‘niche’ analyst myself, I tend to agree. But the more important issue is the movement away from traditional analysts to a community or peer-to-peer model. Rather than listen to expensive experts, marketers want to hear from other marketers, whom I think they perceive as more practical and less self-interested. This implies a change in the business model of analysts and consultants, who must deliver concrete business solutions rather than simply offering knowledge that clients can’t get elsewhere.

This is probably a good thing in the long run, although it does raise the question of who will do original research when no one will pay the analysts for the results. (This is a special case of the larger issue raised by the Internet, where professional journalists are squeezed out by volunteers who work for free but may not do a thorough or accurate job.) People will need to evolve new mechanisms to judge the value of different information sources—a problem that is widely recognized but nowhere near being solved.

2. A Cookbook for Demand Generation. Probably the biggest obstacle to selling demand generation systems is that many marketers don’t know what to do with them. This reminds me of the little cookbooks that come with kitchen appliances, which give a few simple recipes that make use of the appliances’ capabilities. How about a little demand generation cookbook that would describe a dozen or so starter projects, each with a list of ingredients (emails, landing pages, etc.), step-by-step instructions for setting them up, and maybe a snapshot of the expected results? I guess we could call it “The Joy of Demand Gen.” The goal is to make this as non-intimidating as possible.

3. Taxonomy for Twitter Analytics. I think there is a hierarchy of analytical methods for Twitter and other social media. The simplest layer would be to count mentions of a company or product. The next would be to list the people who are making those mentions, ranked by frequency. Then you’d measure the influence of those people based on number of followers, links, reposts/retweets, etc. Next look at the content of the mentions: are they positive or negative? Finally, really understand the content, taking into account things like sarcasm and emoticons, and maybe convert this into some sort of long-term attitude measure (enthusiastic, skeptical, objective, etc.). Most or all of these measures are available to some degree, but I don’t think I’ve seen them all linked together in a single solution.
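The first four layers of that hierarchy can be sketched in a few lines of Python. Everything here is a hypothetical illustration: the post structure (`author`, `followers`, `text`), the use of follower count as the only influence measure, and the tiny word lists. The naive word-count sentiment also illustrates why the top layer (sarcasm, emoticons, long-term attitude) is the hard part.

```python
import re
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical word lists; a real system would need far more than this.
POSITIVE = {"love", "great", "fast"}
NEGATIVE = {"hate", "broken", "slow"}

def mentions(posts: List[Dict], term: str) -> List[Dict]:
    """Layer 1: posts that mention the company or product."""
    return [p for p in posts if term.lower() in p["text"].lower()]

def top_authors(hits: List[Dict]) -> List[Tuple[str, int]]:
    """Layer 2: who is making the mentions, ranked by frequency."""
    return Counter(p["author"] for p in hits).most_common()

def influence(hits: List[Dict]) -> List[Tuple[str, int]]:
    """Layer 3: authors ranked by follower count (one crude influence proxy)."""
    reach: Dict[str, int] = {}
    for p in hits:
        reach[p["author"]] = max(reach.get(p["author"], 0), p["followers"])
    return sorted(reach.items(), key=lambda kv: kv[1], reverse=True)

def polarity(text: str) -> int:
    """Layer 4: positive minus negative word count; blind to sarcasm."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return len(words & POSITIVE) - len(words & NEGATIVE)
```

Linking these together in one pipeline (mentions, then authors, then influence, then tone) is the kind of single solution the paragraph says is hard to find.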

Wednesday, February 04, 2009

Low Cost Systems for Demand Generation

But what really concerns me is that these people are apparently limiting their consideration to just those two products. I do recognize that they are the best known vendors in the space (with apologies to Vtrenz, whose identity is somewhat blurred since its purchase by Silverpop). But there are plenty of other options, particularly for marketers with limited budgets. Marketo is certainly a fine product, but marketers should still look around before picking it by default. Here are some alternatives that will come in at or below Marketo’s published starting price of $2,400 per month (or $1,500 for their “Lite” version). (To be fair, many of the vendors below charge an installation fee that can be several thousand dollars or more, while Marketo doesn't. But even including that, the first-year cost for most of these will be less.)

Manticore Technology: a full-featured demand generation product. See my 2007 blog entry for some information or buy the Raab Guide to Demand Generation Systems for a detailed review. (Just kidding...or am I?) Pricing on their Web site is quite close to Marketo’s: $1,000 a month for a limited edition and $2,400 per month for all the bells and whistles, and no extra charge for installation.

Pardot: another pretty powerful product; see my blog review from December 2008. But much better pricing at the low end: $750 per month for the smallest complete system, and $1,250 per month for something that should be adequate for larger firms.

OfficeAutoPilot: this is the current incarnation of what used to be Moonray. I took a detailed look at a beta of the next release a couple of weeks ago and liked both the interface and breadth of functionality. I’m just waiting for the official release (due in late February) to publish a detailed review. [Click here to read the review, published in April.] Pricing for a full-featured system was $597 per month—quite a bargain. Even cheaper options are available if you need fewer functions.

Treehouse Interactive: another company I spoke with recently, and another one I’m not writing about yet because they showed me some features that won’t be released for a while (early March). [Click here to read my March 18 review.] The company has a low profile but has been selling its demand generation product for nearly ten years. It offers a pretty complete set of features, with shortfalls in some areas balanced by strengths in others. More important to people who need it, the company offers partner management and channel sales management products that integrate with its demand generation offering. Pricing starts at $599 per month.

Infusionsoft: I spoke with them just yesterday, and there’s nothing preventing me from writing about them in detail except that I do like to sleep occasionally. Maybe next week. [I got to it on February 12. Read it here.] They aim to be a complete business operating system for very small companies (under 25 employees). So they offer not just demand generation but everything from contact management to e-commerce. However, marketing is their core function and they provide a decent set of features, although certainly not as polished as some other products I’ve mentioned. On the other hand, pricing starts at $199 per month for a 2 user system with pretty much all of their marketing features, and you’d be hard-pressed to spend more than $500 per month unless you want lots of users for non-marketing functions such as sales automation or order entry. Although you may not be familiar with them, the company is five years old and has more than 12,000 (yes, that's twelve thousand) clients.

Active Conversion: I had a chat with president Fred Yee last August although I didn’t publish a detailed review. [I did publish a real review in July 2009.] They focus on email nurturing campaigns and marketing measurement. The system didn’t do landing pages or forms when I spoke with Fred, although he tells me it does now. Pricing starts at $250 per month and averages around $500.

Act-On Software: a slightly different take, with strong Webinar support and an option to use its own low-cost sales automation system as an alternative to Salesforce.com. Price starts at $499 per month. I reviewed it here in March.
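The first-year claim above is easy to check with a couple of lines of arithmetic. This Python sketch assumes a flat monthly price for twelve months plus a one-time installation fee. Since most vendors' installation fees aren't given here, the second function instead computes how large a fee could be before a vendor's year-one cost exceeded Marketo's. The $1,999 Infusionsoft figure is the low end of the implementation fee range quoted in the Infusionsoft review, and pairing it with the $199 version is an assumption.

```python
def first_year_cost(monthly: float, install: float = 0) -> float:
    """Twelve months of subscription plus a one-time installation fee."""
    return 12 * monthly + install

def install_headroom(monthly: float, rival_first_year: float) -> float:
    """Largest one-time fee that keeps year one at or below the rival's cost."""
    return rival_first_year - 12 * monthly

marketo = first_year_cost(2400)          # no installation fee
marketo_lite = first_year_cost(1500)
infusionsoft = first_year_cost(199, install=1999)
pardot_vs_lite = install_headroom(750, marketo_lite)
```

Even against Marketo Lite ($18,000 in year one), Pardot's smallest system ($9,000 in subscriptions) could absorb a $9,000 installation fee and still break even, and Infusionsoft comes to $4,387 with its fee included.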

Before I get any complaints from other vendors in the industry, let me stress that the systems I've listed above are ones that I know have low price points. My own consideration set also includes Marketbright, Market2Lead, MarketingGenius, LeadLife, LoopFuse, LeadGenesys, eTrigue and SalesFusion360, although I haven't looked at all of those in detail.

Obviously you need to evaluate these products in depth before deciding which is right for you. There are free papers on the Raab Guide site that can help you organize this process and of course I do consult in this area for a living. But the point of today's post isn't that you should run a thorough selection project. It's simply that you should recognize that you do have choices, and take advantage of them.

Monday, February 02, 2009

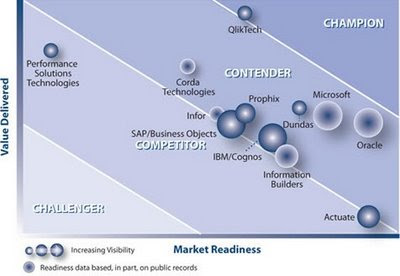

QlikView Is Champion In Aberdeen AXIS (But Is This Graph Necessary?)

Here is the AXIS itself, with QlikView alone at the top:

And therein lies the problem. Fond as I am of QlikView, I can’t imagine any measure by which it would be a dominant business intelligence tool, let alone rank so far above its competitors. So I was more than a little perplexed to see the Aberdeen ranking, especially because I wasn’t aware that Aberdeen produced something similar to the Forrester “waves” and Gartner “magic quadrants”.

Indeed, it turns out that the AXIS program is brand new. The BI report is the second to be issued but will be followed by several per quarter. Aberdeen’s materials describe its reports as “unlike other comparative products that primarily focus on feature and functionality”, but to my eye it looks pretty similar. Apparently what makes it “the market’s first technology solution software provider assessment tool that is truly customer-centric” is that one of its two dimensions, “value delivered”, is based on Aberdeen’s surveys that identify best-in-class companies.

Exactly how this relates to the vendor rankings is unclear, although I could find out if I paid $895 for the report. Maybe a higher “value delivered” ranking means the product is used by more best-in-class companies, although I rather doubt it given QlikView's still-limited market penetration. More likely, the higher ranked products are viewed by users as delivering greater value, perhaps with some weighting towards the relative performance of the companies doing the ranking. I’ve no doubt that QlikView users are more enthusiastic than any other vendors’, both because it truly is a great product but also because it’s still in the relatively early adopter stage where users tend to be highly motivated and vocal. Perhaps Aberdeen looks at the features desired by best-in-class companies and compares those to the features delivered by the different products, although this too seems unlikely.

The second dimension of “market readiness” is described by Aberdeen as based on “evaluation of responses to a standardized vendor questionnaire, analyst briefings, public records and customer interviews.” This sure sounds like a conventional feature-and-function assessment to me.

As someone who has been evaluating software for many years, I fully appreciate the appeal of these sorts of matrices. Vendors love them because, if they’re ranked near the top, it gives them something to crow about. Buyers love them because they can save work by considering only the top-ranked alternatives (even though vendors piously warn this is inappropriate). The analyst firms love them because they get lots of publicity, both directly because the press loves a horse race and from the winning vendors who promote them.

I have always avoided producing such rankings, even when people ask for them, precisely because they make it too easy for buyers to avoid the essential work of assessing products against their own needs. Still, the commercial advantages of the rankings are so great that I may yet feel impelled to produce them. That being the case, I can’t really criticize Aberdeen for rolling out their own. But I do hope they make a serious effort at educating people on what the "AXIS" means and how it should and shouldn’t be used.

Thursday, January 29, 2009

SQLStream Simplifies Event Stream Processing

SQLStream’s particular claim to fame is that its queries are almost identical to garden-variety SQL. Other vendors in this space apparently use more proprietary approaches. I say “apparently” because I haven’t researched the competition in any depth. A quick bit of poking around was enough to scare me off: there are many vendors in the space and it is a highly technical topic. It turns out that stream processing is one type of “complex event processing,” a field which has attracted some very smart but contentious experts. To see what I mean, check out Event Processing Thinking (Opher Etzion) and Cyberstrategics Complex Event Processing Blog (Tim Bass). This is clearly not a group to mess with.

That said, SQLStream’s more or less direct competitors seem to include: Coral8, Truviso, Progress Apama, Oracle BAM, TIBCO BusinessEvents, KX Systems, StreamBase and Aleri. For a basic introduction to data stream processing, see this presentation from Truviso.

Back to SQLStream. As I said, it lets users write what are essentially standard SQL queries that are directed against a data stream rather than a static table. The data stream can be any JDBC-accessible data source, which includes most types of databases and file structures. The system can also accept streams of XML data over HTTP, which includes RSS feeds, Twitter posts and other Web sources. Its queries can also incorporate conventional (non-streaming) relational database tables, which is very useful when you need to compare streamed inputs against more or less static reference information. For example, you might want to check current activity against a customer’s six-month average bank balance or transaction rate.
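Conceptually, the bank example above is a continuous query that joins each incoming stream record to a static table and filters the result. The sketch below shows that idea as a plain Python generator. It is not SQLStream's SQL dialect or API, and the `SIX_MONTH_AVG` table, field names and "three times the average" threshold are all hypothetical.

```python
from typing import Dict, Iterable, Iterator

# Hypothetical static reference table: each customer's six-month average
# transaction amount, as it might be read from a conventional database.
SIX_MONTH_AVG: Dict[str, float] = {"c1": 120.0, "c2": 40.0}

def flag_unusual(events: Iterable[Dict], factor: float = 3.0) -> Iterator[Dict]:
    """Compare each incoming event against the reference table as it arrives,
    yielding events well above the customer's usual level."""
    for event in events:
        avg = SIX_MONTH_AVG.get(event["customer"])
        if avg is not None and event["amount"] > factor * avg:
            yield {**event, "six_month_avg": avg}
```

Because it is a generator, results come out one record at a time as events arrive rather than after a batch load, which is the essential difference from querying a static table.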

The advantages of using SQL queries are that there are lots of SQL programmers out there and that SQL is relatively easy to write and understand. The disadvantage (in my opinion; not surprisingly, SQLStream didn’t mention this) is that SQL is really bad at certain kinds of queries, such as queries comparing subsets within the query universe and queries based on record sequence. Lack of sequencing may sound like a pretty big drawback for a stream processing system, but SQLStream compensates by letting queries specify a time “window” of records to analyze. This makes queries such as “more than three transactions in the past minute” quite simple. (The notion of “windows” is common among stream processing systems.) To handle subsets within queries, SQLStream mimics a common SQL technique of converting one complex query into a sequence of simple queries. In SQLStream terms, this means the output of one query can be a stream that is read by another query. These streams can be cascaded indefinitely in what SQLStream calls a “data flow architecture”. Queries can also call external services, such as address verification, and incorporate the results. Query results can be posted as records to a regular database table.
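The two ideas in that paragraph, a time window and a cascade where one query reads another's output stream, can be sketched in Python with generators. This is an illustration of the concepts under stated assumptions (events arrive in timestamp order, timestamps are in seconds, field names are hypothetical), not SQLStream code.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Iterator

def burst_alerts(events: Iterable[Dict], window_seconds: float = 60,
                 threshold: int = 3) -> Iterator[Dict]:
    """Stage 1: alert when a customer has more than `threshold` transactions
    within the trailing window (events must arrive in timestamp order)."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    for e in events:
        q = recent[e["customer"]]
        q.append(e["ts"])
        # Drop timestamps that have slid out of the window.
        while q and q[0] <= e["ts"] - window_seconds:
            q.popleft()
        if len(q) > threshold:
            yield {"customer": e["customer"], "ts": e["ts"], "count": len(q)}

def first_alert_only(alerts: Iterable[Dict]) -> Iterator[Dict]:
    """Stage 2: reads Stage 1's output stream and passes on only the first
    alert per customer."""
    seen = set()
    for a in alerts:
        if a["customer"] not in seen:
            seen.add(a["customer"])
            yield a
```

Chaining them as `first_alert_only(burst_alerts(events))` mirrors the "data flow architecture" idea: each stage stays simple, and the more complex logic emerges from the cascade.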

SQLStream does its actual processing by holding all the necessary data in memory. It automatically examines all active queries to determine how long data must be retained: thus, if three different queries need a data element for one, two and three minutes, the system will keep that data in memory for three minutes. SQLStream can run on 64-bit servers, allowing effectively unlimited memory, at least in theory. In practice, it is bound by the physical memory available: if the stream feeds more data than the server can hold, some data will be lost. The vendor is working on strategies to solve this problem, probably by retaining the overflow data and processing it later. For now, the company simply recommends that users make sure they have plenty of extra memory available.
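The retention rule described above (keep each record as long as the longest active window needs it) can be sketched as a small Python buffer. This is a hypothetical illustration of the rule, not how SQLStream is built. It assumes ordered timestamps in seconds and, like the product as described, does nothing about the case where the data exceeds available memory.

```python
from collections import deque
from typing import Any, Deque, Dict, List, Tuple

class RetentionBuffer:
    """Holds records only as long as the longest active query window needs them."""

    def __init__(self, query_windows: Dict[str, float]):
        # e.g. windows of 60, 120 and 180 seconds -> keep data for 180 seconds
        self.retention = max(query_windows.values())
        self.items: Deque[Tuple[float, Any]] = deque()

    def add(self, ts: float, record: Any) -> None:
        self.items.append((ts, record))
        # Evict anything older than the longest window (assumes ordered timestamps).
        while self.items and self.items[0][0] <= ts - self.retention:
            self.items.popleft()

    def window(self, now: float, seconds: float) -> List[Any]:
        """The records that a query with a shorter window would see."""
        return [r for t, r in self.items if t > now - seconds]
```

With queries needing one, two and three minutes of data, the buffer keeps three minutes, and the shorter queries simply read the most recent part of it.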

In addition to memory, system throughput depends on processing power. SQLStream currently runs on multi-core, single-server systems and is moving towards multi-node parallel processing. Existing systems process tens of thousands of records per second. By itself, this isn't a terribly meaningful figure, since capacity also depends on record size, query complexity, and data retention windows. In any case, the vendor is aiming to support one million records per second.

SQLStream was founded in 2002 and owns some basic stream processing patents. The product itself was launched only in 2008 and currently has about a dozen customers. Since the company is still seeking to establish itself, pricing is, in their words, “very aggressive”.

If you’re still reading this, you probably have a pretty specific reason for being interested in SQLStream or stream processing in general. But just in case you’re wondering “Why the heck is he writing about this in a marketing blog?” there are actually several reasons. The most obvious is that “real time analytics” and “real time interaction management” are increasingly prominent topics among marketers. Real time analytics provides insights into customer behaviors at either a group level (e.g., trends in keyword response) or for an individual (e.g., estimated lifetime value). Real time interaction management goes beyond insight to recommend individual treatments as the interaction takes place (e.g., which offer to make during a phone call). Both require the type of quick reaction to new data that stream processing can provide.

There is also increasing interest in behavior detection, sometimes called event driven marketing. This monitors customer behaviors for opportunities to initiate an interaction. The concept is not widely adopted, even though it has proven successful again and again. (For example, Mark Holtom of Eventricity recently shared some very solid research that found event-based contacts were twice as productive as any other type. Unfortunately the details are confidential, but if you contact Mark via Eventricity perhaps he can elaborate.) I don’t think lack of stream processing technology is the real obstacle to event-based marketing, but perhaps greater awareness of stream processing would stir up interest in behavior detection in general.

Finally, stream processing is important because so much attention has recently been focused on analytical databases that use special storage techniques such as columnar or in-memory structures. These require processing to put the data into the proper format. Some offer incremental updates, but in general the updates run as batch processes and the systems are not tuned for real-time or near-real-time reactions. So it’s worth considering stream processing systems as a complement that lets companies employ these other technologies without giving up quick response to new data.

I suppose there's one more reason: I think this stuff is really neat. Am I allowed to say that?

Tuesday, January 20, 2009

Salespeople: One Question Matters Most

The two clearest answers came from questions about the information salespeople want and why they don’t follow up on inquiries. By far the most desired piece of information about a lead was purchasing time frame: this was cited by 41% of respondents, compared with budget (17%), application (15%), lead score (15%) and authority (12%). I guess it’s a safe bet that salespeople jump quickly on leads who are about to purchase and pretty much ignore the others, so this finding strongly reinforces the need for nurturing campaigns that allow marketers to keep in contact with leads who are not yet ready to buy.

Note that none of the listed categories included behavioral information such as email clickthroughs or Web page visits, which demand generation vendors make so much of. I doubt they would have ranked highly had they been included. Although behavioral data provides some insights into a lead’s state of mind, it's useful to be reminded that wholly pragmatic facts about time frame are a salesperson's paramount concern.

The other clear message from the survey was that the main reason leads are not followed up is “not enough info”. This was cited by 55% of respondents, compared with 14% for “inquired before, never bought”, 12% for “no system to organize leads”, 10% for “no phone number”, 7% for "geo undesirable" and 2% because of "no quota on product". This is an unsurprising result, since (a) good information is often missing and (b) salespeople don’t like to waste time on unqualified leads. Based on the previous question, we can probably assume that the critical piece of necessary information is time frame. So this answer reinforces the importance of gathering that information and passing it on.

One set of answers that surprised me a bit was that 77% or 80% of salespeople were working with an automated lead management system, either “CRM/lead management” or “Software as a Service”. I’ve given two figures because the question was purposely asked two different ways to check for consistency. The categories don’t make much sense to me because they overlap: products like Salesforce.com are both CRM systems and SaaS. Still, this doesn't affect the main finding that nearly everyone has some type of automated system to “update lead status” and “manage your inquires” (the two different questions that were asked). This is higher market penetration than I expected, although I do recognize that those questions deal more with lead management (a traditional sales automation function) than lead generation (the province of demand generation systems). Still, to the extent that CRM systems can offer demand generation functions, there may be a more limited market for demand generation than the vendors expect.

One final interesting set of figures had to do with marketing measurement. The survey found that 23% of companies measure ROI for all lead generation tactics, 30% measure it for some tactics, and 47% don’t measure it at all. The authors of the survey report seem to find these numbers distressingly low, particularly in comparison with the 80% of companies that have a system in place and, at least in theory, are capturing the data needed for measurement. I suppose I come at this from a different perspective, having seen so many surveys over the years showing that most companies don’t do much measurement. To me, 23% measuring everything seems unbelievably high. (For example, Jim Lenskold's 2008 Marketing ROI and Measurements Study found 26% of respondents measured ROI on some or all campaigns; the combination of "some" and "all" in the SLMA study is 53%.) Either way, of course, there is plenty of room for improvement, and that's what really counts.

Monday, January 19, 2009

New Best Practices White Paper

Saturday, January 17, 2009

Best Practices for Marketing Automation and Demand Generation Campaigns

But I digress. The heart of my presentation on Wednesday ended up as a list of 37 “best practices” for marketing automation / demand generation programs. I’ll probably embed them in a white paper for the Raab Guide Web site in the near future, but for now I thought I’d share them here. (If you want the full slide deck, complete with moderately witty speaker notes, drop me an email at draab@raabassociates.com.)

A bit of context: the presentation listed a sequence of steps for marketing campaign creation, deployment and analysis. The best practices are organized around those steps.

Step 1: Gather Data. The marketer assembles information about the target audience. Best practices here involve the types of data, and, in particular, expanding beyond traditional sources.

• leads, promotions, responses, orders: these are the traditional data sources used in most marketing systems. Best practices would link actual orders back to the individual leads, and do accurate customer data integration.

• external demographics, preferences, contact names: the best practice here is to supplement internal data with external sources such as D&B, Hoovers, LexisNexis, ZoomInfo, etc. More information allows more accurate targeting and better lead scoring.

• social networks: these can be another source of contact names, and sometimes of introductions via mutual friends. A close look at what individuals have said and done in these networks could provide deep insight into a particular person’s needs, attitudes and interests, but this is more an activity for salespeople than marketers.

• summarized activity detail: marketing systems gather an overwhelming mass of detail about prospect activity, down to every click on every Web page. Best practice is to make this more usable by flagging summaries such as “three visits in the past seven days” and making them available for segmentation and event-triggered marketing.

• self-adjusting surveys: once a lead has answered a survey question, the system should automatically replace that question with a new one. This builds a richer customer profile and avoids annoying the lead by asking the same question twice. For a bonus best practice, the system should choose the next question based on user-defined rules that select the most useful question for each individual.

• order detail, payments, customer service: the best practice is to gather information beyond the basic order history from operational systems. This also allows more precise targeting and may uncover opportunities that are otherwise invisible, such as follow-up to service problems.

• near real-time updates: fast access to information about lead behaviors allows quick response, particularly in the form of event-triggered messages. This can be critical to engaging a prospect when her interest is at its peak, and before she turns to a competitor.

• household and company levels: consumers should be grouped by households and business leads by their company, division or project. This grouping permits selections and scoring based on activity of the entire group, which may display patterns that are not visible when looking at just a single individual.
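Two of the practices above, flagging summarized activity such as “three visits in the past seven days” and grouping leads at the company level, can be sketched in Python. The function names and data layout are hypothetical, and summing individual lead scores is just one possible way to roll them up.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, Iterable, List

def recent_visits_flag(visits: Iterable[datetime], now: datetime,
                       days: int = 7, min_visits: int = 3) -> bool:
    """True if the lead made at least `min_visits` visits in the last `days` days."""
    cutoff = now - timedelta(days=days)
    return sum(1 for v in visits if cutoff < v <= now) >= min_visits

def company_scores(leads: List[Dict]) -> Dict[str, int]:
    """Roll individual lead scores up to the company level."""
    totals: Dict[str, int] = defaultdict(int)
    for lead in leads:
        totals[lead["company"]] += lead["score"]
    return dict(totals)
```

The flag turns a mass of click detail into a single yes/no field that segmentation and triggers can use. The rollup shows why grouping matters: two Acme contacts scoring 10 and 15 each look weaker than one Beta contact at 20, yet Acme as a company totals 25.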

Step 2: Design Campaign. The marketer now designs the flow of the campaign itself. Traditional marketing programs use a small number of simple campaigns, each designed from scratch and often used just once. Even traditional campaigns often include multiple steps, so this itself isn’t listed separately as a new best practice.

• many specialized campaigns: the best practice marketer deploys many campaigns, each tailored to a specific customer segment or business need. These are more effective because they are more tightly targeted.

• cross sell, up sell and retention campaigns: demand generation focuses primarily on campaigns to acquire new leads. The best practice is to supplement these with campaigns that help sell more to existing customers and to retain those customers. Marketing automation has generally included these types of campaigns, at least in theory, but many firms could productively expand their efforts in these areas.

• share and reuse components (structure, rules, lists): when marketers are running many specialized campaigns, they have greater opportunity to share common components, and greater benefit from doing so. Sharing makes it possible to build more complex, sophisticated components and to ensure consistency both in how each customer is treated and in how company policies are implemented.

• new channels (search, Web ads, mobile, social): these new channels are often more efficient than traditional channels, and many have other benefits such as being easier to measure. Best practice marketers test new channels aggressively to find out what works and how they can best be used. Even if the new channels are not immediately cost-effective, marketers can limit their investment but still build some experience that will be useful later.

• multiple channels in same campaign: true multichannel campaigns contact customers through different media. A mix of media allows you to reach customers who are responsive in different channels, thereby boosting the aggregate response. Channels may also be chosen based on stated customer preferences and the nature of a particular contact. Marketing automation systems make it easy to switch between media within a single campaign.

Step 3: Develop Content. This step creates the actual marketing materials needed by each step in the campaign design. These are emails, call scripts, landing pages, brochures, and so on.

• rule-selected content blocks and best offers: content is tailored to individuals not simply by inserting data elements (“Dear [First_Name]”) but by executing rules that select different messages based on the situation. For example, a rule might send different messages based on the customer’s account balance.

• map drip-marketing message to buyer stage: best practice nurturing campaigns deliver messages that move the lead through a sequence of stages, typically starting with general information and becoming more product oriented. This is more effective than sending the same message to everyone or always sending product information.

• standard templates: messages are built using standard templates that share a desired look-and-feel and contain common elements such as headers and footers. This provides consistency, saves work, and ensures that policies are followed.

• share and reuse components (items, content blocks, images): like shared campaign components, shared marketing content minimizes the work needed to create many different, tailored campaigns. Sharing also makes it easy to deploy changes, such as a new price or new logo, without individually modifying every item in every campaign.

• unified content management system across channels: even though most marketing materials are channel-specific, many components such as images and text blocks can in fact be shared across different channels. Managing these through a single system further saves work, supports sharing, and ensures consistency.
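To make the rule-selected content idea more concrete, here is a minimal sketch in Python. It assumes a hypothetical rule table in which each rule pairs a condition with a content block ID, checked in order with the first match winning, and it uses the account-balance example from the list. The names `RULES`, `CONTENT_BLOCKS`, `select_block` and the balance thresholds are illustrative assumptions, not any vendor's actual feature.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    first_name: str
    account_balance: float


# Hypothetical rule table: (condition, content block ID), checked in order.
# The first rule whose condition is true selects the block.
RULES = [
    (lambda c: c.account_balance >= 10_000, "premium_offer"),
    (lambda c: c.account_balance > 0, "standard_offer"),
]
DEFAULT_BLOCK = "welcome_back"  # used when no rule matches

# Shared content blocks; "{name}" is the simple data-element insertion.
CONTENT_BLOCKS = {
    "premium_offer": "{name}, as one of our top customers, you qualify for our premium plan.",
    "standard_offer": "{name}, here is an upgrade offer picked for your account.",
    "welcome_back": "{name}, we'd love to see you again.",
}


def select_block(customer: Customer) -> str:
    """Return the ID of the first content block whose rule matches."""
    for condition, block_id in RULES:
        if condition(customer):
            return block_id
    return DEFAULT_BLOCK


def render(customer: Customer) -> str:
    """Fill the selected block with the customer's data."""
    return CONTENT_BLOCKS[select_block(customer)].format(name=customer.first_name)


print(render(Customer("Ana", 25_000)))
print(render(Customer("Ben", 0)))
```

Keeping the rules separate from the content blocks mirrors the sharing point above: a block can be edited once and reused by every rule and campaign that selects it.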

Step 4: Execute Campaign. The campaign is deployed to actual customers. Best practice campaigns often run continuously, rather than being executed once and then replaced with something new. This lets marketers refine them over time, testing different treatments for different conditions and keeping the winners.

• separate treatments by segment: messages and campaign flows are tailored to the needs of each segment. This could be done by creating one campaign with variations for different segments or by creating separate campaigns for each segment. Which works best depends largely on your particular marketing automation system. Either way, shared components should keep the redundant work to a minimum.

• statistical modeling for segmentation: predictive model scores can often define segments more accurately than manual segmentations. Perhaps more important, they can be less labor-intensive to create, allowing marketers to build more segments and rebuild them more often. This matters because best practice marketing involves so many specialized campaigns and is constantly adjusting to new conditions.

• change campaign flow based on responses, events, activities: best practice campaigns change lead treatments in response to their behaviors. Thus, instead of a fixed sequence of treatments, they send leads down different branches and, in some cases, move them from one campaign to another. Changes may be triggered by activities within the campaign, such as response to a message or data provided within a form, or by information recorded elsewhere and reported to the marketing automation system.

• advanced scoring (complex rules, activity patterns, event depreciation, point caps): simple lead scoring formulas are often inaccurate predictors of future behavior. Best practice scoring may involve complex calculations based on relationships among several data elements, summarized activity detail, reduced value assigned to less recent events, and caps on the number of points assigned for any single type of activity. A related challenge for system designers is making complex formulas reasonably easy to set up and understand.

• company-level scores and activity tracking: the best practice campaign can use aggregated company or household data to calculate scores, guide individual treatments, and issue alerts. This allows more appropriate treatment than looking at each individual in isolation.

• multiple scores per lead: for companies with several products, the best practice is to calculate a separate lead score for each. The scores may also have different thresholds for sending the lead to sales.

• define score formula jointly with sales: the salesperson is the ultimate judge of whether a lead is qualified. But many marketing departments still set up lead scoring formulas without sales input. Best practice is to work together on defining the criteria and then to periodically review the results to see if the formula can be improved.

• let sales return leads for more nurturing: traditional lead management is a one-way street, with leads sent from marketing to sales and then never heard from again. Best practice marketers allow salespeople to return leads to marketing for further nurturing. This improves the chances of a lead ultimately making a purchase, even if it doesn’t happen right away.
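The “event depreciation” and “point caps” ideas from the advanced scoring item can be illustrated with a short Python sketch. It assumes exponential decay with a hypothetical 30-day half-life, hypothetical point values and per-activity caps, and a hypothetical sales threshold; real systems may use linear decay, time windows or other formulas. For several products, the same function could be run with a separate points table and threshold per product.

```python
from collections import defaultdict
from datetime import date, timedelta

# Hypothetical point values per activity type.
POINTS = {"email_click": 3, "web_visit": 1, "form_fill": 10, "webinar": 15}
# Hypothetical cap on the total points any single activity type can contribute.
CAP_PER_TYPE = {"email_click": 15, "web_visit": 10, "form_fill": 20, "webinar": 30}
HALF_LIFE_DAYS = 30   # assumed: an activity loses half its value every 30 days
SALES_THRESHOLD = 40  # assumed score at which the lead goes to sales


def decayed(points: float, age_days: int, half_life: float = HALF_LIFE_DAYS) -> float:
    """Exponential depreciation: the value halves every half_life days."""
    return points * 0.5 ** (age_days / half_life)


def score(activities, today: date) -> float:
    """activities: iterable of (activity_type, date). Returns the capped, decayed score."""
    by_type = defaultdict(float)
    for kind, when in activities:
        by_type[kind] += decayed(POINTS.get(kind, 0), (today - when).days)
    # Apply the cap per activity type, then sum across types.
    return sum(min(total, CAP_PER_TYPE.get(kind, 0)) for kind, total in by_type.items())


today = date(2009, 3, 1)
acts = [("web_visit", today)] * 10                      # 10 points, exactly at the cap
acts += [("form_fill", today - timedelta(days=30))]     # 10 points decayed to 5
acts += [("webinar", today)]                            # 15 points
lead_score = score(acts, today)
print(lead_score, lead_score >= SALES_THRESHOLD)
```

Because of the cap, twenty web visits would add no more than ten, which keeps a single heavy browser from looking like a qualified buyer.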

Step 5: Analyze Results. Learning from past campaigns may be the most important best practice of all. Having many targeted campaigns allows for continuous incremental improvement, achieved by quickly evaluating the return on each project and adjusting future programs based on the results.

• advanced response attribution: traditional methods often credit a lead to whichever campaign contacted them first, or whichever generated the first response. Best practice marketers look more deeply at the factors which may have influenced a lead’s behavior, often applying sophisticated analytics to estimate the incremental impact of different campaigns.

• standard metrics, within and across channels: resources can only be allocated to their optimal use if return on investment can be compared across campaigns. This requires standard metrics, which must be calculated consistently and clearly understood throughout the organization.

• formal test designs (a/b, multivariate): traditional marketers often do little testing, and the tests they do are often poorly designed. Best practice marketing involves continuous, formal testing designed to answer specific questions and lead to actionable results.

• capture immediate and long-term results: initial response rate or cost per lead fails to take into account the value of the leads generated, which can differ hugely from campaign to campaign. Best practice requires measuring the long-term value and building it into standard campaign metrics.

• evaluate on customer profitability, not revenue: customers with the same revenue can vary greatly in the actual profit they bring to the company, depending on the profit margins of their purchases and other costs such as customer support. Best practice metrics include accurate profitability measures, preferably drawn from an activity-based costing system.

• continually assess and reallocate spending: best practice marketers have a formal process to shift resources to the most productive marketing investments. These will change as campaigns are refined, business conditions evolve, and new opportunities emerge. A formal assessment process is essential because organizations otherwise tend to resist change.
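As one example of a formal a/b test design, the Python sketch below applies a standard two-proportion z-test to response rates from two test cells. The cell sizes and response counts in the usage example are made up for illustration, and a real test plan would also fix the sample size and significance level before the campaign runs.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two response rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # response rate if both cells were the same
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical test: control cell responds at 2.0%, new treatment at 2.6%.
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be chance; it does not by itself say whether the winning treatment also produces more valuable leads, which is why long-term results still need to be captured.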

Infrastructure. Individual campaigns are made possible by an underlying infrastructure that has best practices of its own.

• consolidated systems (multi-channel content management, campaign management and analytics): today’s marketing systems can usually handle multiple channels, so decommissioning older channel-based systems may save money as well as make multi-channel campaigns easier to execute. Consolidated multi-channel analytics, which may occur outside of the marketing automation system, are particularly important for gaining a complete view of each customer.

• advanced system training: marketing departments often provide workers with the minimum training needed to gain competency in their tools. Best practice departments recognize that additional training can make users more productive, particularly as the tools themselves add new capabilities that users would otherwise not be able to exploit.

• advanced analytics training: analytics play a central role in the continuous improvement process. Solid analytics training ensures that users can set up proper tests and interpret the results. Because data and tools are often already available, lack of training is frequently the main obstacle that prevents marketers from using analytics effectively.

• formal processes: best practice marketers develop, document and enforce formal, consistent business processes. This both ensures that work is done efficiently and makes it possible to execute changes when opportunities arise.

• cross-department cooperation: working with sales, service, finance and other departments is essential to sharing systems, data and metrics. A cross-department perspective ensures that each department considers the impact of its decisions on the rest of the company and on the customers themselves.

Summary

The best practice vision is many marketing campaigns, each precisely targeted, efficiently executed, and carefully designed to yield the greatest possible value. The campaigns are supported by detailed analysis to understand results and identify potential improvements. This information is quickly fed into new campaigns, ensuring that the company continually evolves its approaches and makes the best possible use of marketing resources. Continuous optimization is the ultimate best practice that all other practices should support.

Thursday, January 08, 2009

Company-Level Data in Demand Generation Systems

There are really two separate issues at play. The first is how the demand generation system treats company-level data. This, to start at the very beginning, is data about the company associated with an individual. Typically this is the company they work for, although there might be another relationship such as consultant or services vendor. From a database design standpoint, having one company record that is linked to multiple individuals avoids redundant data and, therefore, potential inconsistencies between company data for different people. (The technical term for this is “normalization”, meaning that the database is designed so a given piece of information is stored only once.) Some of the demand generation systems do indeed have a separate company table: it is one of the marks of a sophisticated design.
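A minimal Python sketch of the normalized design: one company record, linked by ID to several individuals, so a company attribute is stored once. The class and field names (`Company`, `Individual`, `company_id`) and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Company:
    company_id: int
    name: str
    industry: str


@dataclass
class Individual:
    email: str
    company_id: int  # link to the single Company record, not a copy of its data


companies = {1: Company(1, "Acme Corp", "Manufacturing")}
people = [Individual("ann@acme.example", 1), Individual("bob@acme.example", 1)]


def industry_of(person: Individual) -> str:
    """Look up company data through the link instead of storing it on the person."""
    return companies[person.company_id].industry


# A single update to the company record is seen by every linked individual.
companies[1].industry = "Industrial Equipment"
print([industry_of(p) for p in people])
```

In a design without a company table, the industry would be copied onto each individual record, and the update would have to be repeated for Ann and Bob separately, which is where inconsistencies creep in.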

But the wrinkle here is that most CRM systems in general, and Salesforce.com in particular, also have separate company and individual levels. In fact, Salesforce.com actually has two types of individuals: “leads” which are unattached individuals, and “contacts”, which are individuals associated with an “account” (typically a company, although it might be a smaller entity such as a division or department). Most demand generation systems make little distinction between CRM “leads” and “contacts”, converting them both to the same record type when data is loaded or synchronized.

The common assumption among demand generation vendors is that the CRM system is the primary source of information for which individuals are associated with which companies and for the company information itself. This makes sense, given that the salespeople who manage the CRM data are much closer to the companies and individuals than the marketers who run the demand generation system. Demand generation systems therefore generally import the company / individual relationships as part of their synchronization process. Systems with a separate company table store the imported company data in it; those without a separate company table copy the (same) company data onto each individual record. So far so good.

However, this raises the question of whether the demand generation system should be permitted to override company / individual relationships defined in the CRM system or to edit the company (or, for that matter, individual) data itself. I have to get back to the vendors and ask the question, but I believe that most vendors do NOT let the demand generation system assign individuals to companies or change the imported relationships. (In at least some cases, users can choose whether or not to allow such changes.) Interestingly enough, these limits apply even to systems that infer a visitor’s company from their IP address and show it in reports. Whether demand generation can change company-level data and have those changes flow back into the CRM system is less clear: again, it may be a matter of configuration in some products. The vendors who don’t provide this capability will argue, probably correctly, that few marketers really want to do this or, in fact, should do it.

So what information DOES originate in the demand generation system? Basically, it is new individuals, which correspond to “lead” records in Salesforce.com, and attributes for existing individuals, which may relate to either CRM “leads” or “contacts”. The demand generation system may also capture company information, but this is stored on the individual record and kept distinct from the company information in the CRM system. When a new individual is sent from demand generation to the CRM system, it is set up in CRM as a “lead” (that is, unattached to a company). The CRM user can later convert it to a contact within an account. But the demand generation system cannot generally set up accounts and contacts itself. (Again, let me stress that there may be some exceptions—I’ll let you know when I find out.)

Bottom line: leads, lead data and contact data may originate in either demand generation or CRM, and the synchronization is truly bi-directional in that changes made in either system will be copied to the other. But accounts and account / contact relationships are only maintained in the CRM system: most demand generation systems simply copy that data and don’t allow changes. Thus, it is essentially a unidirectional synchronization.
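The typical arrangement described above can be summarized as a per-record-type sync policy. The Python sketch below is a hypothetical illustration, not any vendor's actual configuration: lead and contact changes may flow in both directions, account data and account / contact links flow only from CRM, and a new individual created in demand generation is pushed to CRM as an unattached lead.

```python
from enum import Enum


class Direction(Enum):
    BIDIRECTIONAL = "both"
    CRM_TO_DG = "crm_only"


# Hypothetical policy reflecting the typical arrangement described above.
SYNC_POLICY = {
    "lead": Direction.BIDIRECTIONAL,
    "contact": Direction.BIDIRECTIONAL,
    "account": Direction.CRM_TO_DG,
    "contact_account_link": Direction.CRM_TO_DG,
}


def may_push_to_crm(record_type: str) -> bool:
    """True if a change made in demand generation may flow back to CRM."""
    return SYNC_POLICY.get(record_type) is Direction.BIDIRECTIONAL


def push_new_individual(person: dict) -> dict:
    """Create the CRM record for a new individual: always a lead, never linked to an account."""
    record = {k: v for k, v in person.items() if k != "account_id"}
    record["type"] = "lead"
    return record


print(may_push_to_crm("contact"), may_push_to_crm("account"))
print(push_new_individual({"email": "dana@example.com", "account_id": 7}))
```

Unknown record types default to not being pushed, which matches the cautious stance most vendors appear to take.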

This leads us to the second issue, which is company-level reporting. It’s touted by most demand generation vendors, but a closer look reveals that some actually rely on the account-level reporting in Salesforce.com to deliver it. This isn’t necessarily a problem, since the Salesforce.com report can incorporate activities captured by the demand generation system. Per the earlier discussion, these activities will be linked to individuals (leads or contacts in Salesforce.com terms). Of course, if the individuals have not been linked to a company in the CRM system, they cannot be included in company-level reports.
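The last point can be shown with a small Python sketch of company-level rollup. It assumes a hypothetical activity log keyed by individual and a hypothetical map of individual-to-account links taken from CRM; any individual without a link is counted separately because their activity cannot be attributed to a company.

```python
from collections import Counter

# Hypothetical activity log captured by the demand generation system.
activities = [
    ("ann@acme.example", "web_visit"),
    ("bob@acme.example", "email_click"),
    ("bob@acme.example", "form_fill"),
    ("cara@unknown.example", "web_visit"),
]

# Hypothetical individual-to-account links maintained in CRM.
# Cara is still an unattached lead, so she has no entry.
account_of = {"ann@acme.example": "Acme Corp", "bob@acme.example": "Acme Corp"}


def company_activity_counts(activities, account_of):
    """Roll individual activities up to accounts; count those that cannot be linked."""
    counts = Counter()
    unlinked = 0
    for person, _kind in activities:
        account = account_of.get(person)
        if account is None:
            unlinked += 1  # no CRM link, so no company to report under
        else:
            counts[account] += 1
    return counts, unlinked


counts, unlinked = company_activity_counts(activities, account_of)
print(dict(counts), unlinked)
```

Cara’s visit is real activity, but it stays invisible in the company report until a CRM user converts her lead into a contact under an account.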