What really sets QlikView apart is its scripting language, which lets analysts build processing streams to combine and transform multiple data sources. Although QlikView is no match for enterprise-class data integration tools like Informatica, its scripts allow data preparation far too complex to repeat regularly in Excel. (See my post "What Makes QlikTech So Good" for more on this.)

Lyzasoft Lyza is the first product I’ve seen that might give QlikView a serious run for its money. Lyza doesn’t have scripts, but users can achieve similar goals by building step-by-step process flows to merge and transform multiple data sources. The flows support different kinds of joins and Excel-style formulas, including if statements and comparisons to adjacent rows. This gives Lyza enough power to do most of the manipulations an analyst would want in cleaning and extending a data set.
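To make those operations concrete, here is a minimal Python sketch of a two-step flow: an inner join of two data sources, followed by an Excel-style IF column and a comparison with the adjacent row. The data, field names and functions are hypothetical, and Lyza itself builds such steps by dragging objects rather than by writing code.

```python
# Hypothetical sources: order rows and a customer lookup table.
orders = [
    {"customer_id": 1, "month": 1, "amount": 120.0},
    {"customer_id": 1, "month": 2, "amount": 90.0},
    {"customer_id": 2, "month": 1, "amount": 300.0},
]
customers = {1: {"region": "East"}, 2: {"region": "West"}}


def inner_join(rows, lookup, key):
    """Keep only rows whose key exists in the lookup table, merging its fields."""
    return [{**row, **lookup[row[key]]} for row in rows if row[key] in lookup]


def add_flags(rows):
    """Add an IF-style column and a change-from-previous-row column."""
    out = []
    prev = None
    for row in rows:
        new = dict(row)
        # Equivalent of IF(amount >= 100, "Y", "N")
        new["big_order"] = "Y" if row["amount"] >= 100 else "N"
        # Compare with the adjacent (previous) row, only within the same customer
        if prev is not None and prev["customer_id"] == row["customer_id"]:
            new["change"] = row["amount"] - prev["amount"]
        else:
            new["change"] = None
        out.append(new)
        prev = row
    return out


result = add_flags(inner_join(orders, customers, "customer_id"))
for r in result:
    print(r)
```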

Lyza also has the unique and important advantage of letting users view the actual data at every step in the flow, the way they’d see rows on a spreadsheet. This makes it vastly easier to build a flow that does what you want. The flows can also produce reports, including tables and different kinds of graphs, which would typically be the final result of an analysis project.

All of that is quite impressive and makes for a beautiful demonstration. But plenty of systems can do cool things on small volumes of data – basically, they throw the data into memory and go nuts. Everything about Lyza, from its cartoonish logo to its desktop-only deployment to the online store selling at a sub-$1,000 price point, led me to expect the same. I figured this would be another nice tool for little data sets – which to me means 50,000 to 100,000 rows – and nothing more.

But it seems that’s not the case. Lyzasoft CEO Scott Davis tells me the system regularly runs data sets with tens of millions of rows, and the biggest he’s used had 591 million rows, around 7.5 to 8 GB.

A good part of the trick is that Lyza is NOT an in-memory database. This means it’s not bound by the workstation’s memory limits. Instead, Lyza uses a columnar structure with indexes on non-numeric fields. This lets it read required data from the disk very quickly. Davis also said that in practice most users either summarize or sample very large data sets early in their data flows to get down to more manageable volumes.
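The general idea behind a columnar layout with indexes can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Lyza's actual storage format: each column is held as its own list (on disk it would be its own file), and every non-numeric column gets a hypothetical inverted index mapping each value to the row numbers where it appears. A query then touches only the columns it needs.

```python
from collections import defaultdict


class ColumnStore:
    """Hypothetical toy column store: one list per column, plus an
    inverted index (value -> row ids) for each non-numeric column."""

    def __init__(self, rows):
        self.columns = {}
        self.indexes = {}
        names = rows[0].keys() if rows else []
        for name in names:
            values = [row[name] for row in rows]
            self.columns[name] = values
            # Index only columns that contain non-numeric values
            if not all(isinstance(v, (int, float)) for v in values):
                index = defaultdict(list)
                for row_id, v in enumerate(values):
                    index[v].append(row_id)
                self.indexes[name] = dict(index)

    def select(self, column, where_column, equals):
        """Read just two columns: use the index to find rows, then fetch values."""
        row_ids = self.indexes[where_column].get(equals, [])
        col = self.columns[column]
        return [col[i] for i in row_ids]


store = ColumnStore([
    {"state": "MA", "sales": 10},
    {"state": "NY", "sales": 5},
    {"state": "MA", "sales": 7},
])
print(store.select("sales", "state", "MA"))  # [10, 7]
```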

Summarizing the data seems a lot like cheating when you’re talking about scalability, so that didn’t leave me very convinced. But you can download a free 30-day trial of Lyza, which let me test it myself.

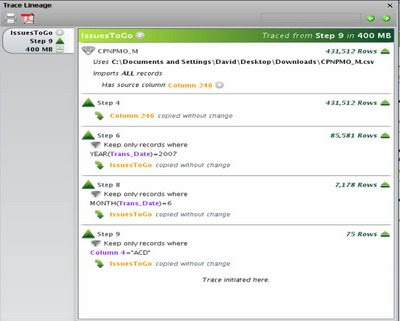

Bottom line: my embarrassingly ancient desktop (2.8 GHz CPU, 2 GB RAM, Windows XP) loaded a 400 MB CSV file with about 430,000 rows in just over 6 minutes. That’s somewhat painful, but it does suggest you could load 4 GB in an hour – a practical if not exactly desirable period. The real issue is that each subsequent step could take similar amounts of time: copying my 400 MB set to a second step took a little over 2 minutes and, more worrisome, subsequent filters took the same 2 minutes even though they reduced the record count to 85,000, then 7,000, then 50. This means a complete processing flow on a large data set could run for hours.
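The extrapolation can be checked with a little arithmetic. The sketch below assumes load and step times scale linearly with data size, rounds "just over 6 minutes" and "a little over 2 minutes" down to 6 and 2, and assumes each later step costs as much as the first copy did, as the filters in this test did. The five-step flow is hypothetical.

```python
LOAD_MB, LOAD_MIN = 400, 6.0  # initial CSV load, rounded down
STEP_MB, STEP_MIN = 400, 2.0  # copy or filter step, rounded down


def flow_minutes(data_mb, n_steps):
    """Estimate one load plus n_steps later steps, assuming every step
    costs as much as a full pass over the loaded data."""
    load = data_mb / (LOAD_MB / LOAD_MIN)
    steps = n_steps * data_mb / (STEP_MB / STEP_MIN)
    return load + steps


print(round(flow_minutes(4000, 0)))  # 60: load only, treating 4 GB as 4,000 MB
print(round(flow_minutes(4000, 5)))  # 160: load plus five hypothetical steps
```

On those assumptions, 4 GB loads in about an hour and a modest five-step flow takes well over two and a half hours.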

Still, a typical real-world scenario would be to do development work on small samples, and then only run a really big flow once you knew you had it right. So even the slow processing of subsequent steps is not necessarily a show-stopper.

Better news is that rerunning an existing filter with slightly different criteria took just a few seconds, and even rerunning the existing flow from the start was much faster than the first time through. Users can also rerun all steps after a given point in the flow. This works because Lyza saves the intermediate data sets. It means that analysts can efficiently explore changes or extend an existing project without waiting for the entire flow to re-execute. It’s not as nice as running everything on a lightning-fast data server, but most analysts would find it gives them all the power they need.
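Saving intermediate data sets is what makes partial reruns cheap. The hypothetical Python class below (not Lyza's API) keeps each step's output, so changing the criteria at step k reruns only step k and the steps after it. It assumes the whole flow has been run once before any partial rerun.

```python
class Flow:
    """Hypothetical step-by-step flow that saves every step's output."""

    def __init__(self, source, steps):
        self.source = source     # the loaded data set
        self.steps = list(steps) # functions: list of rows -> list of rows
        self.saved = []          # saved output of each step
        self.runs = 0            # counts step executions, for illustration

    def run_from(self, k=0):
        """Rerun steps k..end, starting from the saved output of step k-1."""
        data = self.source if k == 0 else self.saved[k - 1]
        del self.saved[k:]
        for step in self.steps[k:]:
            data = step(data)
            self.runs += 1
            self.saved.append(data)
        return data

    def replace_step(self, k, step):
        """Change one step (e.g. new filter criteria) and rerun from there."""
        self.steps[k] = step
        return self.run_from(k)


flow = Flow(list(range(1, 101)), [
    lambda d: [x for x in d if x % 2 == 0],  # filter: even values
    lambda d: [x for x in d if x > 50],      # filter: values above 50
    lambda d: [x * 10 for x in d],           # derived field
])
flow.run_from(0)                                       # runs all three steps
flow.replace_step(2, lambda d: [x * 100 for x in d])   # reruns only the last step
print(flow.runs)  # 4
```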

As a point of comparison, loading that same 400 MB CSV file took almost 11 minutes with QlikView. I had forgotten how slowly QlikView loads text files, particularly on my limited CPU. On the other hand, loading a 100 MB Excel spreadsheet took about 90 seconds for Lyza vs. 13 seconds in QlikView. QlikView also compressed the 400 MB to 22 MB on disk and about 50 MB in memory, whereas Lyza more than doubled the data to 960 MB on disk, due mostly to indexes. Memory consumption in Lyza rose only about 10 MB.
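Expressed as ratios of the original 400 MB file, those sizes look like this. The figures are the ones measured above; the helper function is just for illustration.

```python
RAW_CSV_MB = 400  # size of the test file


def compression_ratio(stored_mb, raw_mb=RAW_CSV_MB):
    """Raw size divided by stored size: above 1 means compression,
    below 1 means the stored form is larger than the source file."""
    return raw_mb / stored_mb


measurements = {
    "QlikView, on disk": 22,
    "QlikView, in memory": 50,
    "Lyza, on disk": 960,
}
for label, stored_mb in measurements.items():
    print(f"{label}: {compression_ratio(stored_mb):.1f}:1")
```

QlikView comes out at roughly 18:1 on disk and 8:1 in memory, while Lyza's 0.4:1 is the same as a 2.4x expansion.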

Of course, compression ratios for both QlikView and Lyza depend greatly on the nature of the data. This particular set had lots of blanks and Y/N fields. The result was much more compression than I usually see in QlikView and, I suspect, more expansion than usual in Lyza. In general, Lyza seems to make little use of data compression, which is usually a key advantage of columnar databases. Although this seems like a problem today, it also means there's an obvious opportunity for improvement as the system finds itself dealing with larger data sets.
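Two generic columnar techniques show why blanks and Y/N fields compress so well: dictionary encoding replaces each distinct value with a small integer code, and run-length encoding collapses repeated codes into (code, count) pairs. This is a sketch of the general techniques, not a description of how QlikView or Lyza actually store data; the sample column is hypothetical.

```python
from itertools import groupby


def dictionary_encode(values):
    """Replace each distinct value with a small integer code."""
    codes = {}
    encoded = []
    for v in values:
        if v not in codes:
            codes[v] = len(codes)
        encoded.append(codes[v])
    return codes, encoded


def run_length_encode(codes):
    """Collapse runs of identical codes into (code, run_length) pairs."""
    return [(code, len(list(group))) for code, group in groupby(codes)]


column = ["N"] * 6 + ["Y"] * 2 + [""] * 8  # a hypothetical Y/N/blank column
dictionary, encoded = dictionary_encode(column)
runs = run_length_encode(encoded)
print(dictionary)  # {'N': 0, 'Y': 1, '': 2}
print(runs)        # [(0, 6), (1, 2), (2, 8)]
```

Sixteen values shrink to three runs. Real data rarely sorts this neatly, but columns with only a few distinct values still compress far better than free text.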

What I think this boils down to is that Lyza can effectively handle multi-gigabyte data volumes on a desktop system. The only reason I’m not being more definite is I did see a lot of pauses, most accompanied by 100% CPU utilization, and occasional spikes in memory usage that I could only resolve by closing the software and, once or twice, by rebooting. This happened when I was working with small files as well as the large ones. It might have been the auto-save function, my old hardware, crowded disk drives, or Windows XP. Then again, Lyza is a young product (released September 2008) with only a dozen or so clients, so bugs would not be surprising. I'm certainly not ready to say Lyza doesn't have them.

Tracking down bugs will be harder because Lyza also runs on Linux and Mac systems. In fact, judging by the Mac-like interface, I suspect it wasn't developed on a Windows platform. According to Davis, performance isn’t very sensitive to adding memory beyond 1 GB, but high-speed disk drives do help once you get past 10 million rows or so. The absolute limit on a 32-bit system is about 2 billion rows, a constraint related to addressable memory space (2^31 is about 2.1 billion) rather than anything peculiar to Lyza. Lyza can also run on 64-bit servers and is certified on Intel multi-core systems.
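The arithmetic behind the limit is simple. In the comments below, Davis adds that the per-column limit is the smaller of 2 billion records or 2 GB, so the practical row count depends on how wide each value is. The sketch assumes 2 GB means 2 × 2^30 bytes; the function name is hypothetical.

```python
ROW_LIMIT = 2**31              # "about 2 billion": 2,147,483,648
COLUMN_BYTE_LIMIT = 2 * 2**30  # 2 GB per column, assuming binary gigabytes


def max_rows_per_column(bytes_per_value):
    """Rows one column can hold: the smaller of the record limit and
    how many values of the given width fit in the per-column byte limit."""
    return min(ROW_LIMIT, COLUMN_BYTE_LIMIT // bytes_per_value)


print(f"{ROW_LIMIT:,}")  # 2,147,483,648
for width in (1, 4, 8):
    print(width, f"{max_rows_per_column(width):,}")
```

With one-byte values the two limits coincide; with eight-byte values a single column tops out near 268 million rows.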

Enough about scalability. I haven’t done justice to Lyza’s interface, which is quite good. Most actions involve dragging objects into place, whether to add a new step to a process flow, move a field from one flow stage to the next, or drop measures and dimensions onto a report layout. Being able to see the data and reports instantly is tremendously helpful when building a complex processing flow, particularly if you’re exploring the data or trying to understand a problem at the same time. This is exactly how most analysts work.

Lyza also provides basic statistical functions including descriptive statistics, correlation and Z-test scores, a mean vs. standard deviation plot, and stepwise regression. This is nothing for SAS or SPSS to worry about; in fact, even Excel has more options. But it’s enough for most purposes. Similarly, data visualization is limited compared to a Tableau or ADVIZOR, but allows some interactive analysis and is more than adequate for day-to-day purposes.

Users can combine several reports onto a single dashboard, adding titles and effects similar to a PowerPoint slide. The report remains connected to the original workflow but doesn’t update automatically when the flow is rerun.

Intriguingly, Lyza can also display the lineage of a table or chart value. It traces the data from its source through all subsequent workflow steps, listing any transformations or selections applied along the way. Davis sees this as quickly answering the ever-popular question, “Where did that number come from?” Presumably this will leave more time to discuss American Idol.
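Lineage tracing of this kind amounts to walking a chain of step records from a result back to its source. Here is a minimal Python sketch, assuming each step records its parent and a description of what it did; the step names are hypothetical, and a join step would need two parents, turning the chain into a tree.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    name: str
    description: str
    parent: Optional["Step"] = None


def lineage(step):
    """Walk from a result back to its source; return the chain source-first."""
    chain = []
    while step is not None:
        chain.append(f"{step.name}: {step.description}")
        step = step.parent
    return list(reversed(chain))


source = Step("load", "read sales.csv")
filtered = Step("filter", "keep region == 'East'", source)
summary = Step("summarize", "sum(amount) by month", filtered)
for line in lineage(summary):
    print(line)
```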

Users can also link one workflow to another by simply dragging an object onto a new worksheet. This is a very powerful feature, since it lets users break big workflows into pieces and lets one workflow feed data into several others. The company has just taken this one step further by adding a collaboration server, Lyza Commons, that lets different users share workflows and reports. Reports show which users send data to and receive data from other users, as well as which data sets send information to and receive information from other data sets.

Those reports are more than just neat: they're documenting data flows that are otherwise lost in the “shadow IT” which exists outside of formal systems in most organizations. Combined with lineage tracing, this is where IT departments and auditors should start to find Lyza really interesting.

A future version of Commons will also let non-Lyza users view Lyza reports over the Web – further extending Lyza beyond the analyst’s personal desktop to be an enterprise resource. Add in the 64-bit capability, an API to call Lyza from other systems, and some other tricks the company isn’t ready to discuss in public, and there’s potential here to be much more than a productivity tool for analysts.

This brings us back to pricing. If you were reading closely, you noticed that little comment about Lyza being priced under $1,000. Actually there are two versions: a $199 Lyza Lite that only loads from Microsoft Excel, Access and text files, and the $899 regular version that can also connect to standard relational databases and other ODBC sources and includes the API.

This isn’t quite as cheap as it sounds because these are one year subscriptions. But even so, it is an entry cost well below the several tens of thousands of dollars you’d pay to get started with full versions of QlikView or ADVIZOR, and even a little cheaper than Tableau. The strategy of using analysts’ desktops as a beachhead is obvious, but that doesn’t make it any less effective.

So, should my friends at QlikView be worried? Not right away – QlikView is a vastly more mature product with many features and capabilities that Lyza doesn’t match, and probably can’t unless it switches to an in-memory database. But analysts are QlikView’s beachhead too, and there’s probably not enough room on their desktops for both systems. With a much lower entry price and enough scalability, data manipulation and analysis features to meet analysts’ basic needs, Lyza could be the easier one to pick. And that would make QlikView's growth much harder.

----------------------------

*ADVIZOR Solutions and Tableau Software have excellent visualization with an in-memory database, although they’re not so scalable. PivotLink, Birst and LucidEra are on-demand systems that are highly scalable, although their visualization is less sophisticated. Here are links to my reviews: ADVIZOR, Tableau, PivotLink, Birst and LucidEra.

6 comments:

I agree and made the same point in my own blog at

http://jeromepineau.blogspot.com

However, I have NOT been able to load any significantly large amounts of data with Lyza -- anything over a few dozen megs and it becomes just unusable.

This is an interesting post. Thanks.

A few comments:

1. The main disadvantage Lyza has is that it cannot generate interactive documents. Its reports are rendered images that cannot be modified (slicing and dicing, etc.), only re-rendered when new data is imported.

2. The size limit on 32-bit cannot be 2 billion rows. Lyza uses a columnar database, so the size limit is 2 billion bytes of data, not 2 billion rows. 2 billion rows would only be true if your data had a single field with 2 billion values, each one byte in size.

Elad Israeli

SiSense

www.sisense.com

Excellent post, thoroughly researched.

I will offer the following replies to the comments on scalability, performance, and interactivity:

1. Scalability: Elad's comment is based upon a misunderstanding of Lyza's storage architecture. The PER COLUMN limit is currently the smaller of 2 billion records or 2 GB, but the user can have N workflows with N steps and N tables all of which have N columns of that size -- up to the sum total of all disk space available.

2. Performance: Jerome's problem with performance was related to an unusual datasource/dataset, and an enhancement to Lyza was released shortly thereafter in response to address his needs. Lyzasoft releases performance improvements routinely, and we're happy to share performance benchmarks if anyone is interested.

3. Interactivity: It is true that drill-thru interactivity with Lyza report objects is currently limited. Interactivity with distributed objects is a valuable feature, and Lyzasoft will be releasing that enhancement before the end of 2009 -- for people consuming reports through Lyza itself and for those consuming reports through web browsers.

We appreciate the feedback, and we're working hard to deliver all the great ideas and suggestions Lyzasoft tryers, beta members, and customers have provided. We release new features, performance tweaks, and bug fixes several times per month -- so it's a safe bet that what you see at any point in time is a lot better than it was a few weeks earlier.

Scott Davis

Founder

Lyzasoft

scott@lyzasoft.com

Scott,

Thank you for your response. I keep track of Lyza because I like the product personally (as a BI freak), even though you and we (SiSense) are not after the same markets and I don't really see Lyza as a competitor.

Truth be told, I believe that you and QlikView aren't after the same markets either.

From a technical standpoint, what you say would be practical if I had unlimited RAM on my desktop. Sadly, that is not the case. Both of our lives would be easier if it were :-)

You may be physically constrained only by the size of the disk, but that is not really the limit. In-memory technology (columnar or otherwise) is only effective if the calculations can be performed entirely in-memory. If you exceed available memory and start dumping data onto the hard-drive, in-memory technology loses its edge significantly.

So, if you have a 1TB hard drive, that does not realistically mean that you can work with 1 TB of data. As soon as your memory runs out, performance would degrade exponentially, no matter what in-memory technology you use, simply because you would require hard drive read/writes to partition the data.

The true challenge with all types of in-memory technologies (this includes yours, QlikView's and ours) is how to use as little memory as possible to describe as much data as possible, as well as how to perform the calculations themselves without taking up too much memory.

Because Lyza markets itself as a desktop analysis tool for power analysts (read The 451 Group's report about you guys), the columnar technique you use is probably practical in many cases (most users really don't need access to tens of millions of rows of raw data).

So, that said, good luck and I hope we get to chat again, as one BI enthusiast to another :-)

Elad Israeli

CEO

SiSense

Elad: Lyza is not an in-memory application. It reads and writes to the disk without buffering everything into memory. Always. The "magic" of Lyza technology is to make the experience feel relatively fluid (albeit not as fast as in-memory) WITHOUT relying on RAM, since most workstations don't have very much. This makes Lyza much, much more scalable than the other products you mention. Hope that helps, but let me know if you'd like to see a demo with a memory-monitor running in real-time.

Scott

It will be my pleasure and honor to take you up on your offer, Scott.

If it is alright with you, I will contact you offline.

Elad

elad(at)sisense.com
