Can we retire Lego blocks as an analogy for API connections? Apart from being a cliché that’s as old as the hills and tired as a worn-out shoe, it gives a false impression of how easy such connections are to make and manage.

Very simply, all Lego block connectors are exactly the same, while API connections can vary greatly. This means that while you can plug any Lego block into any other Lego block and know it will work correctly, you have to look very carefully at each API connector to see what data and functions it supports, and how these align with your particular requirements. Often, getting the API to do what you need will involve configuration or even customization – a process that can be both painstaking and time consuming. When was the last time you set parameters on a Lego block?

There’s a second way the analogy is misleading. Lego blocks are truly interchangeable: if you have two blocks that are the same size and shape, they will do exactly the same thing (which is to say, nothing; they’re just solid pieces of plastic). But no two software applications are exactly the same. Even if they used the same API, they would have different internal functions, performance characteristics, and user interfaces. Anyone who has tried to pick a WordPress plug-in or smartphone app knows the choice is never easy because there are so many different products. Some research is always required, and the more important the application, the more important it is to be sure you select a product that meets your needs.

This is why companies (and individuals) don’t constantly switch apps that do the same thing: there’s a substantial cost to researching a new choice and then learning how to use it. So people stick with their existing solution even if they know better options are available. Or, more precisely, they stick with their existing solution until the value gained from changing to a new one is higher than the cost of making a switch. It turns out that’s often a pretty high bar to meet, especially because the limiting factor is the time of the person or people who have to make the switch, and very often those people have other, higher priority tasks to complete first.

I’ve made these points before, although they do bear repeating at a time when “composability” is offered as a brilliant new concept rather than a new label for microservices or plain old modular design. But the real reason I’m repeating them now is that I’ve seen the Lego block analogy applied to software that users build for themselves with no-code tools or artificial intelligence. The general argument is that those technologies make it vastly easier to build software applications, and that those applications can easily be connected to create new business processes.

The problem is, that’s only half right. Yes, the new tools make it vastly easier for users to build their own applications. But easily connected?

Think of the granddaddy of all no-code tools, the computer spreadsheet. An elaborate spreadsheet is an application in any meaningful sense of the term, and many people build wonderfully elaborate spreadsheets. But those spreadsheets are personal tools: while they can be shared and even connected to form larger processes, there’s a severe limit to how far they can move beyond their creator before they’re used incorrectly, errors creep in, and small changes break the connection of one application to another.

In fact, those problems apply to every application, regardless of who built it or what tool they used. They can only be avoided if strict processes are in place to ensure documentation, train users, and control changes. The problem is actually worse if it’s an AI-based application where the internal operations are hidden in a way that spreadsheet formulas are not.

And don’t forget that moving data across all those connections has costs of its own. While the data movement costs for any single event can be tiny, they add up when you have thousands of connections and millions of events. This report from Amazon Prime Video shows how they reduced costs by 90% by replacing a distributed microservices approach with a tightly integrated monolithic application. Look here for more analysis. Along related lines, this study found that half of “citizen developer” programs are unsuccessful (at least according to CIOs), and that custom solutions built with low-code tools are cheaper, faster, better tailored to business needs, and easier to change than systems built from packaged components. It can be so messy when sacred cows come home to roost.

In other words, what’s half right is that application building is now easier than ever. What’s half wrong is the claim that applications can easily be connected to create reliable, economical, large-scale business processes. Building a functional process is much harder than connecting a pile of Lego blocks.

There’s one more, still deeper problem with the Lego analogy. It leads people to conceive of applications as distinct units with fixed boundaries. This is problematic because it creates a hidden rigidity in how businesses work. Imagine all the issues of selection cost, connection cost, and functional compatibility suddenly vanished, and you really could build a business process by snapping together standard modules. Even imagine that the internal operations of those modules could be continuously improved without creating new compatibility issues. You would still be stuck with a process that is divided into a fixed set of tasks and executes those tasks either independently or in a fixed sequence, where the output of one task is input to the next.

That may not sound so bad: after all, it’s pretty much the way we think about processes. But what if there’s an advantage to combining parts of two tasks so they interact? If the task boundaries are fixed, that just can’t be done.

For example, most marketing organizations create a piece of content by first building a text, and then creating graphics to illustrate that text. You have a writer here, and a designer there.

The process works well enough, but what if the designer has a great idea that’s relevant but doesn’t illustrate the text she’s been given? Sure, she could talk to the writer, but that will slow things down, and it will look like “rework” in the project flow – and rework is the ultimate sin in process management. More likely, the designer will just let go of her inspiration and illustrate what was originally requested. The process wins. The organization loses.

AI tools or apps by themselves don’t change this. It doesn’t matter if the text is written by AI and then the graphics are created by AI. You still have the same lost opportunity -- and, if anything, the AIs are even less likely than people to break the rules in service of a good idea.

What’s needed here is not orchestration, which manages how work moves from one box to the next, but collaboration, which manages how different boxes are combined so each can benefit from the other.

This is a critical distinction, so it’s worth elaborating a bit. Orchestration implies central planning and control: one authority determines who will do what and when. Any deviation is problematic. It’s great for ensuring that a process is repeated consistently, but requires the central authority to make any changes. The people running the individual tasks may have some autonomy to change what they do, but only if the inputs and outputs remain the same. The image of one orchestra conductor telling many musicians what to do is exactly correct.

Collaboration, on the other hand, assumes people work as a team to create an outcome, and are free to reorganize their work as they see fit. The team can include people from many different specialties who consult with each other. There’s no central authority and changes can happen quickly so long as all team members understand what they need to do. There’s no penalty for doing work outside the standard sequence, such as the designer showing her idea to the copywriter. In fact, that’s exactly what’s supposed to happen. The musical analogy is a jazz ensemble although a clearer example might be a well-functioning hockey or soccer team: players have specific roles but they move together fluidly to reach a shared goal as conditions change.

If you want a different analogy: orchestration is actors following a script, while collaboration is an improv troupe reacting to each other and the audience. Both can be effective but only one is adaptable.

Of course, there’s nothing new about collaboration. It’s why we have cross-functional teams and meetings. But the time those teams spend in meetings is expensive and the more people and tasks are handled in the same team, the more time those meetings take up. Fairly soon, the cost of collaboration outweighs its benefits. This is why well-managed companies limit team size and meeting length.

What makes this important today is that AI isn’t subject to the same constraints on collaboration as mere humans. An AI can consider many more tasks simultaneously with collaboration costs that are close to zero. In fact, there’s a good chance that collaboration costs within a team of specialist AIs will be less than communication costs of having separate specialist AIs each execute one task and then send the output to the next specialist AI.

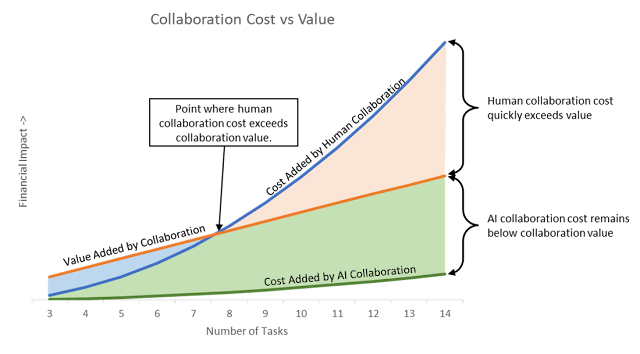

If you want to get pseudo-mathy about it, write equations that compare the value and cost added by collaboration with the value and cost of doing each task separately. The key relationship as you add tasks is that collaboration cost grows quadratically (with the square of the number of tasks) while value grows linearly.* This means collaboration cost increases faster than value, until at some point it exceeds the value. That point marks the maximum effective team size.

We can do the same calculation where the work is being done by AIs rather than humans. Let’s generously (to humans) assume that AI collaboration adds the same value as human collaboration. This means the only difference is the cost of collaboration, which is vastly lower for the AIs. Even if AI collaboration cost also rises faster than linearly, it won’t exceed the value added by collaboration until the number of tasks is very, very large.
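For anyone who wants to play with the idea, here is a minimal Python sketch of that comparison. It borrows the meeting-hours model from the footnote (n meetings of n people for collaboration, one two-person handoff per task for sequential work). Everything else is an assumption made up for illustration: the names `extra_value_per_task` and `cost_per_hour`, and the specific numbers plugged in at the end, are hypothetical and not measured from anything.

```python
def collaboration_hours(tasks: int) -> int:
    """Footnote model: n one-hour meetings with n people each."""
    return tasks * tasks


def sequential_hours(tasks: int) -> int:
    """Footnote model: one one-hour, two-person handoff per task."""
    return 2 * tasks


def net_gain(tasks: int, extra_value_per_task: float, cost_per_hour: float) -> float:
    """Extra value from collaborating minus the extra meeting cost it causes."""
    extra_value = extra_value_per_task * tasks
    extra_hours = collaboration_hours(tasks) - sequential_hours(tasks)
    return extra_value - cost_per_hour * extra_hours


def max_effective_tasks(extra_value_per_task: float, cost_per_hour: float,
                        limit: int = 1_000_000) -> int:
    """Largest task count at which collaboration has not yet become a net loss."""
    best = 0
    for tasks in range(1, limit + 1):
        if net_gain(tasks, extra_value_per_task, cost_per_hour) < 0:
            break
        best = tasks
    return best


# Hypothetical inputs: same extra value per task, but an AI "hour" of
# coordination is assumed to cost 1/100th of a human hour.
human_limit = max_effective_tasks(extra_value_per_task=300, cost_per_hour=100)
ai_limit = max_effective_tasks(extra_value_per_task=300, cost_per_hour=1)
print(human_limit, ai_limit)
```

With these invented inputs the human team tops out at 5 tasks and the AI team at 302. The exact figures mean nothing; the point is how far the break-even moves when only the coordination cost changes.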

Of course, finding the actual values for those graphs would be a lot of work, and, hey, this is just a blog post. My main point is that collaboration allows organizations to restructure work so that formerly separate tasks are performed together. Building and integrating task-specific apps won’t do this, no matter how cheaply they’re created or connected. My secondary point is that AI increases the amount of profitable collaboration that’s possible, which means it increases the opportunity cost of sticking with the old task structure.

As it happens, we don’t need to imagine how AI-based collaboration might work. Machine learning systems today offer a real-world example of the difference between human work and AI-based collaboration.

Before machine learning, building a predictive model typically followed a process divided into sequential tasks: the data was first collected, then cleaned and prepped for analysis, then explored to select useful inputs, then run through modeling algorithms. Results were then checked for accuracy and robustness and, when a satisfactory scoring formula was found, it was transferred into a production scoring system. Each of those tasks was often done by a different person, but, even if one person handled everything, the tasks were sequential. It was painful to backtrack if, say, an error was discovered in the original data preparation or a new data source became available late in the process.

With machine learning, this sequence no longer exists. Techniques differ, but, in general, the system trains itself by testing a huge number of formulas, learning from past results to improve over time. Data cleaning, preparation, and selection are part of this testing, and may be added or dropped based on the performance of formulas that include different versions. In practice, the machine learning system will probably draw on fixed services such as address standardization or identity resolution. But it at least has the possibility of testing methods that don’t use those services. More important, it will automatically adjust its internal processes to produce the best results as conditions change. This makes it economical to rebuild models on a regular basis, something that can be quite expensive using traditional methods.

Note that it might be possible to take the machine learning approach by connecting separate specialist AI modules. But this is where connection costs become an issue, because machine learning runs an enormous number of tests. This would create a very high cumulative cost of moving data between specialist modules. An integrated system will have fewer internal connections, keeping the coordination costs to a minimum.

I may have wandered a bit here, so let me summarize the case against the Lego analogy:

- It ignores integration costs. You can snap together Lego blocks, but you can’t really snap together software modules.

- It ignores product differences. Lego blocks are interchangeable, but software modules are not. Selecting the right software module requires significant time, effort, and expertise.

- It prevents realignment of tasks, which blocks improvements, reduces agility, and increases collaboration costs. This is especially important when AI is added to the picture, because expanded collaboration is a major potential benefit from AI technologies.

Lego blocks are great toys but they’re a poor model for system development. It’s time to move on.

___________________________________________________________

* My logic is that collaboration cost is essentially the amount of time spent in meetings. This is a product of the number of meetings and the number of people in each meeting. If you assume each task adds one more meeting and one more team member, and each meeting lasts one hour, then a one-task project has one meeting with one person (one hour, talking to herself), a two-task project has two meetings with two people in each (four hours), a three-task project has three meetings with three people (nine hours), and so on. When tasks are done sequentially, there is presumably a kick-off at the start of each task, where the previous team hands the work off to the new team. So each task adds one meeting of two people, or two hours, which is a linear increase.

There’s no equivalently easy way to estimate the value added by collaboration, but it must grow by some amount with each added task (i.e., linearly), and it’s likely that diminishing returns prevent it from increasing endlessly. So linear growth is a reasonable, if possibly conservative, assumption. It’s clearer that cumulative value will grow when tasks are performed sequentially, since otherwise the tasks wouldn’t be added. Let’s again assume the increase is linear. Presumably the value grows faster with collaboration than with sequential management, but if both are growing linearly, the difference will grow linearly as well.
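Written out as a rough sketch under those same assumptions, with n as the number of tasks (the symbols h, for the cost of one person-hour, and Δv, for the extra value collaboration adds per task, are my own shorthand for illustration), the meeting hours are:

$$
H_{\text{collab}}(n) = n \cdot n = n^{2}, \qquad H_{\text{seq}}(n) = 2n
$$

Strictly speaking, that is quadratic growth rather than exponential, but it still outruns anything linear. The net gain from collaborating is then:

$$
G(n) = \Delta v \, n - h\,\bigl(n^{2} - 2n\bigr), \qquad G(n) \ge 0 \iff n \le 2 + \frac{\Delta v}{h}
$$

So in this toy model the maximum effective team size is about 2 + Δv/h, and cutting the hourly cost h raises that ceiling in direct proportion.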
