The fourth and final item I had planned to add to the Demand Generation Guide Web site was posted yesterday. This is a spreadsheet on calculating the business value of a demand generation system. Basically it defines a formula for calculating profit based on factors that are affected by a demand generation system: number of leads, lead-to-customer conversion rate, net margin per customer, acquisition cost, lead handling cost and sales cost. It then identifies which factors are affected by different demand generation applications (lead generation campaigns, lead nurturing campaigns, lead distribution, reporting). These are set out on a spreadsheet so users can enter the current values and then make changes to reflect gains expected from the demand generation system. The system calculates profits from both sets of figures and shows the difference, which is the value of the new system.

Nothing fancy about all that, but bringing it down to the different applications adds a note of reality to the usual "pull numbers out of thin air" approach, I think.
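
For readers who think better in code than in spreadsheets, the calculation described above can be roughly sketched in Python. The post names the six factors but not the formula itself, so the way they are combined here (acquisition and handling costs per lead, sales cost per customer) is an assumption, as are the class and function names. The sample figures are invented purely for illustration, and the actual worksheet may be laid out differently.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Funnel:
    """The six factors named in the post. Units are assumptions for this sketch."""
    leads: float                      # number of leads per period
    conversion_rate: float            # lead-to-customer conversion rate (0 to 1)
    margin_per_customer: float        # net margin per customer
    acquisition_cost_per_lead: float  # assumed to be charged per lead
    handling_cost_per_lead: float     # assumed to be charged per lead
    sales_cost_per_customer: float    # assumed to be charged per customer won

    def profit(self) -> float:
        customers = self.leads * self.conversion_rate
        margin = customers * self.margin_per_customer
        lead_costs = self.leads * (
            self.acquisition_cost_per_lead + self.handling_cost_per_lead
        )
        sales_costs = customers * self.sales_cost_per_customer
        return margin - lead_costs - sales_costs


def system_value(current: Funnel, expected: Funnel) -> float:
    """Value of the new system: profit with expected gains minus current profit."""
    return expected.profit() - current.profit()


# Invented example: lead nurturing is assumed to lift conversion and
# trim handling cost, while every other factor stays the same.
current = Funnel(
    leads=1000,
    conversion_rate=0.05,
    margin_per_customer=2000,
    acquisition_cost_per_lead=40,
    handling_cost_per_lead=10,
    sales_cost_per_customer=500,
)
expected = replace(current, conversion_rate=0.06, handling_cost_per_lead=8)

print(f"Current profit:  {current.profit():,.0f}")
print(f"Expected profit: {expected.profit():,.0f}")
print(f"System value:    {system_value(current, expected):,.0f}")
```

Changing only the factors that a given application touches, as `replace` does here, is what keeps the estimate tied to specific applications rather than to a single overall guess.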

The business case worksheet and other articles are available for free at www.raabguide.com.

Friday, October 31, 2008

Monday, October 27, 2008

Demand Gen vs. CRM Paper Now Available

Over the weekend I completed "Demand Generation vs. Customer Relationship Management", the third in my trio of papers explaining where demand generation systems fit into the larger world of customer management software. Like the other two, it is available at the Raab Guide to Demand Generation Systems Web site, http://www.raabguide.com/. This one was much easier to write because I was able to draw on the six-task framework established in the first of the trio, "Introduction to Demand Generation Systems". It's all good stuff, in my humble and unbiased opinion. Do take a look.

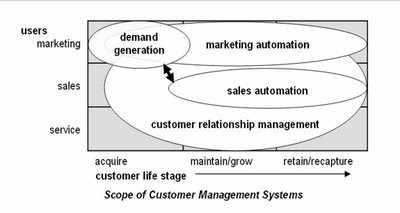

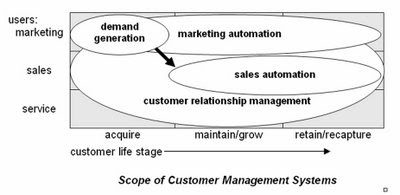

While writing the new paper I slightly revised the scope diagram I published last week. The new one is below. Changes are subtle--a two-headed arrow and showing some slight overlap between demand generation and sales automation.

Thursday, October 23, 2008

Demand Generation Overview

As I promised (threatened?) in my last post, I've been furiously writing articles to explain demand generation for the new Guide Web site. I just finished #2, the not-very-creatively titled "Introduction to Demand Generation Systems" and posted it there.

I won't recap the piece in detail, but am pleased that it does contain pictures. One illustrates my conception of how demand generation systems fit into the world of marketing systems, as follows:

Is that cute or what?

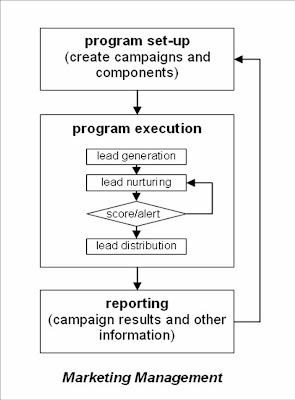

The others are a pair of flow charts illustrating four tasks within the lead management cycle...

... and then how those fit into the larger marketing management process:

Anyway, you probably knew all that. But I do like the pictures.

Monday, October 20, 2008

Marketing Automation vs. Demand Generation: What's the Difference?

One of the first people I told about the new Guide to Demand Generation Systems -- an experienced database marketing consultant, no less -- was receptive to the Guide but asked whether there was any real difference between "demand generation" and "marketing automation" in general. This set off all kinds of alarms, since this was someone who clearly should have been familiar with the distinction.

As with most things, my immediate reaction was to write up an answer. In fact, I've decided I need at least three pieces on the topic: one explaining demand generation in general; one explaining how it differs from marketing automation; and another distinguishing it from customer relationship management (CRM). All this is on top of the piece I was in the middle of writing, on how to cost-justify a demand generation system. These are all fodder for the "Downloadable Resources" section of the new Guide site (have I mentioned in this paragraph that it's http://www.raabguide.com/?), which was looking pretty threadbare at just two pieces.

Anyway, the piece comparing demand generation vs. marketing automation is just finished and posted you-know-where. The short answer is demand generation systems serve marketers who are focused on the lead acquisition and nurturing portion of the customer life cycle, while marketing automation systems serve marketers who are responsible for managing the entire life cycle: acquisition, maintenance and retention. I suspect this distinction will not be popular among some of you, who will correctly point out that demand generation systems can be and are sometimes used for on-going customer management programs. True enough, but I'd still say the primary focus and competitive advantage of demand generation systems is in the pre-customer stage. One big reason demand generation systems aren't really suited for cradle-to-grave customer management: they don't typically capture the sales and service transactions needed to properly target customer communications.

By all means, download the full paper from http://www.raabguide.com/ and let me know your thoughts.

Wednesday, October 15, 2008

Department of the Obvious: Anti-Terrorist Data Mining Doesn't Work

I've emerged from the cave where Osama bin Laden and I were working on the new Guide to Demand Generation Systems (oops -- the Osama part was supposed to be secret) and am now catching up with the rest of the world. One news item that caught my attention described a recent National Research Council report that concluded data mining to find terrorists "is neither feasible as an objective nor desirable as a goal of technology development efforts." See "Government report: data mining doesn't work well" from CNET.

The article pretty much speaks for itself and is no surprise to anyone who is even remotely familiar with the actual capabilities of the underlying technology. Sadly, this group is a tiny minority while the rest of the world bases its notions of what's possible on movies like Minority Report and Enemy of the State, and TV shows like 24. (Actually, I've never seen 24, so I don't really know what claims it makes for technology.) So even though this is outside the normal range of topics for this blog, it's worth publicizing a bit in the hopes of stimulating a more informed public conversation.

Tuesday, October 14, 2008

Sample Guide Entries Now Available on the New Site

The new Guide Web site is now fully functional at www.raabguide.com. Please visit and comment. If you want to make a purchase, even better.

Per yesterday's post regarding the comparison matrix and vendor tables, extracts of both are available on the site (under 'Look Inside' on the 'Guide' page). These will give a concrete view of the difference between the two formats.

I'm sure I'll be adding more to the site over time. For the moment, we have to turn our attention to marketing: the press release should have gone out today but I haven't heard from the person working on it. Tomorrow, perhaps.

Monday, October 13, 2008

Free Usability Assessment Worksheet!

I won’t claim a direct cause-and-effect relationship, but is it really just a coincidence that the stock market finally had a good day exactly when my new Guide to Demand Generation Systems is about to be released? Think about it.

That said, the new Guide Web site is in the final testing and should be launched tomorrow. It might even be working by the time you read this: try http://www.raabguide.com/. The Guide itself has been circulating in draft among the vendors for about two weeks. The extra time was helpful since it allowed a final round of corrections triggered by the yes/no/maybe comparison matrix.

I still feel this sort of matrix oversimplifies matters, but it does seem to focus vendors’ attention in a way that less structured descriptions do not. In fact, I’m wondering whether I should drop the structured descriptions altogether, and just show the matrix categories with little explanatory notes. Readers would lose some nuance, but if nobody pays attention to the descriptions anyway, it might be a good choice for future editions. It would certainly save me a fair amount of work. Thoughts on the topic are welcome (yes, I know few of you have actually seen the Guide yet. I’m still considering how to distribute samples without losing sales.)

Part of my preparation for the release has been to once more ponder the question of usability, which is central to the appeal of several Guide vendors. A little external research quickly drove home the point that usability is always based on context: it can only be measured for particular users for particular functions in particular situations. This was already reflected in my thinking, but focusing on it did clarify matters. It actually implies two important things:

1. each usability analysis has to start with a definition of the specific functions, users and conditions that apply to the purchasing organization. This, in turn, means

2. there’s no way to create a generic usability ranking.

Okay, I’ll admit #2 is a conclusion I’m very happy to reach. Still, I do think it’s legitimate. More important, it opens a clear path towards a usability assessment methodology. The steps are:

- define the functions you need, the types of users who perform each function, and the conditions the users will work under. “Types of users” vary by familiarity with the system, how often they use it, their administrative rights, and their general skill sets (e.g. marketers vs. analysts vs. IT specialists). The effort required for a given task varies greatly for different user types, and so do the system features that are most helpful. To put it in highway terms: casual users need directions and guardrails; experienced users like short cuts.

“Conditions” are variables like the time available for a task, the number of tasks to complete, the cost of making an error, and external demands on the user’s time. A system that’s optimized for one set of conditions might be quite inefficient under another set. For example, a system designed to avoid errors through careful review and approvals of new programs might be very cumbersome for users who don’t need that much control.

- assess the effort that the actual users will spend on the functions. The point is that having a specific type of user and set of conditions in mind makes it much easier to assess a system’s suitability. Ideally, you would estimate the actual hours per year for each user group for each task (recognizing that some tasks may be divided among different user types). But even if you don't have that much detail, you should still be able to come up with a score that reflects which systems are easier to use in a particular situation.

- if you want to get really detailed, break apart the effort associated with each task into three components: training, set-up (e.g. a new email template or campaign structure), and execution (e.g. customizing an email for a particular campaign). This is the most likely way for labor to be divided: more skilled users or administrators will set things up, while casual users or marketers will handle day-to-day execution. This division also matches important differences among the systems themselves: some require more set-up but make incremental execution very easy, while others need less set-up for each project but allow less reuse. It may be hard to actually uncover these differences in a brief vendor demonstration, but this approach at least raises the right question and gives a framework for capturing the answers.

- after the data is gathered, summarize it in a traditional score card fashion. If the effort measures are based on hours per year, no weighting is required; if you used some other type of scoring system, weights may be needed. You can use the same function list for traditional functionality assessments, which boil down to the percentage of requirements (essential and nice-to-have) each system can meet. Functional scores almost always need to be weighted by importance. Once you have functionality and usability scores available, comparing different systems is easy.
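
The steps above can be sketched as a small score card in Python. This is only an illustration under stated assumptions: the system names, functions, user types, hours and importance weights are all invented, and the two helper functions are hypothetical rather than taken from the worksheet. Usability is totaled as estimated hours per year (so no weighting is needed), while functionality is the importance-weighted share of requirements a system meets.

```python
from collections import defaultdict

# Each row: (system, function, user type, component, estimated hours per year).
# Component is one of "training", "setup" or "execution". All values are made up.
effort_rows = [
    ("System A", "email campaign", "marketer", "training", 8),
    ("System A", "email campaign", "administrator", "setup", 20),
    ("System A", "email campaign", "marketer", "execution", 50),
    ("System A", "lead scoring", "analyst", "setup", 12),
    ("System B", "email campaign", "marketer", "training", 16),
    ("System B", "email campaign", "administrator", "setup", 5),
    ("System B", "email campaign", "marketer", "execution", 80),
    ("System B", "lead scoring", "analyst", "setup", 30),
]


def usability_hours(rows):
    """Total estimated hours per year for each system (lower is better)."""
    totals = defaultdict(float)
    for system, _function, _user_type, _component, hours in rows:
        totals[system] += hours
    return dict(totals)


def functionality_score(requirements, supported):
    """Importance-weighted share of requirements met, from 0.0 to 1.0."""
    total_weight = sum(requirements.values())
    met_weight = sum(w for name, w in requirements.items() if name in supported)
    return met_weight / total_weight


# Requirement -> importance weight (invented values).
requirements = {"email campaign": 3, "lead scoring": 2, "landing pages": 1}
supported = {
    "System A": {"email campaign", "lead scoring"},
    "System B": {"email campaign", "lead scoring", "landing pages"},
}

hours = usability_hours(effort_rows)
for system in sorted(hours):
    score = functionality_score(requirements, supported[system])
    print(f"{system}: {hours[system]:.0f} hours/year, functionality {score:.0%}")
```

Keeping the rows at the function, user type and component level means the detail behind each total is still there to inspect when the summary numbers are close.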

In practice, as I’ve said so many times before, the summary scores are less important than the function-by-function assessments going into them. This is really where you see the differences between systems and decide which trade-offs make the most sense.

For those of you who are interested, I’ve put together a Usability Assessment Worksheet that supports this methodology. This is available for free on the new Guide Web site: just register (if registration is working yet) and you’ll be able to download it. I’ll be adding other resources over time as well—hopefully the site will evolve into a useful repository of tools.

Wednesday, October 01, 2008

New Guide is Ready

I've been distracted this week by an unrelated client deadline, but the new Raab Guide to Demand Generation Systems is indeed complete. A proper e-commerce site will be available shortly, but if anyone really can't wait, the salient details are:

- 150+ point comparison matrix and detailed tables on: Eloqua, Manticore, Marketo, Market2Lead and Vtrenz, based on extensive vendor interviews and demonstrations

- price: $595 for single copy, $995 for one-year subscription (provides access to updates as these are made--I expect to add more vendors and update entries on current ones).

- to order in the next few days, contact me via email at draab@raabassociates.com

More details to follow....
