I spent some time recently delving into QlikView’s automation functions, which allow users to write macros to control various activities. These are an important and powerful part of QlikView, since they let it function as a real business application rather than a passive reporting system. But what the experience really did was clarify why QlikView is so much easier to use than traditional software.

Specifically, it highlighted the difference between QlikView’s own scripting language and the VBScript used to write QlikView macros.

I was going to label QlikView scripting as a “procedural” language and contrast it with VBScript as an “object-oriented” language, but a quick bit of Wikipedia research suggests those may not be quite the right labels. Still, whatever the nomenclature, the difference is clear when you look at the simple task of assigning a value to a variable. With QlikView scripts, I use a statement like:

Set Variable1 = '123';

With VBScript using the QlikView API, I need something like:

set v = ActiveDocument.GetVariable("Variable1")

v.SetContent "123",true

That the first option is considerably easier may not be an especially brilliant insight. But the implications are significant, because they mean vastly less training is needed to write QlikView scripts than to write similar programs in a language like VBScript, let alone Visual Basic itself. This in turn means that vastly less technical people can do useful things in QlikView than with other tools. And that gets back to the core advantage I’ve associated with QlikView previously: that it lets non-IT people like business analysts do things that normally require IT assistance. The benefit isn’t simply that the business analysts are happier or that IT gets a reduced workload. It's that the entire development cycle is accelerated because analysts can develop and refine applications for themselves. Otherwise, they'd be writing specifications, handing these to IT, waiting for IT to produce something, reviewing the results, and then repeating the cycle to make changes. This is why we can realistically say that QlikView cuts development time to hours or days instead of weeks or months.

Of course, any end-user tool cuts the development cycle. Excel reduces development time in exactly the same way. The difference lies in the power of QlikView scripts. They can do very complicated things, giving users the ability to create truly powerful systems. These capabilities include all kinds of file manipulation—loading data, manipulating it, splitting or merging files, comparing individual records, and saving the results.

The reason it’s taken me so long to recognize that this is important is that database management is not built into today's standard programming languages. We’ve simply become so used to the division between SQL queries and programs that the distinction feels normal. But reflecting on QlikView script brought me back to the old days of FoxPro and dBase database languages, which did combine database management with procedural coding. They were tremendously useful tools. Indeed, I still use FoxPro for certain tasks. (And not that crazy new-fangled Visual FoxPro, either. It’s especially good after a brisk ride on the motor-way in my Model T. You got a problem with that, sonny?)

Come to think of it, FoxPro and dBase played a similar role in their day to what QlikView offers now: bringing hugely expanded data management power to the desktops of lightly trained users. Their fate was essentially to be overwhelmed by Microsoft Access and SQL Server, which made reasonably priced SQL databases available to end-users and data centers. Although I don’t think QlikView is threatened from that direction, the analogy is worth considering.

Back to my main point, which is that QlikView scripts are both powerful and easy to use. I think they’re an underreported part of the QlikView story, which tends to be dominated by the sexy technology of the in-memory database and the pretty graphics of QlikView reports. Compared with those, scripting seems pedestrian and probably a bit scary to the non-IT users whom I consider QlikView’s core market. I know I myself was put off when I first realized how dependent QlikView was on scripts, because I thought it meant only serious technicians could take advantage of the system. Now that I see how much easier the scripts are than today’s standard programming languages, I consider them a major QlikView advantage.

(Standard disclaimer: although my firm is a reseller for QlikView, opinions expressed in this blog are my own.)

This is the blog of David M. Raab, marketing technology consultant and analyst. Mr. Raab is founder and CEO of the Customer Data Platform Institute and Principal at Raab Associates Inc. All opinions here are his own. The blog is named for the Customer Experience Matrix, a tool to visualize marketing and operational interactions between a company and its customers.

Thursday, January 31, 2008

Friday, January 25, 2008

Alterian Branches Out

It was Alterian's turn this week in my continuing tour of marketing automation vendors. As I’ve mentioned before, Alterian has pursued a relatively quiet strategy of working through partners—largely marketing services providers (MSPs)—instead of trying to sell their software directly to corporate marketing departments. This makes great sense because their proprietary database engine, which uses very powerful columnar indexes, is a tough sell to corporate IT groups who prefer more traditional technology. Service agencies are more willing to discard such technology to gain the operating cost and performance advantages that Alterian can provide. This strategy has been a major success: the company, which is publicly held, has been growing revenue at better than 30% per year and now claims more than 70 partners with more than 400 clients among them. These include 10 of the top 12 MSPs in the U.S. and 12 of the top 15 in the U.K.

Of course, this success poses its own challenge: how do you continue to grow when you’ve sold to nearly all of your largest target customers? One answer is you sell more to those customers, which means expanding the scope of the product. In Alterian’s situation, it also probably means getting the MSPs to use it for more of their own customers.

Alterian has indeed been expanding product scope. Over the past two years it has moved from being primarily a data analysis and campaign management tool to competing as a full-scope enterprise marketing system. Specifically, it added high volume email generation through its May 2006 acquisition of Dynamics Direct, and added marketing resource management through the acquisition of Nvigorate in September of that year. It says those products are now fully integrated with its core system, working directly with the Alterian database engine. The company also purchased contact optimization technology in April 2007, and has since integrated that as well.

The combination of marketing resource management (planning, project management and content management) with campaign execution is pretty much the definition of an enterprise marketing system, so Alterian can legitimately present itself as part of that market.

The pressure that marketing automation vendors feel to expand in this manner is pretty much irresistible, as I’ve written many times before. In Alterian’s case it is a bit riskier than usual because the added capabilities don’t particularly benefit from the power of its database engine. (Or at least I don’t see many benefits: Alterian may disagree.) This means having a broader set of features actually dilutes the company’s unique competitive advantage. But apparently that is a price they are willing to pay for growth. In any case, Alterian suffers no particular disadvantage in offering these services—how well it does them is largely unrelated to its database.

Alterian’s other avenue for expansion is to add new partners outside of its traditional base. The company is moving in that direction too, looking particularly at advertising agencies and systems integrators. These are two quite different groups. Ad agencies are a lot like marketing services providers in their focus on cost, although (with some exceptions) they have fewer technical resources in-house. Alterian is addressing this by providing hosting services when the agencies ask for them. Systems integrators, on the other hand, are primarily technology companies. The attraction of Alterian for them is that they can reduce the technology cost of the solutions they deliver to their clients, thereby either reducing the total cost or allowing them to bill more for other, more profitable services. But systems integrators, like in-house IT departments, are not always terribly price-conscious and are very concerned about the number of technologies they must support. It will be interesting to see how many systems integrators find Alterian appealing.

Mapped against my five major marketing automation capabilities of planning, project management, content management, execution and analytics, Alterian provides fairly complete coverage. In addition to the acquisitions already mentioned, it has expanded its reporting and analytics, which were already respectable and benefit greatly from its database engine. It still relies on alliances (with SPSS and KXEN) for predictive modeling and other advanced analysis, but that’s pretty common even among the enterprise marketing heavyweights. Similarly, it relies on integration with Omniture for Web analytics. Alterian can embed Omniture tags in outbound emails and landing pages, which lets Omniture report on email response at both campaign and individual levels.

Alterian also lacks real-time interaction management, in the sense of providing recommendations to a call center agent or Web site during a conversation with a customer. This is a hot growth area among enterprise marketing vendors, so it may be something the company needs to address. It does have some real-time or at least near-real-time capabilities, which it uses to drive email responses, RSS, and mobile phone messages. It may leverage these to move into this area over time.

If there’s one major issue with Alterian today, it’s that the product still relies on a traditional client/server architecture. Until recently, Web access often appeared on company wish lists, but wasn’t actually important because marketing automation systems were primarily used by a small number of specialists. If anything, remote access was more important to Alterian than other vendors because its partners needed to give their own clients ways to use the system. Alterian met this requirement by offering browser access through a Citrix connection. (Citrix allows a user to work on a machine in a different location; so, essentially, the agency’s clients would log into a machine that was physically part of the MSP’s in-house network.)

What changes all this is the addition of marketing resource management. Now, the software must serve large numbers of in-house users for budgeting, content management, project updates, and reporting. To meet this need, true Web access is required. The systems that Alterian acquired for email (Dynamics Direct) and marketing resource management (Nvigorate) already have this capability, as might be expected. But Alterian’s core technology still relies on traditional installed client software. The company is working to Web-enable its entire suite, a project that will take a year or two. This involves not only changing the interfaces, but revising the internal structures to provide a modern services-based approach.

Thursday, January 17, 2008

Aprimo 8.0 Puts a New Face on Campaign Management

Loyal readers will recall a series of posts before New Year’s providing updates on the major marketing automation vendors: SAS, Teradata, and Unica. I spoke with Aprimo around the same time, but had some follow-up questions that were deferred due to the holidays. Now I have my answers, so now you get your post.

Aprimo, if you’re not familiar with them, is a bit different from the other marketing automation companies because it has always focused on marketing administration: that is, planning, budgeting, project management and marketing asset management. It has offered traditional campaign management as well, although with more of a business-to-business slant than its competitors. Over the past few years the company acquired additional technology from DoubleClick, picking up the SmartPath workflow system and Protagona Ensemble consumer-oriented campaign manager. Parts of these have since been incorporated into its product.

The company’s most recent major release is 8.0, which came out last November. This provided a raft of analytical enhancements, including real-time integration with SPSS, near-real-time integration with WebTrends Web analytics, a data mart generator to simplify data exports, embedded reports and dashboards, and rule-based contact optimization.

The other big change was a redesigned user interface. This uses a theme of “offer management” to unify planning and execution. In Aprimo’s terms, planning includes cell strategy, marketing asset management and finance, while execution includes offers, counts and performance measurement.

Campaigns, the traditional unit of marketing execution, still play an important role in the system. Aprimo campaigns contain offers, cells and segmentations, which are all defined independently: one cell can be used in several segmentations, one offer can be applied to several cells, and one cell can be linked to several offers.

This took some getting used to. I generally think of cells as subdivisions within a segmentation, but in Aprimo those subdivisions are called lists. A cell is basically a label for a set of offers. The same cell can be attached to different lists, even inside the same segmentation. The same offers are attached to each cell across all segmentations. This provides some consistency, but is limited since a cell may use only some of its offers with a particular list. (Offers themselves can be used with different treatments, such as a postcard, letter, or email.) Cells also have tracking codes used for reporting. These can remain consistent across different lists, although the user can change the code for a particular list if she needs to.

Complex as this may sound, its purpose was simplicity. The theory is that each marketer works with certain offers, so their view can be restricted to those offers by letting them work with a handful of cells. This actually makes sense when you think about it.

The 8.0 interface also includes a new segmentation designer. This uses the flow chart approach common to most modern campaign managers: users start with a customer universe and then apply icons to define steps such as splits, merges, deduplication, exclusions, scoring, and outputs. Version 8.0 adds new icons for response definition, contact optimization, and addition of lists from outside the main marketing database. The system can give counts for each node in the flow or for only some branches. It can also apply schedules to all or part of the flow.

The analytical enhancements are important but don’t require much description. One exception is contact optimization, a hot topic that means different things in different systems. Here, it is rule-based rather than statistical: that is, users specify how customer contacts will be allocated, rather than letting the system find the mathematically best combination of choices. Aprimo’s optimization starts with users assigning customers to multiple lists, across multiple segmentations if desired. Rules can then specify suppressions, priorities for different campaigns, priorities within campaigns, and limits on the maximum number of contacts per person. These contact limits can take into account previous promotion history. The system then produces a plan that shows how many people will be assigned to each campaign.
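To make the rule-based idea concrete, here is a minimal Python sketch of that style of allocation. It is not Aprimo's implementation: the function name, the data layout, and the sample campaigns and customers are all hypothetical, and the only rules modeled are the ones listed above (suppressions, campaign priority, priority within a campaign, and a per-person contact limit that counts prior promotion history).

```python
from collections import defaultdict

def allocate_contacts(candidates, campaign_priority, suppressed,
                      max_contacts, prior_contacts=None):
    """Assign customers to campaigns by fixed rules (hypothetical sketch).

    candidates: campaign name -> customer ids, listed in within-campaign priority order
    campaign_priority: campaign names, highest priority first
    suppressed: customer ids that must never be contacted
    max_contacts: maximum contacts allowed per customer
    prior_contacts: customer id -> contacts already made (promotion history)
    """
    contact_count = defaultdict(int, prior_contacts or {})
    plan = {campaign: [] for campaign in campaign_priority}
    for campaign in campaign_priority:          # higher-priority campaigns pick first
        for customer in candidates.get(campaign, []):
            if customer in suppressed:
                continue                        # suppression rule
            if contact_count[customer] >= max_contacts:
                continue                        # contact limit, including history
            plan[campaign].append(customer)
            contact_count[customer] += 1
    return plan

# Made-up example data, for illustration only
candidates = {
    "upsell": ["c1", "c2", "c3"],
    "newsletter": ["c1", "c2", "c3", "c4"],
}
plan = allocate_contacts(
    candidates,
    campaign_priority=["upsell", "newsletter"],
    suppressed={"c3"},
    max_contacts=1,
    prior_contacts={"c2": 1},
)
counts = {campaign: len(members) for campaign, members in plan.items()}
print(counts)
```

The result is a plan with a head count per campaign, which is the kind of output described above. Nothing here searches for a mathematically best assignment; the user's rules alone decide who gets which contact.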

This type of optimization is certainly valuable, although it is not as sophisticated as optimization available in some other major marketing systems.

I’m giving a somewhat lopsided view of Aprimo by focusing on campaign management. Its greatest strength has always been in marketing administration, which is very powerful and extremely well integrated—something Aprimo’s competitors cannot always say. Anyone with serious needs in both marketing administration and campaign management should give Aprimo a good, close look.

Thursday, January 10, 2008

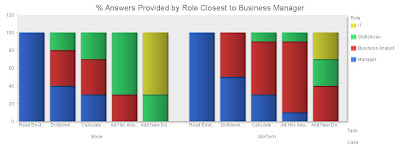

One More Chart on QlikTech

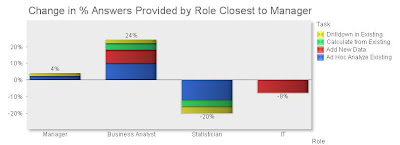

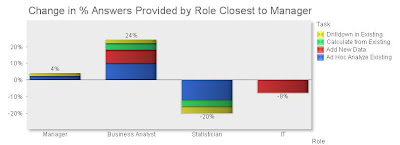

Appearances to the contrary, I do have work to do. But in reflecting on yesterday's post, I did think of one more way to present the impact of QlikTech (or any other software) on an existing environment. This version shows the net change in percentage of answers provided by each user role for each activity type. It definitely shows which roles gain capacity and which have their workload reduced. What I particularly like here is that the detail by task is clearly visible based on the size and colors of segments within the stacked bars, while the combined change is equally visible in the total height of the bars themselves.
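For anyone who wants to reproduce the arithmetic behind this kind of chart, here is a rough Python sketch. The percentages are placeholders I made up for illustration, not the figures from my tables, and the variable names are arbitrary. Each row gives the share of questions of one type answered by each role, before and after the tool is added.

```python
ROLES = ["Business Manager", "Business Analyst", "Statistician", "IT Specialist"]

# Hypothetical shares (percent of questions answered by each role), NOT the post's data
before = {
    "Read Existing Report":           [100, 0, 0, 0],
    "Drilldown in Existing Report":   [25, 75, 0, 0],
    "Calculate from Existing Report": [25, 50, 25, 0],
    "Ad Hoc Analyze in Existing":     [0, 25, 50, 25],
    "Add New Data":                   [0, 0, 25, 75],
}
after = {
    "Read Existing Report":           [100, 0, 0, 0],
    "Drilldown in Existing Report":   [50, 50, 0, 0],
    "Calculate from Existing Report": [25, 65, 10, 0],
    "Ad Hoc Analyze in Existing":     [10, 60, 25, 5],
    "Add New Data":                   [0, 50, 25, 25],
}

# Net change per task and role: these are the segments of each stacked bar
net_change = {
    task: [new - old for old, new in zip(before[task], after[task])]
    for task in before
}

# Total height of each role's bar: the sum of its segments
total_by_role = [sum(column) for column in zip(*net_change.values())]

for role, total in zip(ROLES, total_by_role):
    print(f"{role}: {total:+d}")
```

Because every row adds to 100% in both cases, the totals across roles always net to zero: whatever one role picks up, another gives away.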

In case you were wondering, all these tables and charts have been generated in QlikView. I did the original data entry and some calculations in Excel, where they are simplest. But tables and charts are vastly easier in QlikView, which also has very nice export features to save them as images.

Wednesday, January 09, 2008

Visualizing the Value of QlikTech (and Any Others)

As anyone who knows me would have expected, I couldn't resist figuring out how to draw and post the chart I described last week to illustrate the benefits of QlikTech.

The mechanics are no big deal, but getting it to look right took some doing. I started with a simple version of the table I described in the earlier post, a matrix comparing business intelligence questions (tasks) vs. the roles of the people who can answer them.

Per Friday's post, I listed four roles: business managers, business analysts, statisticians, and IT specialists. The fundamental assumption is that each question will be answered by the person closest to business managers, who are the ultimate consumers. In other words, starting with the business manager, each person answers a question if she can, or passes it on to the next person on the list.

I defined five types of tasks, based on the technical resources needed to get an answer. These are:

- Read an Existing Report: no work is involved; business managers can answer all these questions themselves.

- Drilldown in Existing Report: this requires accessing data that has already been loaded into a business intelligence system and prepared for access. Business managers can do some of this for themselves, but most will be done by business analysts who are more fluent with the business intelligence product.

- Calculate from Existing Report: this requires copying data from an existing report and manipulating it in Excel or something more substantial. Business managers can do some of this, but more complex analyses are performed by business analysts or sometimes statisticians.

- Ad Hoc Analyze in Existing: this requires accessing data that has already been made available for analysis, but is not part of an existing reporting or business intelligence output. Usually this means it resides in a data warehouse or data mart. Business managers don't have the technical skills to get at this data. Some may be accessible to analysts, more will be available to statisticians, and a remainder will require help from IT.

- Add New Data: this requires adding data to the underlying business intelligence environment, such as putting a new source or field in a data warehouse. Statisticians can do some of this but most of the time it must be done by IT.

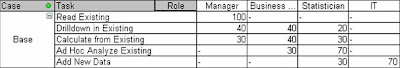

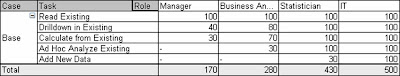

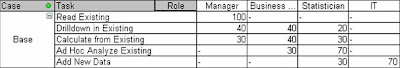

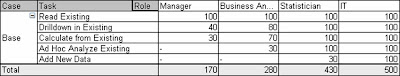

The table below shows the numbers I assigned to each part of this matrix. (Sorry the tables are so small. I haven't figured out how to make them bigger in Blogger. You can click on them for a full-screen view.) Per the preceding definitions, the numbers represent the percentage of questions of each type that can be answered by each sort of user. Each row adds to 100%.

I'm sure you recognize that these numbers are VERY scientific.
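For the curious, the pass-it-down-the-line logic can be sketched in a few lines of Python. This is only an illustration under two assumptions of mine: that each role can handle everything the less technical roles before it can, and that the inputs are the cumulative percentage of questions each role is able to answer. The capability figures below are invented, not taken from the table.

```python
ROLES = ["Business Manager", "Business Analyst", "Statistician", "IT Specialist"]

def cascade_shares(can_answer):
    """Turn 'percent each role could answer' into 'percent each role ends up answering'.

    can_answer lists, in ROLES order, the cumulative percentage of questions a role
    is able to answer. Each question goes to the first role in line that can handle it.
    """
    shares = []
    already_answered = 0
    for capability in can_answer:
        share = max(capability - already_answered, 0)  # only what earlier roles left over
        shares.append(share)
        already_answered += share
    return shares

# Hypothetical capability figures, NOT the numbers from the table
drilldown = cascade_shares([25, 100, 100, 100])
ad_hoc = cascade_shares([0, 25, 75, 100])

print(dict(zip(ROLES, drilldown)))
print(dict(zip(ROLES, ad_hoc)))
```

As long as the last role in line (IT) can answer everything, each row of shares adds to 100%, which matches the way the matrix is built.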

I then did a similar matrix representing a situation where QlikTech was available. Essentially, things move to the left, because less technically skilled users gain more capabilities. Specifically,

- There is no change for reading reports, since the managers could already do that for themselves.

- Pretty much any drilldown now becomes accessible to business managers or analysts, because QlikTech makes it so easy to add new drill paths.

- Calculations on existing data don't change for business managers, since they won't learn the finer points of QlikTech or even have the licenses needed to do really complicated things. But business analysts can do a lot more than with Excel. There are still some things that need a statistician's help.

- A little ad hoc analysis becomes possible for business managers, but business analysts gain a great deal of capability. Again, some work still needs a statistician.

- Adding new data now becomes possible in many cases for business analysts, since they can connect directly to data sources that would otherwise have needed IT support or preparation. Statisticians can also pick up some of the work. The rest remains with IT.

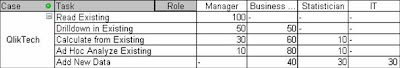

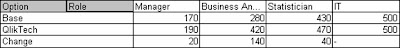

Here is the revised matrix:

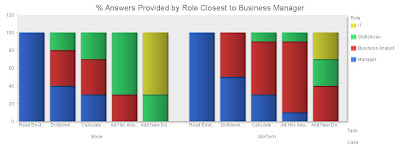

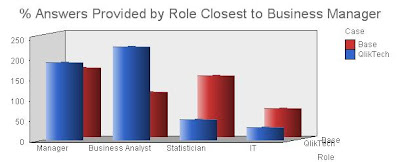

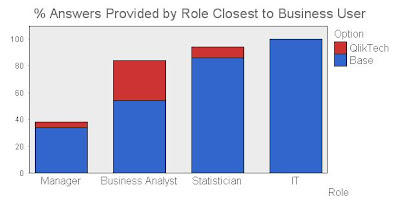

So far so good. But now for the graph itself. Just creating a bar chart of the raw data didn't give the effect I wanted.

Yes, if you look closely, you can see that business managers and business analysts (blue and red bars) gain the most. But it definitely takes more concentration than I'd like.

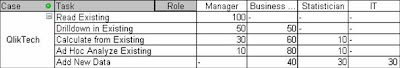

What I really had in mind was a single set of four bars, one for each role, showing how much capability it gained when QlikTech was added. This took some thinking. I could add the scores for each role to get a total value. The change in that value is what I want to show, presumably as a stacked bar chart. But you can't see that when the value goes down. I ultimately decided that the height of the bar should represent the total capability of each role: after all, just because statisticians don't have to read reports for business managers, they still can do it for themselves. This meant adding the values for each row across, so each role accumulated the capabilities of the less technical roles to its left. So, the base table now looked like:

The sum of each column now shows the total capability available to each role. A similar calculation and sum for the QlikTech case shows the capability after QlikTech is added. The Change between the two is each role's gain in capability.

Now we have what I wanted. Start with the base value and stack the change on top of it, and you see very clearly how the capabilities shift after adding QlikTech.
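For anyone who wants to reproduce the arithmetic, here is a minimal sketch in Python. The percentages are made up for illustration (the real ones are in the table images), and the names `base`, `with_tool`, and `total_capability` are hypothetical. It accumulates each row from left to right, sums each column, and takes the difference between the two cases.

```python
from itertools import accumulate

ROLES = ["Business Manager", "Business Analyst", "Statistician", "IT"]

# Hypothetical percentages, NOT the numbers from the post's tables.
# Each row is a task type; each value is the share of questions of that
# type answered by the role in that column. Every row adds to 100.
base = {
    "Read Existing Report":    [100, 0, 0, 0],
    "Drilldown in Existing":   [20, 60, 10, 10],
    "Calculate from Existing": [20, 50, 30, 0],
    "Ad Hoc Analyze":          [0, 20, 50, 30],
    "Add New Data":            [0, 0, 20, 80],
}
with_tool = {
    "Read Existing Report":    [100, 0, 0, 0],
    "Drilldown in Existing":   [50, 50, 0, 0],
    "Calculate from Existing": [20, 70, 10, 0],
    "Ad Hoc Analyze":          [10, 60, 30, 0],
    "Add New Data":            [0, 50, 20, 30],
}

def total_capability(matrix):
    """Accumulate each row left to right, then sum each column."""
    cumulative_rows = [list(accumulate(row)) for row in matrix.values()]
    return [sum(column) for column in zip(*cumulative_rows)]

base_totals = total_capability(base)
tool_totals = total_capability(with_tool)
change = [after - before for before, after in zip(base_totals, tool_totals)]

# The stacked bar for each role is the base value with the gain on top.
for role, before, gain in zip(ROLES, base_totals, change):
    print(f"{role}: base {before}, gain {gain}")
```

With these invented inputs the IT column totals 500 in both cases, so its gain is zero. That follows from every row adding to 100%.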

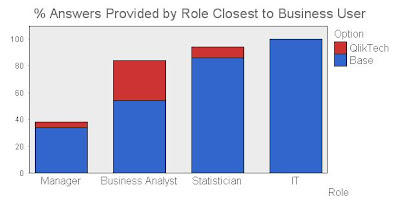

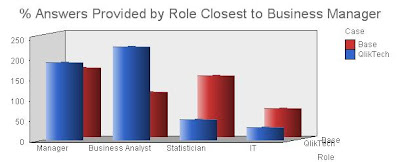

I did find one other approach that I may even like better. This is to plot the sums of the two cases for each group in a three-way bar chart, as below. I usually avoid such charts because they're so hard to read. But in this case it does show both the shift of capability to the left, and the change in workload for the individual roles. It's a little harder to read but perhaps the extra information is worth it.

Obviously this approach to understanding software value can be applied to anything, not just QlikTech. I'd be interested to hear whether anybody else finds it useful.

Fred, this means you.

Friday, January 04, 2008

Fitting QlikTech into the Business Intelligence Universe

I’ve been planning for about a month to write about the position of QlikTech in the larger market for business intelligence systems. The topic has come up twice in the past week, so I guess I should do it already.

First, some context. I’m using “business intelligence” in the broad sense of “how companies get information to run their businesses”. This encompasses everything from standard operational reports to dashboards to advanced data analysis. Since these are all important, you can think of business intelligence as providing a complete solution to a large but finite list of requirements.

For each item on the list, the answer will have two components: the tool used, and the person doing the work. That is, I’m assuming a single tool will not meet all needs, and that different tasks will be performed by different people. This all seems reasonable enough. It means that a complete solution will have multiple components.

It also means that you have to look at any single business intelligence tool in the context of other tools that are also available. A tool which seems impressive by itself may turn out to add little real value if its features are already available elsewhere. For example, a visualization engine is useless without a database. If the company already owns a database that also includes an equally powerful visualization engine, then there’s no reason to buy the stand-alone visualization product. This is why vendors expand their product functionality and why it is so hard for specialized systems to survive. It’s also why nobody buys desk calculators: everyone has a computer spreadsheet that does the same and more. But I digress.

Back to the notion of a complete solution. The “best” solution is the one that meets the complete set of requirements at the lowest cost. Here, “cost” is broadly defined to include not just money, but also time and quality. That is, a quicker answer is better than a slower one, and a quality answer is better than a poor one. “Quality” raises its own issues of definition, but let’s view this from the business manager’s perspective, in which case “quality” means something along the lines of “producing the information I really need”. Since understanding what’s “really needed” often takes several cycles of questions, answers, and more questions, a solution that speeds up the question-answer cycle is better. This means that solutions offering more power to end-users are inherently better (assuming the same cost and speed), since they let users ask and answer more questions without getting other people involved. And talking to yourself is always easier than talking to someone else: you’re always available, and rarely lose an argument.

In short: the way to evaluate a business intelligence solution is to build a complete list of requirements and then, for each requirement, look at what tool will meet it, who will use that tool, what the tool will cost, and how quickly the work will get done.

We can put cost aside for the moment, because the out-of-pocket expense of most business intelligence solutions is insignificant compared with the value of getting the information they provide. So even though cheaper is better and prices do vary widely, price shouldn’t be the determining factor unless all else is truly equal.

The remaining criteria are who will use the tool and how quickly the work will get done. These come down to pretty much the same thing, for the reasons already described: a tool that can be used by a business manager will give the quickest results. More grandly, think of a hierarchy of users: business managers; business analysts (staff members who report to the business managers); statisticians (specialized analysts who are typically part of a central service organization); and IT staff. Essentially, questions are asked by business managers, and work their way through the hierarchy until they get to somebody who can answer them. Who that person is depends on what tools each person can use. So, if the business manager can answer her own question with her own tools, it goes no further; if the business analyst can answer the question, he does and sends it back to his boss; if not, he asks for help from a statistician; and if the statistician can’t get the answer, she goes to the IT department for more data or processing.
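The pass-it-down-the-line rule can be sketched in a few lines of Python. This is only an illustration: the skill sets below are invented, and `who_answers` is a hypothetical helper, not part of any product.

```python
# Roles in order of closeness to the business manager, who asks the question.
HIERARCHY = ["business manager", "business analyst", "statistician", "IT"]

# Hypothetical skills per role; a real list depends on the tools each person can use.
skills = {
    "business manager": {"read report"},
    "business analyst": {"read report", "drilldown", "spreadsheet calculation"},
    "statistician": {"read report", "drilldown", "spreadsheet calculation",
                     "ad hoc analysis"},
    "IT": {"read report", "drilldown", "spreadsheet calculation",
           "ad hoc analysis", "add new data"},
}

def who_answers(question, skills):
    """Return the first role in the hierarchy that can answer, or None."""
    for role in HIERARCHY:
        if question in skills.get(role, set()):
            return role
    return None

for question in ["read report", "drilldown", "add new data"]:
    print(question, "->", who_answers(question, skills))
```

Giving a role more skills moves questions toward the front of the list, which is the effect a tool that empowers less technical users is meant to have.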

Bear in mind that different users can do different things with the same tool. A business manager may be able to use a spreadsheet only for basic calculations, while a business analyst may also know how to do complex formulas, graphics, pivot tables, macros, data imports and more. Similarly, the business analyst may be limited to simple SQL queries in a relational database, while the IT department has experts who can use that same relational database to create complex queries, do advanced reporting, load data, add new tables, set up recurring processes, and more.

Since a given tool does different things for different users, one way to assess a business intelligence product is to build a matrix showing which requirements each user type can meet with it. Whether a tool “meets” a requirement could be indicated by a binary measure (yes/no), or, preferably, by a utility score that shows how well the requirement is met. Results could be displayed in a bar chart with four columns, one for each user group, where the height of each bar represents the percentage of all requirements those users can meet with that tool. Tools that are easy but limited (e.g. Excel) would have short bars that get slightly taller as they move across the chart. Tools that are hard but powerful (e.g. SQL databases) would have low bars for business users and tall bars for technical ones. (This discussion cries out for pictures, but I haven’t figured out how to add them to this blog. Sorry.)

Things get even more interesting if you plot the charts for two tools on top of each other. Just sticking with Excel vs. SQL, the Excel bars would be higher than the SQL bars for business managers and analysts, and lower than the SQL bars for statisticians and IT staff. The overall height of the bars would be higher for the statisticians and IT, since they can do more things in total. Generally this suggests that Excel would be of primary use to business managers and analysts, but pretty much redundant for the statisticians and IT staff.

Of course, in practice, statisticians and IT people still do use Excel, because there are some things it does better than SQL. This comes back to the matrices: if each cell has utility scores, comparing the scores for different tools would show which tool is better for each situation. The number of cells won by each tool could create a stacked bar chart showing the incremental value of each tool to each user group. (Yes, I did spend today creating graphs. Why do you ask?)

Now that we’ve come this far, it’s easy to see that assessing different combinations of tools is just a matter of combining their matrices. That is, you compare the matrices for all the tools in a given combination and identify the “winning” product in each cell. The number of cells won by each tool shows its incremental value. If you want to get really fancy, you can also consider how much each tool is better than the next-best alternative, and incorporate the incremental cost of deploying an additional tool.

Which, at long last, brings us back to QlikTech. I see four general classes of business intelligence tools: legacy systems (e.g. standard reports out of operational systems); relational databases (e.g. in a data warehouse); traditional business intelligence tools (e.g. Cognos or Business Objects; we’ll also add statistical tools like SAS); and Excel (where so much of the actual work gets done). Most companies already own at least one product in each category. This means you could build a single utility matrix, taking the highest score in each cell from all the existing systems. Then you would compare this to a matrix for QlikTech and find cells where the QlikView score is higher. Count the number of those cells, highlight them in a stacked bar chart, and you have a nice visual of where QlikTech adds value.
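Here is a rough Python sketch of that comparison, under loud assumptions: the requirements, the 0-10 utility scores, and the tool names are all invented, and `best_of` and `cells_won` are hypothetical helpers. It builds the best-of-existing matrix by taking the highest score in each cell, then counts the cells where a candidate tool scores higher, per user group.

```python
ROLES = ["manager", "analyst", "statistician", "IT"]

# Hypothetical utility scores (0-10), one list per requirement, one value per role.
existing_tools = {
    "Excel": {
        "standard report": [2, 4, 4, 4],
        "ad hoc query":    [1, 3, 4, 4],
        "new data source": [0, 1, 2, 2],
    },
    "SQL database": {
        "standard report": [0, 2, 6, 8],
        "ad hoc query":    [0, 2, 7, 9],
        "new data source": [0, 0, 5, 9],
    },
}
candidate = {
    "standard report": [3, 6, 6, 6],
    "ad hoc query":    [2, 7, 7, 7],
    "new data source": [0, 5, 5, 6],
}

def best_of(tools):
    """Take the highest score in each cell across all tool matrices."""
    requirements = next(iter(tools.values())).keys()
    return {
        req: [max(scores) for scores in zip(*(m[req] for m in tools.values()))]
        for req in requirements
    }

def cells_won(candidate, baseline):
    """Count, per role, the cells where the candidate beats the baseline."""
    wins = [0] * len(ROLES)
    for req, scores in candidate.items():
        for i, score in enumerate(scores):
            if score > baseline[req][i]:
                wins[i] += 1
    return dict(zip(ROLES, wins))

baseline = best_of(existing_tools)
wins = cells_won(candidate, baseline)
print(wins)
```

The per-role counts in `wins` are the values you would stack on the bar chart. Ties count as no gain here, on the assumption that a tool adds nothing where an existing one is just as good.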

If you actually did this, you’d probably find that QlikTech is most useful to business analysts. Business managers might benefit some from QlikView dashboards, but those aren’t all that different from other kinds of dashboards (although building them in QlikView is much easier). Statisticians and IT people already have powerful tools that do much of what QlikTech does, so they won’t see much benefit. (Again, it may be easier to do some things in QlikView, but the cost of learning a new tool will weigh against it.) The situation for business analysts is quite different: QlikTech lets them do many things that other tools do not. (To be clear: some other tools can do those things, but it takes more skill than the business analysts possess.)

This is very important because it means those functions can now be performed by the business analysts, instead of passed on to statisticians or IT. Remember that the definition of a “best” solution boils down to whatever solution meets business requirements closest to the business manager. By allowing business analysts to perform many functions that would otherwise be passed through to statisticians or IT, QlikTech generates a huge improvement in total solution quality.
