It’s been a while since I wrote about Customer Data Platforms, but only because I’ve been distracted by other topics. The CDP industry has been moving along nicely without my attention: new CDPs keep emerging and the existing vendors are growing.

Fliptop wasn’t on my original list of CDPs, having launched its relevant product just after the initial CDP report was published. But it fits perfectly into the “data enhancement” category, joining Infer, Lattice Engines, Mintigo, Growth Intelligence (which I’ve also yet to review) and ReachForce. Like all the others except ReachForce, the company builds a master database of information about businesses and individuals by scanning social networks, company Web pages, job sites, paid search spend, search engine page rank, and other sources. When it gets a new client, it loads that company’s own customer list and sales from its CRM system, finds those companies and individuals in the Fliptop database, enhances their records with Fliptop data, and uses the combined information to build a predictive model that identifies the likelihood of someone making a purchase. This model can score new leads and classify existing opportunities in the sales pipeline.

So what makes Fliptop different from its competitors? The one objective distinction is that Fliptop is publicly listed on the Salesforce.com App Exchange, meaning it has passed the Salesforce.com security reviews. Not surprisingly, the company’s Salesforce connector is very efficient, automatically pulling down leads, contacts, accounts, and opportunities through the Salesforce API and feeding them into the modeling system. New clients who import only Salesforce data can have a model ready within 24 hours, which is faster than most competitors. But data from other sources may require custom connectors, slowing the process. Fliptop is also able to model quickly because it defaults to predicting revenue: in other systems, part of the set-up time is devoted to deciding what to model against.

Once the model is built, Fliptop scores the client’s entire database and assigns contacts, accounts, and opportunities into classes based on expected results. A typical scheme would create A, B, C, and D lead classes, where A leads are best. Reports show the percentage of records in each group and the expected win rate, which in turn relates to expected revenue. A typical result might find that the top 10% of contacts account for 40% of the expected revenue or that the top 40% of contacts account for 95% of the revenue. Clients can adjust the breakpoints to create custom performance ranges. Reports also show which categories of data are contributing the most to the scoring models: this is more information than some systems provide and is presented quite understandably. (Incidentally, Fliptop reports it has generally found that "fit" data, such as company size and industry, is more powerful than behavioral data such as email clicks and content downloads.)
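To make the class-and-breakpoint idea concrete, here is a minimal Python sketch. It is not Fliptop's code: the `Lead` record, the A/B/C/D cutoffs (top 10%, next 20%, next 30%, remainder), and the revenue figures are all hypothetical, chosen only to show how ranking records by score and cutting the ranking at breakpoints produces a "top 10% of contacts carry 40% of expected revenue" style report.

```python
from dataclasses import dataclass

@dataclass
class Lead:
    lead_id: str
    score: float             # model output, e.g. predicted likelihood of purchase
    expected_revenue: float  # hypothetical expected value of the lead

# Hypothetical cumulative breakpoints: top 10% -> A, next 20% -> B,
# next 30% -> C, remaining 40% -> D. A client could adjust these.
BREAKPOINTS = [("A", 0.10), ("B", 0.30), ("C", 0.60), ("D", 1.00)]

def assign_classes(leads, breakpoints=BREAKPOINTS):
    """Rank leads by score (best first) and slice the ranking at each breakpoint."""
    ranked = sorted(leads, key=lambda lead: lead.score, reverse=True)
    n = len(ranked)
    classes, start = {}, 0
    for label, cutoff in breakpoints:
        end = round(cutoff * n)  # rank position (exclusive) where this class stops
        for lead in ranked[start:end]:
            classes[lead.lead_id] = label
        start = max(start, end)
    return classes

def revenue_share_by_class(leads, classes):
    """Return {class: (share of records, share of expected revenue)}."""
    total = sum(lead.expected_revenue for lead in leads)
    counts, revenue = {}, {}
    for lead in leads:
        label = classes[lead.lead_id]
        counts[label] = counts.get(label, 0) + 1
        revenue[label] = revenue.get(label, 0.0) + lead.expected_revenue
    return {label: (counts[label] / len(leads), revenue[label] / total)
            for label in sorted(counts)}

# Made-up example: ten leads with descending scores.
revenues = [40, 20, 15, 8, 6, 4, 3, 2, 1, 1]
leads = [Lead(f"L{i}", score=1 - i / 10, expected_revenue=r) for i, r in enumerate(revenues)]
classes = assign_classes(leads)
for label, (record_share, revenue_share) in revenue_share_by_class(leads, classes).items():
    print(f"{label}: {record_share:.0%} of records, {revenue_share:.0%} of expected revenue")
```

In this sketch, moving the breakpoints (say, making A the top 20%) only changes the `BREAKPOINTS` list; the scores and ranking stay the same.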

Fliptop scores are loaded into a CRM or marketing automation system where they can be used to prioritize sales efforts and guide campaign segmentation. There are existing connectors for Salesforce.com, Marketo, and Eloqua, and it’s fairly easy to connect with others. New leads can be scored in under one minute, or in a few seconds if the system is directly connected to a lead capture form. Clients can build separate models for different products or segments and receive a score for each model. The system automatically checks for new sales results at regular intervals and adjusts the models when needed.

At present, Fliptop only sends scores to other systems. (Infer takes a similar approach.) The next release of its Salesforce.com integration is expected to add top positive and negative factors on individual records. The company is considering future applications including campaign optimization, pipeline forecasting, and account-level targeting. But it does not plan to match competitors who offer treatment recommendations, sell lists of new prospects, or provide their own behavior tracking pixels.

Pricing for Fliptop is based on data volume and starts at $2,500 per month. The company offers a free 30-day trial – unusual in this segment and possible because set-up is so automated. After the trial, clients are required to sign a one-year contract. The system currently has about three dozen paying clients and a larger number of active trials.

Bottom line: Fliptop does a very good job with predictive lead scoring. Marketers looking for a broader range of applications may find other CDPs are a better fit.

This is the blog of David M. Raab, marketing technology consultant and analyst. Mr. Raab is founder and CEO of the Customer Data Platform Institute and Principal at Raab Associates Inc. All opinions here are his own. The blog is named for the Customer Experience Matrix, a tool to visualize marketing and operational interactions between a company and its customers.

Friday, June 27, 2014

Sunday, June 22, 2014

NextPrinciples Offers Integrated Social Marketing Automation

Social marketing is growing up.

We’ve seen this movie before, folks. It starts when a new medium is created – email, Web, now social. Pioneering marketers create custom tools to exploit it. These are commercialized into “point solutions” that perform a single task such as social listening, posting, and measurement. Point solutions are later combined into integrated products that manage all tasks associated with the medium. Eventually, those medium-specific products themselves become part of larger, multi-medium suites (for which the current buzzword is “omni-channel”).

But knowing the plot doesn’t make a story any less interesting: what matters is how well it’s told. In the case of social marketing, we've reached the chapter where point solutions are combined into integrated products. The challenge has shifted from finding new ideas to meshing existing features into a single efficient machine. More Henry Ford than Thomas Edison, if you will.

NextPrinciples, launched earlier this month, illustrates the transition nicely. Originally envisioned as a platform for social listening and engagement, it evolved before launch into a broader solution that addresses every step in the process of integrating social media with marketing automation. Functionally, this means it provides social listening for lead identification, social data enhancement to build expanded lead profiles, social lead scoring, social nurture campaigns, integration with marketing automation and CRM systems, and reporting to measure results.

It’s important to clarify that NextPrinciples isn’t simply a collection of point solutions. Rather, it is a truly integrated system with its own profile database that is used by all functions. It could operate without any marketing automation or CRM connection if a company wanted to, although that doesn’t sound like a good idea. Its target users are social media marketers who want to work in a single system of their own, rather than relying on point solutions and social marketing features scattered through existing marketing automation and CRM platforms.

The specific functions provided by NextPrinciples are well implemented. Users set up “trackers” to listen to social conversations on Twitter (today) and other public channels (soon), based on inclusion and exclusion keywords, date ranges, location, and language. Users review the tracker results to decide which leads are of interest, and can then pull demographic information from the leads’ public social profiles. Leads can also be imported from marketing automation or CRM systems to be tracked and enhanced. Trackers can be connected with lead scoring rules that rate leads based on demographics and social behaviors, including sentiment analysis of their social content. Qualified leads can be pushed to marketing automation or CRM, as well as entered into NextPrinciples’ own social marketing campaigns to receive targeted social messages. Campaigns can include multiple waves of templated content. The system can track results at the wave and campaign levels. It can also poll CRM systems for revenue data linked to leads acquired through NextPrinciples, thus measuring financial results. Salespeople and other users can view individual lead profiles, including a “heatmap” of topics they are discussing in social channels.
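For readers who want to picture how trackers and scoring rules fit together, here is a rough Python sketch. The `Tracker`, `Post`, and `Lead` classes, the rules, and their point values are hypothetical illustrations of the concepts described above, not NextPrinciples’ actual data model or API; sentiment is assumed to arrive as a number from some upstream analysis step.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass
class Post:
    author: str
    text: str
    posted_on: date
    language: str
    location: str

@dataclass
class Tracker:
    include: list[str]                                # at least one keyword must appear
    exclude: list[str] = field(default_factory=list)  # none of these may appear
    start: date = date.min
    end: date = date.max
    languages: set[str] = field(default_factory=set)  # empty set means any language
    locations: set[str] = field(default_factory=set)  # empty set means any location

    def matches(self, post: Post) -> bool:
        text = post.text.lower()
        return (
            any(k.lower() in text for k in self.include)
            and not any(k.lower() in text for k in self.exclude)
            and self.start <= post.posted_on <= self.end
            and (not self.languages or post.language in self.languages)
            and (not self.locations or post.location in self.locations)
        )

@dataclass
class Lead:
    handle: str
    job_title: str    # pulled from a public social profile
    sentiment: float  # -1.0 (negative) to +1.0 (positive), from an upstream step
    mentions: int     # number of tracker-matched posts by this lead

# Hypothetical scoring rules: (description, predicate, points).
RULES = [
    ("senior title",
     lambda lead: any(t in lead.job_title.lower() for t in ("director", "vice president", "head of")),
     20),
    ("positive sentiment", lambda lead: lead.sentiment > 0.3, 10),
    ("active in the conversation", lambda lead: lead.mentions >= 3, 15),
]

def score(lead: Lead) -> int:
    """Add up the points for every rule the lead satisfies."""
    return sum(points for _, rule, points in RULES if rule(lead))

tracker = Tracker(include=["marketing automation"], exclude=["job opening"], languages={"en"})
post = Post("@pat", "Evaluating marketing automation vendors this quarter",
            date(2014, 6, 20), "en", "US")
lead = Lead("@pat", "Director of Demand Generation", sentiment=0.5, mentions=4)
print(tracker.matches(post), score(lead))
```

Rules like these are transparent and easy to edit, which is also why a predictive model might outperform them once enough outcome data exists.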

If describing these features as “well implemented” struck you as faint praise, you are correct: as near as I can tell, there’s nothing especially innovative going on here. But that’s really okay. NextPrinciples is more about integration than innovation, and its integration seems just fine. I do wonder a bit about scope, though: if this is to be a social marketer’s primary tool, I’d want more connectors for profile data such as company information and influencer scores. I’d also want lead scoring based on predictive models rather than rules. And I’d want more help with creating social content, such as Facebook forms, sharing buttons to embed in emails and landing pages, multi-variate testing and optimization, and semantic analysis of content “meaning”.

NextPrinciples is working on at least some of these and they’ve probably considered them all. As a practical matter, the question marketers should ask is whether NextPrinciples’ current features add enough value to justify trying the system. In this context, pricing matters, and at $99 per month for up to 100 actively managed leads, the risk is quite low. For many firms, the lead identification or publishing features alone would be worth the investment. Remember that NextPrinciples is only the next chapter in an evolving story. It doesn’t have to be the last social marketing system you buy, so long as it moves you a bit further ahead.

Thursday, June 12, 2014

B2B Marketing Automation Vendor Strategies: What's Worked and What's Next

I recently did a study of the strategies of B2B marketing automation vendors. Of the two dozen or so companies in the sample, six were clearly successful (defined as achieving major share within their segment), seven had failed to survive as independent companies and sold for a low price, and the rest fell somewhere in between.

The research identified 28 different strategies which fell into six major groups. Some approaches definitely had better track records than others, but it’s important to recognize that the market has changed over time, so past performance doesn’t necessarily indicate future success. What I found most intriguing was the sheer diversity of the approaches, showing that vendors continue to explore new paths to success.

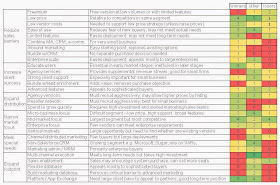

The table below shows results for each strategy for each set of vendors, grouped by the major strategy categories. Most vendors used more than one strategy. Shading indicates the relative frequency of each strategy.

In general, the winners have focused on two of the major strategy groups: reducing sales barriers and expanding distribution. This made considerable sense in the early stages of a new market, when building awareness and market share was critical.

Within these categories, some strategies have worked better than others. Freemium has been particularly unsuccessful, while low price, ease of use, limited features, and agency versions have been applied by vendors with all types of results. Winning vendors were most distinguished by user education, reseller networks, and heavy spending to grow quickly. There is certainly some chicken-and-egg ambiguity about whether the companies were successful because of their strategies or were able to adopt those strategies after some initial success. One thing that doesn't show up on the chart is that some successful vendors have shifted strategies over time, generally moving away from low prices to higher prices and from small businesses to mid-size and larger.

As the market matures, I’d expect different strategies to become more important. Established vendors will need to focus on increasing client success in order to retain the clients and will want to expand their footprint to leverage their installed base, especially through setting themselves up as platforms. Those two shifts are well under way. Smaller vendors will find it harder to challenge the leaders, especially if they lack heavy financing. But they may be able to thrive in niches by focusing on narrow market segments or meeting special client needs.

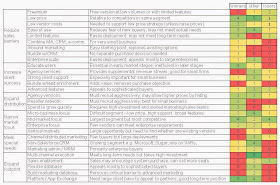

The chart below shows the same data as the table but in a more visual format, for all you right-brainers out there.

Wednesday, June 04, 2014

Marketing Automation Buyer Survey: Many Myths Busted but Planning is Still Key to Success

The marketing automation user survey I mentioned last March has finally been published on the VentureBeat site, where it is available for purchase. At more than 50 pages and with dozens of graphs and charts, it’s not light reading. But it’s still fascinating because the findings challenge much of the industry’s conventional wisdom.

For example, industry deep thinkers often say that deployment failure has more to do with bad users than bad software. The underlying logic runs along the lines that all major marketing automation systems have similar features, and certainly they share a core set that is more than adequate for most marketing organizations. So failure is the result of poor implementation, not choosing the wrong tools.

But, as I reported in my March post, it turns out that 25% of users cited “missing features” as a major obstacle – indicating that the system they bought wasn’t adequate after all. My analysis since then found that people who cited “missing features” are among the least satisfied of all users: so it really mattered that those features were missing. The contrast here is with obstacles such as creating enough content, which were cited by people who were highly satisfied, suggesting those obstacles were ultimately overcome.*

We also found that people who evaluated on “breadth of features” were far more satisfied than people who evaluated on price, ease of learning, or integration. This is independent confirmation of the same point: people who took care to find the features they needed were happy with the result; those who didn’t were not.

But the lesson isn’t just that features matter. Other answers revealed that satisfaction also depended on taking enough time to do a thorough vendor search, on evaluating multiple systems, and (less strongly) on using multiple features from the start. These findings all point to the conclusion that the primary driver of marketing automation success is careful preparation, which means defining in advance the types of programs you’ll run and how you’ll use marketing automation. Buying the right system is just one result of a solid preparation process; it doesn't cause success by itself. So it's correct that results ultimately depend on users rather than technology, but not in the simplistic way this is often presented.

I’d love to go through the survey results in more detail because I think they provide important insights about the organization, integration, training, outside resources, project goals, and other issues. But then I’d end up rewriting the entire report. At the very least, take a look at the executive summary available on the VentureBeat site for free. And if you really care about marketing automation success, tilt the odds in your favor by buying the full report.

__________________________________________________________________________

* I really struggled to find the best way to present this data. There are two dimensions: how often each obstacle was cited and the average satisfaction score (on a scale of 1 to 5) of people who cited that obstacle. The table in the body of the post just shows the deviation of the satisfaction scores from the overall average of 3.21, highlighting the "impact" of each obstacle (with the caveat that "impact" implies causality, which isn't really proven by the correlation). The more standard way to show two dimensions is a scatter chart like the one below, but I find this is difficult to read and doesn't communicate any message clearly.

Another option I tried was a bar graph showing the frequency of each obstacle with color coding to show the satisfaction level. This does show both bits of information but you have to look closely to see the red and green bars: the image is dominated by frequency, which is not the primary message being communicated. If anyone has a better solution, I'm all ears.
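For anyone who wants to experiment with other presentations, the underlying calculation is simple. This Python sketch assumes each response is a satisfaction score from 1 to 5 plus the set of obstacles cited; the sample responses are invented, so the overall average here is not the survey's 3.21. As noted above, the deviation shows a correlation, not proven impact.

```python
from collections import defaultdict
from statistics import mean

def obstacle_impact(responses):
    """responses: list of (satisfaction score 1-5, set of obstacles cited).

    Returns {obstacle: (share of respondents citing it,
                        mean satisfaction of those respondents,
                        deviation from the overall mean)}.
    """
    overall = mean(score for score, _ in responses)
    scores_by_obstacle = defaultdict(list)
    for score, obstacles in responses:
        for obstacle in obstacles:
            scores_by_obstacle[obstacle].append(score)
    n = len(responses)
    return {
        obstacle: (len(scores) / n, mean(scores), mean(scores) - overall)
        for obstacle, scores in scores_by_obstacle.items()
    }

# Invented responses for illustration only; these are not survey data.
responses = [
    (2, {"missing features"}),
    (1, {"missing features", "integration"}),
    (4, {"creating enough content"}),
    (5, {"creating enough content"}),
    (3, {"integration"}),
    (4, set()),
]
# Print obstacles from most negative to most positive deviation.
for obstacle, (share, avg, dev) in sorted(obstacle_impact(responses).items(),
                                          key=lambda kv: kv[1][2]):
    print(f"{obstacle:24} cited by {share:.0%}, avg satisfaction {avg:.2f} ({dev:+.2f} vs overall)")
```

Both dimensions (frequency and deviation) come out of the same pass, so any chart type can be fed from this one table.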
