Summary: Public data gives some insights into Marketo's financial history and prospects. Despite past losses, the company is in a strong position to continue to compete aggressively. (Note: as Marketo has commented below, this article is based on my own analysis and was written without access to Marketo's actual financial information.)

Here’s a bit more on this week's $25 million investment in Marketo: a piece in VentureWire quotes revenue for Marketo as $4.5 million for 2009 and "triple that" ($13.5 million) for 2010. This is the first time I've seen published revenue figures for the company. They allow for some interesting analysis.

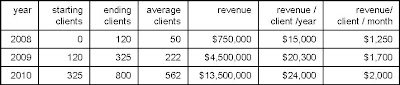

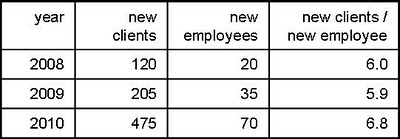

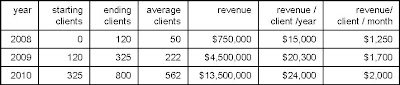

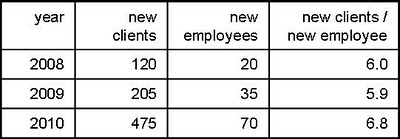

Data I've collected over the years shows that Marketo had about 120 clients at the start of 2009, 325 at the start of 2010, and should end 2010 with about 800. A bit of math yields average counts of about 222 for 2009 and 562 for 2010, which in turn gives average revenue per client of $20,000 per year ($1,700 per month) in 2009 and $24,000 per year ($2,000 per month) in 2010. The table below throws in a reasonable guess for 2008 as well.
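For anyone who wants to check the arithmetic, here is a minimal Python sketch of the calculation. It assumes, as above, that a year's average client count is the simple mean of its start and end counts, so the "start of 2009" figure doubles as the end of 2008. The function names are purely illustrative. Unrounded, the averages come out at 222.5 and 562.5.

```python
# Client counts at the end of each year (the end of 2010 is a projection)
# and VentureWire's revenue figures, in dollars.
clients_end_of = {2008: 120, 2009: 325, 2010: 800}
revenue = {2009: 4_500_000, 2010: 13_500_000}


def average_clients(year: int) -> float:
    """Simple mean of the client counts at the start and end of the year."""
    return (clients_end_of[year - 1] + clients_end_of[year]) / 2


def revenue_per_client(year: int) -> float:
    """Annual revenue divided by the average number of active clients."""
    return revenue[year] / average_clients(year)


for year in (2009, 2010):
    annual = revenue_per_client(year)
    print(f"{year}: {average_clients(year):.1f} clients, "
          f"${annual:,.0f}/year, ${annual / 12:,.0f}/month")
```

Using the unrounded averages gives about $20,200 per year for 2009 and exactly $24,000 for 2010, in line with the rounded figures above.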

Given that Marketo’s list prices start at $2,000 per month for the smallest implementation of its full-featured edition, this is pretty firm evidence that the company has indeed been aggressively discounting its system, as competitors have long stated.

(Some competitors have also said that Marketo's reported client counts are cumulative new clients, without reductions for attrition. If so, the revenue per active client would actually be a bit higher than I've calculated here. But Marketo itself says the reported figures are indeed active clients and I've no basis to doubt them. The following analysis wouldn't change much either way.)

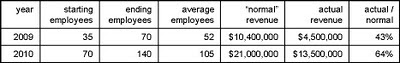

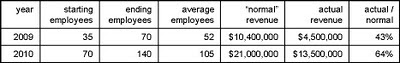

If you’ll accept a bit more speculation, we can even estimate the size of those discounts. That same VentureWire article quotes Marketo’s current headcount as 130 employees, compared with half that number at the start of the year. Assume there were 70 at the start of 2010 (which matches my own data) and will be 140 by year-end, for an average of 105. My records suggest that the headcount at the start of 2009 was around 35, so the average headcount for that year was about 52.

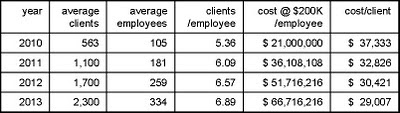

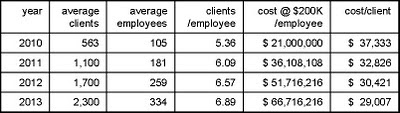

Let’s assume a "normal" revenue of $200,000 per employee, which is about typical for software companies (and matches published figures for Marketo competitors Aprimo and Unica). That means Marketo revenues without discounting “should” have been about $10.4 million in 2009 and $21 million in 2010. Compared with actual revenues, this shows 2009 revenue was about 43% of the “normal” price ($4.5 million actual vs. $10.4 million expected) and 2010 revenue was about 64% ($13.5 million vs. $21 million).
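Here is the same "normal revenue" estimate as a small Python sketch. The $200,000-per-employee figure is the assumption stated above, the headcounts of 35, 70, and 140 come from the previous paragraph, and the yearly averages are rounded down to whole employees to match the 52 and 105 used in the text. The names are illustrative only.

```python
# Headcount at the end of each year (the end of 2010 is the assumed 140).
headcount_end_of = {2008: 35, 2009: 70, 2010: 140}
revenue = {2009: 4_500_000, 2010: 13_500_000}
NORMAL_REVENUE_PER_EMPLOYEE = 200_000  # assumed "typical" software-company figure


def average_headcount(year: int) -> int:
    """Mean of start- and end-of-year headcount, rounded down as in the text."""
    return (headcount_end_of[year - 1] + headcount_end_of[year]) // 2


def normal_revenue(year: int) -> int:
    """Revenue the staff 'should' support if the product sold at full price."""
    return average_headcount(year) * NORMAL_REVENUE_PER_EMPLOYEE


def realization(year: int) -> float:
    """Actual revenue as a fraction of 'normal' revenue."""
    return revenue[year] / normal_revenue(year)


for year in (2009, 2010):
    print(f"{year}: normal ${normal_revenue(year):,}, actual {realization(year):.0%}")
```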

So the good news for Marketo’s new investors is that Marketo has been discounting less (although there’s an alternative explanation that we’ll get to in a minute). The bad news is they have quite a way to go before they’re selling at full price.

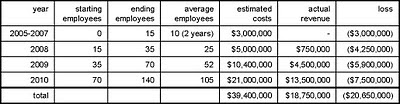

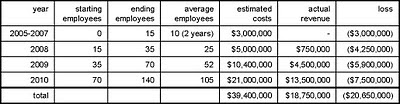

We can use the same data to estimate Marketo’s burn rate. Costs are likely to be very close to the same $200,000 per employee (this includes everything, not just salary), which puts 2009 and 2010 costs at the same $10.4 million and $21 million calculated above. My records suggest the company averaged about 25 employees in 2008, for $5 million in expenses. Marketo was founded in late 2005, so let’s figure it averaged 10 employees during the previous two years, at only $150,000 each per year because the early stage doesn’t involve marketing costs. This adds another $3 million, for a cumulative investment of $39.4 million.

We already know combined revenue for 2009 and 2010 will be about $18 million ($4.5 million plus $13.5 million). The company started selling in late February 2008 and my records show it ended that year with 120 clients. Assume the equivalent of 50 annual clients at $15,000 and you get 2008 revenue of $750,000, for $18.75 million total. That leaves a gap of $20.65 million between life-to-date costs and revenues.
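The life-to-date comparison can be laid out as a short Python sketch. Every input is one of the estimates above (average headcount, cost per employee, and the assumed 50 annual-equivalent clients at $15,000 in 2008); the dictionary layout is just one convenient way to organize them.

```python
# (years, average employees, annual cost per employee) for each period,
# using the estimates in the text.
cost_periods = {
    "2006-2007": (2, 10, 150_000),  # early stage, little marketing spend
    "2008": (1, 25, 200_000),
    "2009": (1, 52, 200_000),
    "2010": (1, 105, 200_000),
}
revenue_by_year = {
    "2008": 50 * 15_000,  # assumed 50 annual-equivalent clients at $15,000
    "2009": 4_500_000,
    "2010": 13_500_000,
}

total_cost = sum(years * staff * cost for years, staff, cost in cost_periods.values())
total_revenue = sum(revenue_by_year.values())
funding_gap = total_cost - total_revenue

print(f"cost ${total_cost:,}, revenue ${total_revenue:,}, gap ${funding_gap:,}")
```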

This nicely matches the “approximately $20 million” investment to date that Marketo CEO Phil Fernandez reported in his own blog post on the new funding.

Now you can see why Marketo needed more money: its losses are actually growing despite having more customers and improved pricing. It lost nearly $16,000 for each new client in 2010 ($7.5 million loss on 475 new clients). At that rate, even a modest increase in the number of new clients would have burned through nearly all of the company’s remaining $12 million within one year.
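The loss figures follow from numbers already on the table. This Python sketch estimates each year's cost as average headcount times $200,000, as above, and spreads the 2010 loss over the 475 clients added that year. The helper names are hypothetical. The same calculation for 2009 gives a loss of about $5.9 million, so the total loss did grow.

```python
COST_PER_EMPLOYEE = 200_000

# year: (average employees, revenue, clients at start of year, clients at end of year)
years = {
    2009: (52, 4_500_000, 120, 325),
    2010: (105, 13_500_000, 325, 800),
}


def annual_loss(year: int) -> int:
    """Estimated cost (headcount x $200,000) minus actual revenue."""
    staff, revenue, _, _ = years[year]
    return staff * COST_PER_EMPLOYEE - revenue


def loss_per_new_client(year: int) -> float:
    """Annual loss spread over the clients added during the year."""
    _, _, start, end = years[year]
    return annual_loss(year) / (end - start)


print(f"2009 loss: ${annual_loss(2009):,}")
print(f"2010 loss: ${annual_loss(2010):,}, "
      f"${loss_per_new_client(2010):,.0f} per new client")
```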

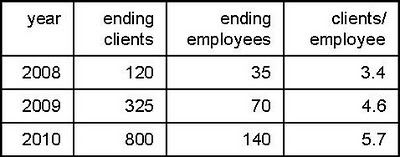

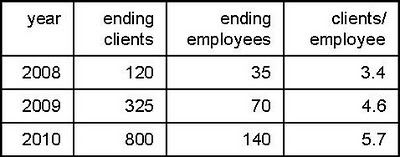

This isn’t just a matter of scale. It’s true that a start-up has to spread its fixed costs over a small number of clients, yielding a high cost per client during the early stages. Marketo shows this effect: the number of clients per employee has grown from 3.4 at the end of 2008 to 5.7 at the end of 2010. This is the alternative to reduced discounting as an explanation for the rising ratio of actual to "normal" revenue (remember: “normal” revenue is based on the number of employees).
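A minimal Python check of that ratio, assuming the year-end estimates used throughout this post (120, 325, and 800 clients; 35, 70, and 140 employees):

```python
clients_end_of = {2008: 120, 2009: 325, 2010: 800}
headcount_end_of = {2008: 35, 2009: 70, 2010: 140}

# Clients served per employee at each year-end.
clients_per_employee = {
    year: clients_end_of[year] / headcount_end_of[year] for year in clients_end_of
}

for year, ratio in clients_per_employee.items():
    print(f"{year}: {ratio:.1f} clients per employee")
```

The intermediate 2009 value comes out at about 4.6.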

But the client/employee ratio can’t improve indefinitely. Many costs are not fixed: staffing for customer support, marketing, sales and administrative functions will all increase as clients are added. To get some idea of Marketo's variable costs, compare the change in clients with the change in employees. This ratio is improving more slowly: about 5.9 new clients per new employee in 2009 (205 new clients, 35 new employees) and 6.8 in 2010 (475 new clients, 70 new employees).

And here’s the problem: at 1 new employee for every 6.8 new clients, Marketo is adding $200,000 in cost for just $163,000 in revenue (6.8 × $24,000 per client). It truly does lose money on each new customer. You can’t grow your way out of that.

So what happens now? Let’s assume Marketo gets a bit more efficient and the ratio of new clients to new employees eventually tops out at a relatively optimistic 8. At a cost of $200,000 per employee, each of those clients has to generate $25,000 a year in revenue ($200,000 / 8) just to cover the added expense. This is just a bit higher than the current $24,000 per client, so it seems pretty doable. But it leaves the existing $7.5 million annual loss in place forever.
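The incremental economics in the last few paragraphs reduce to a couple of one-line formulas. This Python sketch assumes, as the text does, that each new employee costs $200,000 a year; the 6.8 ratio comes from 2010's 475 new clients and 70 new employees. The function names are hypothetical.

```python
COST_PER_EMPLOYEE = 200_000


def new_clients_per_new_employee(clients_added: int, staff_added: int) -> float:
    """Incremental clients served by each incremental employee."""
    return clients_added / staff_added


def revenue_per_new_employee(ratio: float, revenue_per_client: float) -> float:
    """Annual revenue brought in alongside each new employee."""
    return ratio * revenue_per_client


def breakeven_revenue_per_client(ratio: float) -> float:
    """Revenue per client needed for new clients to cover new staff costs."""
    return COST_PER_EMPLOYEE / ratio


ratio_2010 = new_clients_per_new_employee(800 - 325, 140 - 70)  # about 6.8
print(f"2010 ratio: {ratio_2010:.1f}")
print(f"revenue per new employee at 6.8: ${revenue_per_new_employee(6.8, 24_000):,.0f}")
print(f"break-even at 6.8: ${breakeven_revenue_per_client(6.8):,.0f} per client")
print(f"break-even at 8:   ${breakeven_revenue_per_client(8):,.0f} per client")
```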

In other words, Marketo must substantially increase revenue per client to become profitable. (In theory, Marketo could also cut costs. But the main controllable cost is sales and marketing, and incremental cost per sale is likely to rise as the company enters new markets and faces stiffer competition while pushing for continued growth. So higher revenue is the only real option.)

Revenue per client can be increased through higher prices, new products, and/or bigger clients. Pricing will be constrained by competition, although Marketo could probably discount a bit less. This leaves new products and bigger clients.

Those are exactly the areas that Marketo is now pursuing through add-ons such as Revenue Cycle Analytics and Sales Insight, and enhancements for large companies in its Enterprise Edition. So, in my humble opinion, they're doing exactly the right things.

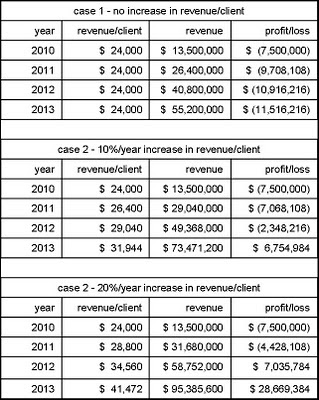

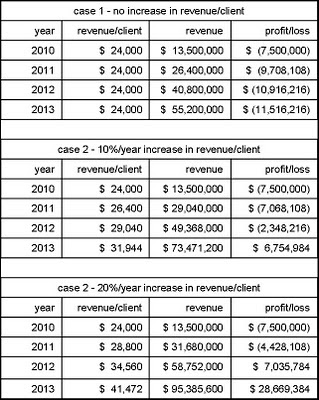

Some back-of-envelope calculations confirm that revenue per client is by far the most important variable in Marketo’s financial future. The following tables use some reasonable assumptions about growth in clients and clients per employee; take my word for it that the results don’t change much if you modify these. But results change hugely depending on what happens to revenue per client: losses continue indefinitely if it remains at the current $24,000 per year; they continue for two years and total $10 million if it increases at 10% per year; and they end after one year and $4.4 million if it grows at 20% per year. Bear in mind that revenue per customer did grow 20% from 2009 to 2010 ($20,000 to $24,000). So I’d expect it to continue rising sharply as Marketo firms up its pricing and starts acquiring larger clients.

Indeed, these figures raise the unexpected (to me) question of whether $25 million in funding is more than Marketo will need. I’d guess the company’s management and current investors were careful not to dilute their equity any more than necessary, so I think they’re planning some heavy investments that are not factored into my assumptions. In fact, the company has said as much: the VentureWire piece quotes Fernandez as stating the new funds will be used for additional sales and marketing staff, to open offices abroad, to integrate with other vendors and launch vertical services in sectors like health care and financial services.

I also expect continued aggressive pricing (perhaps more selectively than in the past) and maybe some acquisitions. It's possible that Marketo will also expand its own professional services staff, since clients definitely need help with adoption. But that would conflict with its existing channel partners, so it would need to move carefully.

What does it all mean? Here are my conclusions:

- Marketo's losses reflect a conscious strategy to grow quickly through aggressive pricing. There is no fundamental problem with its cost structure: the company could be profitable fairly quickly if it decided to slow down and raise prices.

- Marketo's future lies in the middle and upper tiers of the market. Its pressing financial need is to raise revenue per client, which will lead it away from the low-cost, bitterly competitive market serving very small businesses.

- The new funding will support an expanded marketing and product push. Competing with Marketo in its target segments is going to be a challenge indeed.